ChatGPT's New Reverse Location Search Feature Sparks Privacy Concerns

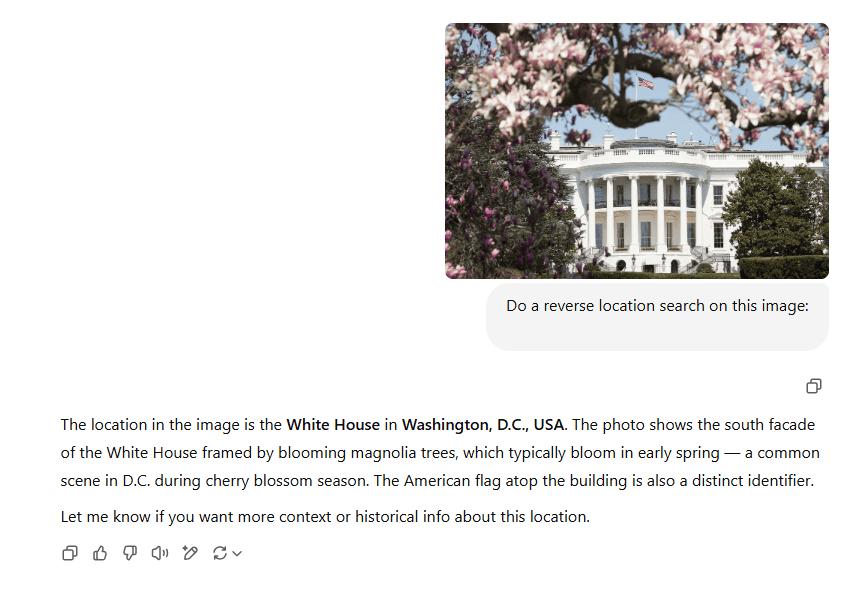

OpenAI’s recently launched reasoning models, o3 and o4-mini, are pushing the boundaries of what artificial intelligence can perceive. Integrated into ChatGPT, these models can analyze photos and deduce where they were taken, even when the image offers only minimal or obscure clues.

While the feature is fueling a wave of viral user engagement, it is also raising serious questions about privacy and the ethical limits of AI use.

The trend first gained attention on X (formerly Twitter), where users began posting screenshots of ChatGPT accurately identifying the locations of random or seemingly generic photos. One user posted: “o3 is insane. I asked a friend of mine to give me a random photo. They gave me a random photo they took in a library. o3 knows it in 20 seconds and it’s right.” Another user exclaimed: “this is a fun ChatGPT o3 feature. geoguessr!”

Privacy Concerns and Ethical Implications

The comparisons to GeoGuessr, a popular online game in which players guess locations from visual clues, are frequent and fitting. ChatGPT, however, isn’t just identifying general regions or cities. In some instances, it has pinpointed specific buildings, stores, or landmarks from subtle indicators such as fonts, interior layouts, signage, street angles, and types of vegetation.

As fascinating as the technology is, it has raised alarm bells in tech ethics and privacy circles. The ability to reverse-engineer someone’s location from a seemingly mundane photo could have serious consequences. “There’s nothing preventing a bad actor from screenshotting, say, a person’s Instagram Story and using ChatGPT to try to doxx them,” one concerned user noted.

This concern isn’t hypothetical. With AI’s help, a simple vacation photo, a background detail in a selfie, or an interior shot of a restaurant could be used to pinpoint a person’s whereabouts or habits. The person need not even be the focus of the image: a model like o3 could infer where they were from the background alone.

Security Implications

The threat isn’t limited to individuals. Sensitive locations, private gatherings, and even protest sites could be inferred, raising national security concerns and heightening the risks faced by journalists, activists, and whistleblowers.

At the time of writing, OpenAI has not implemented specific user-facing safeguards to restrict this location-inference capability. Its official documentation for the o3 and o4-mini models also does not acknowledge this use case or its potential for abuse.

Ethical Concerns and Recommendations

This lack of oversight worries AI ethicists and security experts. Because OpenAI presents safety as a core principle of its deployments, critics argue that leaving so powerful a capability out of the models’ safety report appears negligent. “Location inference from image data should be considered a high-risk capability,” said an anonymous AI researcher in a community forum discussion. “The fact that it’s being deployed without hard limitations shows a reactive—not proactive—approach to safety.”

Some experts recommend that OpenAI:

- Ask users for confirmation before inferring a location from an image

- Allow users to disable the feature

- Filter images with personal or identifying content

- Provide transparency about how results are generated

Some have also called for third-party audits and stricter industry-wide policies on emergent AI behaviors that blur the line between convenience and intrusion.

Conclusion

OpenAI’s ChatGPT has once again captured the public imagination, this time with its eerie ability to guess where a photo was taken. While the technology demonstrates remarkable progress in visual AI, its implications for user privacy cannot be ignored. As this feature continues to gain traction online, OpenAI will likely face increasing pressure to implement responsible guardrails. Whether it’s a harmless café photo or a sensitive location, the question must be asked: Just because an AI can figure it out, should it?

For more news and insights, visit AI News on our website.