The Viral AI Action Figure Trend Could Be Putting Your Privacy at Risk

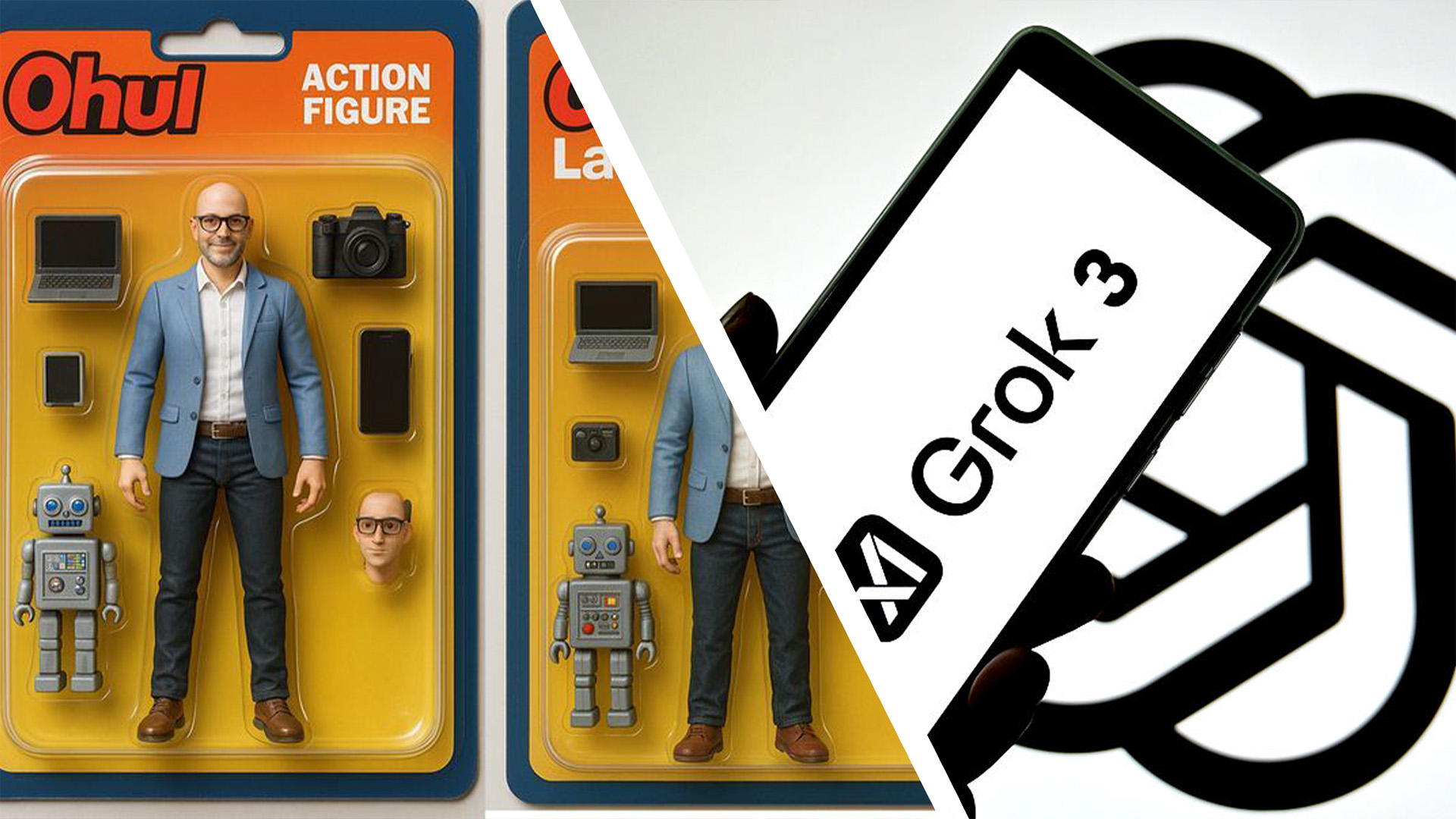

If you’re on social media, you’ve probably seen friends, celebrities and favorite brands turning themselves into action figures with ChatGPT prompts. AI chatbots like ChatGPT are no longer just for generating ideas about what to write. Recent updates have given them the ability to create realistic doll images.

Once you upload a photo of yourself and ask ChatGPT to make an action figure with accessories based on it, the tool generates a plastic-doll version of you that resembles a boxed toy. The AI action figure trend first took off on LinkedIn, but it has since gone viral across social media platforms.

Data Privacy Risks of the Trend

Actor Brooke Shields, for example, recently posted an image of an action figure version of herself on Instagram that came with a needlepoint kit, shampoo, and a ticket to Broadway. People in favor of the trend say, “It’s fun, free, and super easy!” But before you share your own action figure for all to see, experts say you should consider the data privacy risks.

The more you share with ChatGPT, the more realistic your action figure “starter pack” becomes, and posting that pack on social media can be the biggest immediate privacy risk. The accessories can reveal more about you than you might want to share publicly, such as your hobbies and interests, and that information can make you vulnerable to social engineering attacks and phishing attempts.

Privacy Concerns and Cybersecurity Risks

Sharing personal information through these AI tools can expose you to privacy breaches, because hackers can use that data to manipulate you and craft attacks tailored to you. Cybersecurity experts warn against normalizing the sharing of personal information with AI platforms like ChatGPT and stress the importance of safeguarding personal data online.

Future Implications and Long-term Privacy Concerns

The long-term implications of AI models being trained on users' images remain uncertain, which raises concerns about how that data is used and what privacy risks it may carry. While companies like OpenAI say user data is used to improve their models' performance, exactly how that data is applied has not been disclosed.

Users are encouraged to consider what it means to contribute their data to AI training and whether they are comfortable with AI companies potentially monetizing it. Understanding the risks of sharing personal information online is crucial to maintaining your security and privacy.

As the AI doll trend continues to gain popularity, it's essential to put privacy and security first when using these tools, to reduce the risk of your data being exposed or misused.
