The petal problem: Why AI falls short in understanding flowers

A new study from The Ohio State University has found that large language models (LLMs) such as ChatGPT cannot represent the concept of a flower the way humans do. LLMs are trained on language (and, in some cases, images) alone, so they have no direct sensory experience of things like touch or smell.

According to Qihui Xu, the study’s lead author and a postdoctoral researcher in psychology at The Ohio State University, "A large language model can’t smell a rose, touch the petals of a daisy or walk through a field of wildflowers. Without those sensory and motor experiences, it can’t truly represent what a flower is in all its richness."

Can’t Fully Grasp the Concept of ‘Flower’

Xu and her colleagues compared how humans and LLMs represent 4,442 words, ranging from ‘flower’ and ‘hoof’ to ‘humorous’ and ‘swing’.

Humans were compared with four LLMs, two each from OpenAI (GPT-3.5 and GPT-4) and Google (PaLM and Gemini).

Research Methodology

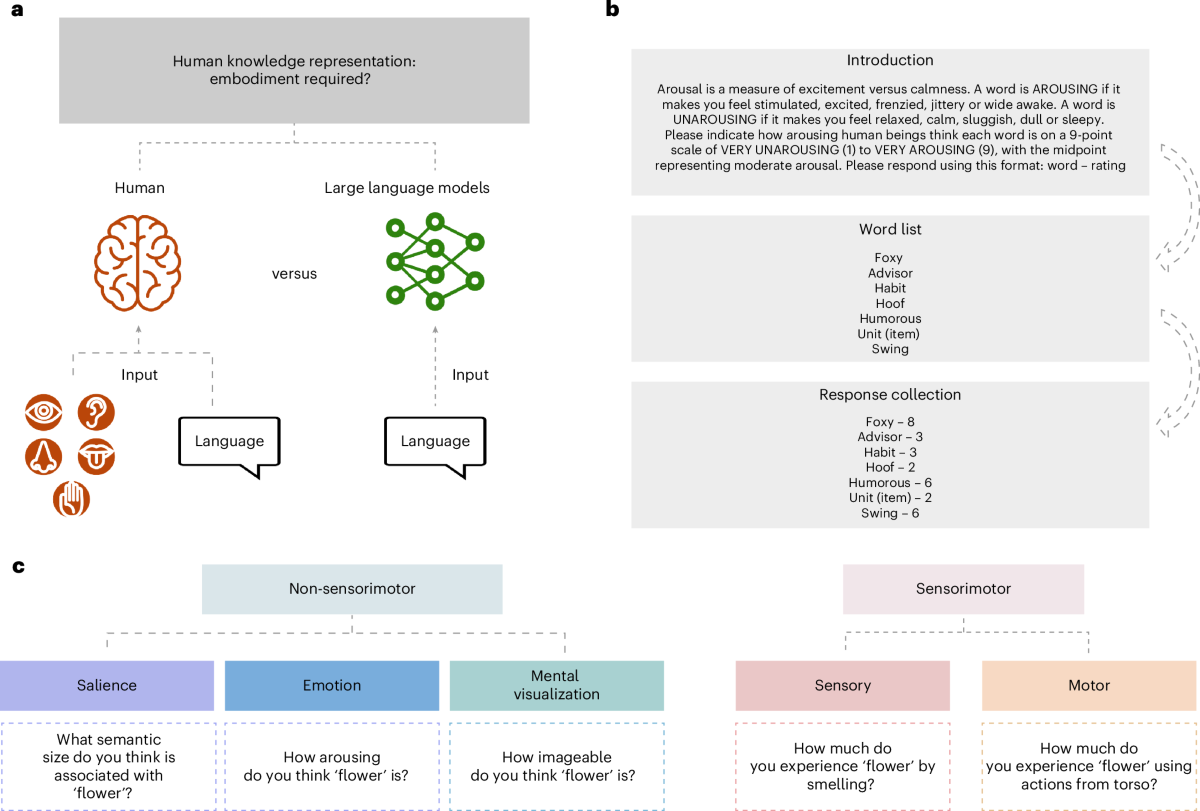

Both humans and LLMs were tested on two established sets of word ratings. The first, the Glasgow Norms, asks for ratings of words on nine dimensions, such as arousal, concreteness, and imageability.

The second, the Lancaster Norms, examines how strongly the concepts behind words relate to sensory information, such as touch, hearing, smell, and vision, and to motor information, meaning actions involving body parts such as the hands, arms, and torso.

Findings

The researchers measured how closely the LLMs’ ratings matched human ratings, and examined whether humans and LLMs agreed on how the words are interconnected. Overall, the LLMs’ ratings correlated well with human ones, but the models struggled with words tied to sensory experiences such as taste.

According to the researchers, the human representation of ‘flower’ binds diverse experiences and interactions into a coherent category, which AI struggles to capture because it lacks sensory and motor experience.

Future Implications

While LLMs currently fall short in capturing human sensory and motor experience, Xu expects continued development to narrow the gap. Combined with sensor data and robotics, future LLMs may be able to reason about and interact with the physical world more effectively.

The study was published in Nature Human Behaviour last week.