Google Gemini 2.5 Flash can think for as long as devs want it to ...

Google is rolling out Gemini 2.5 Flash to the Gemini app, allowing users to experiment with the company’s latest model. It is positioned as a smarter, lighter replacement for 2.0 Flash and will initially be available as a preview. At the same time, API users will pay less than they would for competing models as Google ramps up its rivalry with OpenAI and Anthropic.

Although Google’s Gemini (formerly Bard) got off to a slow start in 2023, it has since made a strong comeback, and by early-to-mid 2025 it is delivering impressive results on a relentless release schedule. New models appear in the Gemini app or in developer tools such as AI Studio almost weekly. The latest addition is Gemini 2.5 Flash, a faster and more efficient model that is still in preview.

Gemini App Selection Complexity

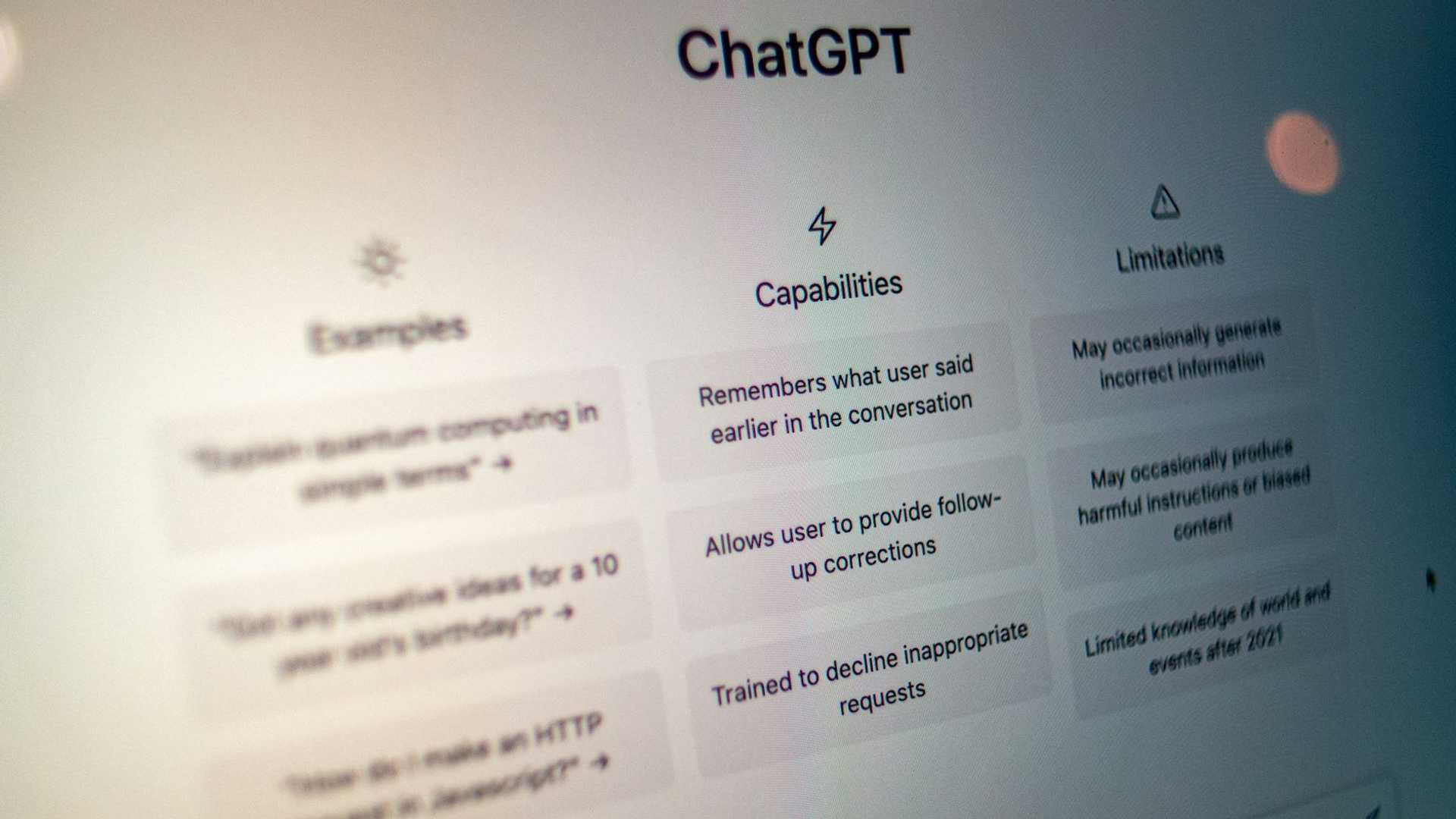

The drop-down menu for model selection in the Gemini app is becoming increasingly complex, a luxury problem that OpenAI’s ChatGPT also suffers from. Google releases so many preview models and new ways to use Gemini that it can be challenging to know which option is right for which task.

Tulsee Doshi, director of product management for Gemini at Google and leader of the team building these models, told Ars Technica that she prefers the more powerful option, Gemini 2.5 Pro. She says she finds this model particularly helpful for writing assistance.

The new Flash model is significantly smaller than Gemini 2.5 Pro and about the same size as 2.0 Flash, but should perform better. Doshi calls it a “major improvement” over 2.0 Flash. In any case, Gemini 2.5 Flash will not cause any additional app confusion, as it will be listed as 2.5 Flash (Experimental) in the app and on the website, replacing the 2.0 Thinking (Experimental) option.

Dynamic Reasoning in Gemini 2.5

Like all models in the 2.5 branch and beyond, Gemini 2.5 Flash has built-in simulated reasoning, which Google calls “thinking.” This means the model works through a problem and checks its own work as it generates, resulting in more accurate output. However, thinking also makes the models slower and significantly more expensive to run. Since not every question requires this level of continuous analysis, Google has given developers controls to tune Flash’s thinking to their specific use case.

The fact that the 2.0 Thinking model never made it past the experimental phase underscores how fast Google’s Gemini team is moving these days. The new model also thinks “dynamically,” adjusting how much it reasons to the prompt it receives.

Control and Customization for Developers

Back to 2.5 Flash: unlike the 2.0 Thinking model, the new model directly supports Google’s Canvas feature for working on text or code. According to a Google spokesperson, support for Deep Research with this model will follow later.

Gemini 2.5 Flash lets developers set a token limit for “thinking” (a thinking budget) or disable it entirely. Google has announced a price of $0.15 per million input tokens, while output pricing comes in two variants: $0.60 per million output tokens with thinking disabled, rising to $3.50 per million with thinking enabled.
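To make the gap between the two output rates concrete, here is a small cost estimator using the prices quoted above. It is a sketch, not Google’s billing logic: it assumes that `output_tokens` already includes any thinking tokens and that all output is billed at the thinking rate whenever thinking is enabled. The function name `estimate_cost` is hypothetical.

```python
# Gemini 2.5 Flash (preview) prices in USD per million tokens, as quoted above.
INPUT_PRICE_PER_M = 0.15
OUTPUT_PRICE_PER_M = {"no_thinking": 0.60, "thinking": 3.50}


def estimate_cost(input_tokens: int, output_tokens: int, thinking: bool) -> float:
    """Estimate the USD cost of a single request (hypothetical helper).

    Assumption: output_tokens includes any thinking tokens, and the whole
    output is billed at the thinking rate when thinking is enabled.
    """
    output_rate = OUTPUT_PRICE_PER_M["thinking" if thinking else "no_thinking"]
    return (input_tokens * INPUT_PRICE_PER_M + output_tokens * output_rate) / 1_000_000


if __name__ == "__main__":
    for mode in (False, True):
        cost = estimate_cost(10_000, 2_000, thinking=mode)
        print(f"thinking={mode}: ${cost:.4f}")
```

For a request with 10,000 input tokens and 2,000 output tokens, this works out to $0.0027 without thinking and $0.0085 with thinking, so on output-heavy workloads the choice of mode dominates the bill.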

Feedback and Development

With 2.5 Flash, Google is handing developers control over the “thinking” process. It is launching the model in preview to gather developer feedback on where the model meets expectations and where it under- or over-thinks, so that the Gemini team can continue to iterate on the dynamic thinking functionality.
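For developers, this control is set per request. The sketch below builds a request body for the Gemini API’s `generateContent` REST method using only the Python standard library. The field path `generationConfig.thinkingConfig.thinkingBudget`, the endpoint URL and the preview model ID are assumptions based on Google’s API documentation at launch and may change; `build_request` is a hypothetical helper, and the code only constructs the JSON without sending it.

```python
import json
from typing import Optional

# Assumed preview model ID and endpoint; verify against the current model list.
MODEL_ID = "gemini-2.5-flash-preview-04-17"
ENDPOINT = (
    "https://generativelanguage.googleapis.com/v1beta/models/"
    f"{MODEL_ID}:generateContent"
)


def build_request(prompt: str, thinking_budget: Optional[int] = None) -> dict:
    """Build a generateContent request body (hypothetical helper).

    thinking_budget=None -> omit thinkingConfig; the model decides how much to think
    thinking_budget=0    -> disable thinking
    thinking_budget>0    -> cap the number of thinking tokens
    (Field names are assumptions based on the Gemini API documentation.)
    """
    body: dict = {"contents": [{"role": "user", "parts": [{"text": prompt}]}]}
    if thinking_budget is not None:
        body["generationConfig"] = {
            "thinkingConfig": {"thinkingBudget": thinking_budget}
        }
    return body


if __name__ == "__main__":
    print(ENDPOINT)
    print(json.dumps(build_request("Summarize this paragraph.", thinking_budget=0), indent=2))
```

Leaving the budget unset keeps the dynamic behavior described above, while `0` and a positive value correspond to the “disable” and “token limit” options. Actually sending the request also requires an API key, which this sketch leaves out.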