Why Meta's AI Risk Policy Is a Game Changer for Artificial Intelligence Development

Meta's AI risk policy is setting new standards for responsible artificial intelligence development. The policy focuses on ethical innovation, transparency, and safety as AI capabilities continue to advance.

Frontier AI Framework

Meta has recently released its Frontier AI Framework, which serves as a guideline for the evaluation and mitigation of risks associated with advanced AI models. This framework outlines the criteria that Meta will use to classify AI systems based on their risk levels. It also specifies the corresponding actions that Meta will take, including halting development, restricting access, or refraining from releasing certain systems.

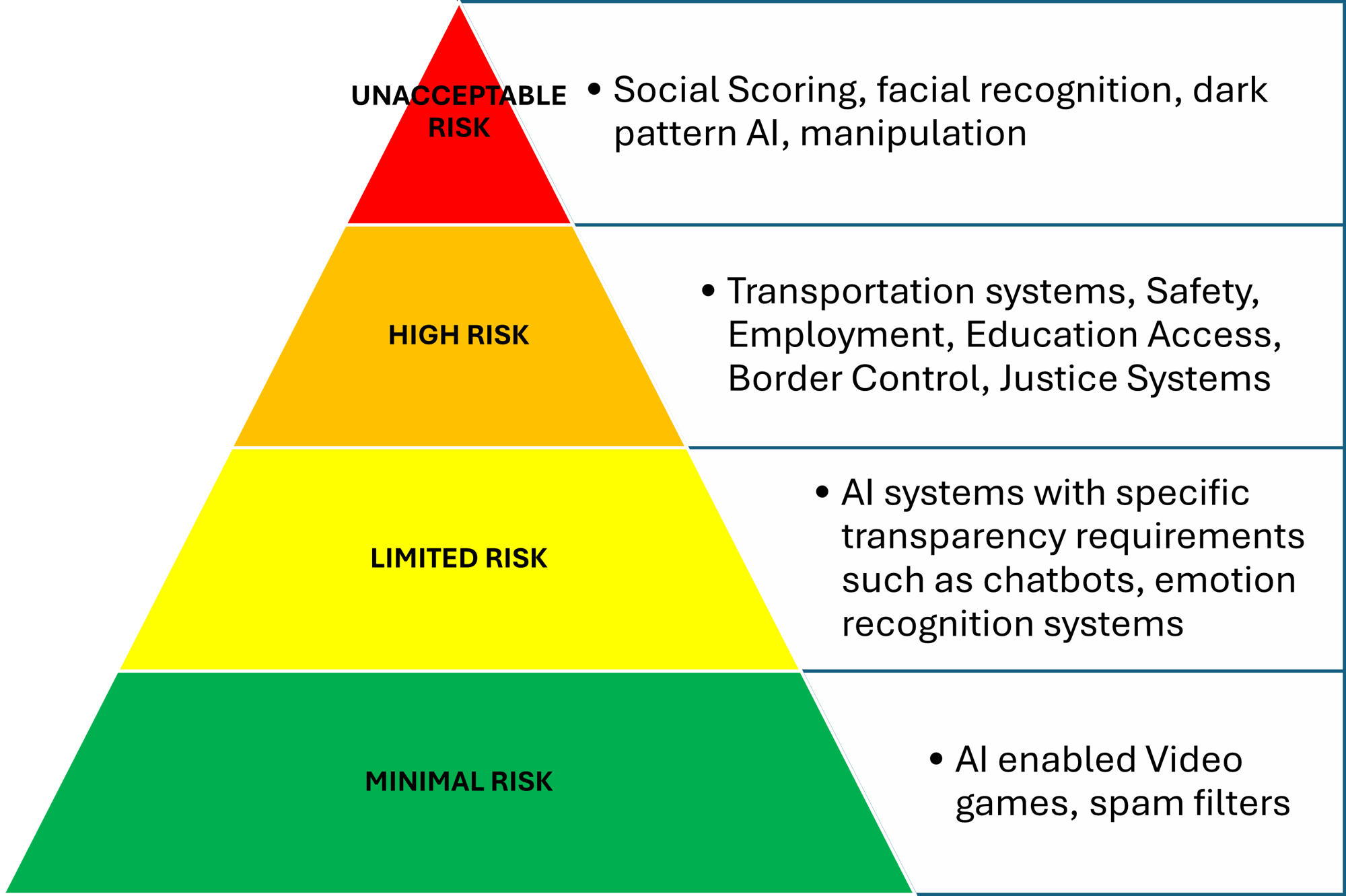

Risk Classification

AI models classified as "critical risk" are those that could uniquely enable the execution of a defined threat scenario. For such models, Meta would stop development, restrict access to a small group of experts, and put security measures in place to prevent hacking or data exfiltration.

High-risk AI models, on the other hand, are those that could significantly increase the likelihood of a threat scenario being carried out but would not by themselves enable its execution. For these models, Meta would restrict access and apply mitigations until the risk is reduced to moderate levels.
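To make the distinction between the two tiers concrete, here is a minimal Python sketch of the decision rule as this article describes it. It is an illustration only, assuming that each assessment can be reduced to two yes/no judgments; the names `RiskTier`, `classify`, and `required_actions` are hypothetical and do not come from Meta's framework, which relies on expert evaluation rather than code.

```python
from enum import Enum


class RiskTier(Enum):
    """Hypothetical labels for the risk tiers described in the article."""
    CRITICAL = "critical"
    HIGH = "high"
    MODERATE = "moderate"


# Actions paraphrased from the article; not Meta's actual procedures or code.
ACTIONS = {
    RiskTier.CRITICAL: [
        "stop development",
        "restrict access to a small group of experts",
        "add security measures against hacking and data exfiltration",
    ],
    RiskTier.HIGH: [
        "restrict internal access",
        "withhold public release until mitigations reduce risk to moderate",
    ],
    # The article names no tier-specific actions for moderate risk.
    RiskTier.MODERATE: [],
}


def classify(uniquely_enables_threat: bool, significant_uplift: bool) -> RiskTier:
    """Map two yes/no assessment outcomes (hypothetical inputs) to a tier.

    In practice these judgments come from expert evaluation, not booleans.
    """
    if uniquely_enables_threat:
        # The model alone could enable execution of a defined threat scenario.
        return RiskTier.CRITICAL
    if significant_uplift:
        # Raises the likelihood of a threat scenario but does not enable it.
        return RiskTier.HIGH
    return RiskTier.MODERATE


def required_actions(tier: RiskTier) -> list[str]:
    """Return the (paraphrased) actions associated with a tier."""
    return ACTIONS[tier]


if __name__ == "__main__":
    tier = classify(uniquely_enables_threat=False, significant_uplift=True)
    print(tier.value, required_actions(tier))
```

Calling `classify(False, True)` returns `RiskTier.HIGH`, whose actions are restricted internal access and a withheld release. The critical condition is checked first on purpose: a model that uniquely enables a threat scenario also provides uplift, so it must not fall through to the high-risk branch.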

Threat Scenarios

Meta's risk assessment considers a set of threat scenarios that its AI systems could help bring about. The list is not exhaustive, but it is meant to cover the most urgent and plausible catastrophic outcomes that could result from releasing a powerful AI system.

Evolving Risk Assessment

Meta's risk assessment process draws on internal and external experts from diverse disciplines, as well as company leaders. The company emphasizes that its approach to evaluating and mitigating risks will continue to evolve. Meta says it will work to make its evaluations more robust and reliable, so that results obtained in testing environments accurately reflect how the models perform in real-world use.

Implementation

For systems deemed high-risk, Meta will limit access internally and will not release them publicly until mitigations have reduced the risk to moderate levels. For critical-risk systems, Meta will halt development and put security measures in place to prevent potential misuse.

The policy applies specifically to Meta's most advanced models and systems, those that match or exceed the capabilities of today's most advanced models. By sharing its approach, Meta aims to promote transparency across the industry and to encourage discussion and research on improving AI evaluation and risk quantification.