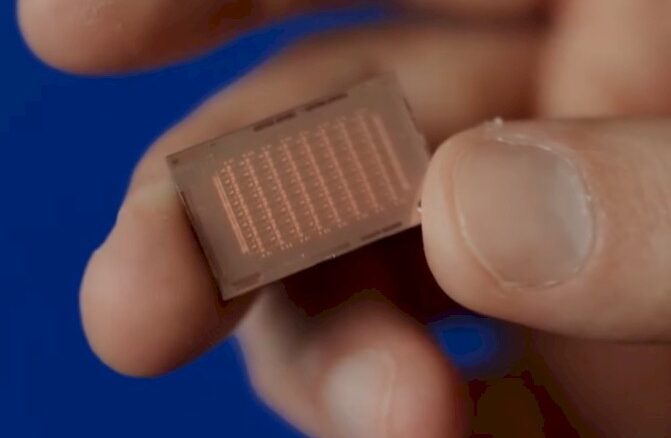

Meta's Custom AI Inference Chips (MTIA) for a Meta AI Personal Assistant

Meta CEO Mark Zuckerberg predicts that by 2025 an AI assistant will serve more than one billion users, and that Meta will be the company providing it. In 2023, Meta revealed that it was designing AI inference accelerators in-house specifically for its own AI workloads. These accelerators support the deep learning recommendation models that enhance experiences across Meta products.

On its recent earnings call, Meta introduced the Meta Training and Inference Accelerator (MTIA) family of custom chips designed for its AI workloads. These custom $AVGO MTIA chips are aimed at training workloads and ranking systems, with the goal of reducing dependence on NVIDIA GPUs. By deploying MTIA silicon where it is optimized for Meta's own workloads, the company expects to achieve cost efficiencies.

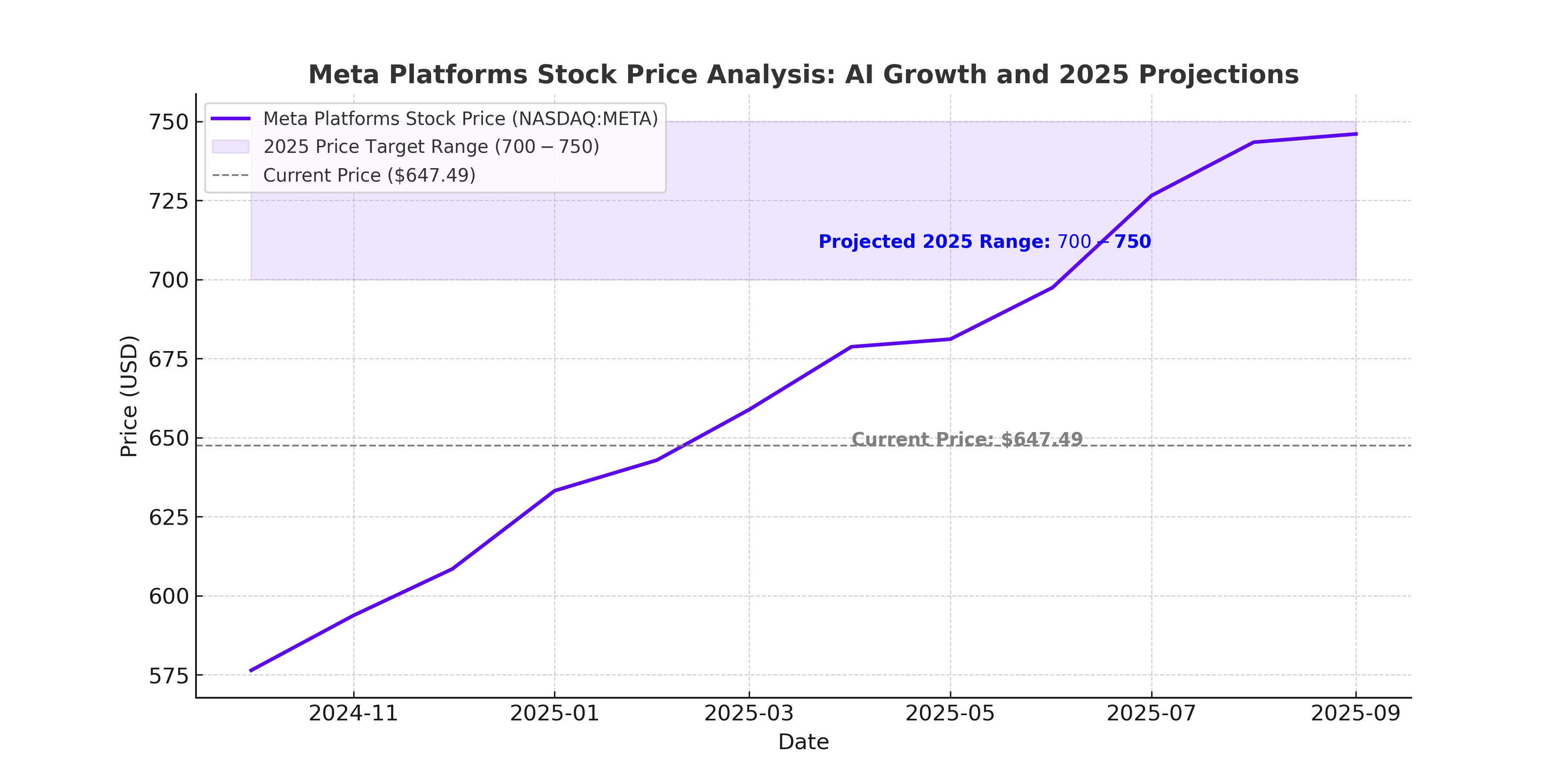

Meta's revenue has increased by 21% over the past year, reaching a record high of $48.4 billion. Net income has risen 49% year over year to a new high of $20.8 billion. Operating margin has expanded from 41% a year ago to 48%.
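As a quick sanity check on these figures, the growth rates can be inverted to back out the implied year-ago values. The sketch below uses only the numbers quoted above; the helper name `prior_value` is made up for illustration, and because the percentages are rounded the results are approximate.

```python
def prior_value(current: float, growth_pct: float) -> float:
    """Back out the year-ago value implied by a current value and its YoY growth."""
    return current / (1 + growth_pct / 100)


# Figures quoted in the text, in billions of dollars.
revenue_now = 48.4
net_income_now = 20.8

# Implied year-ago values (approximate, since the growth rates are rounded).
revenue_prior = prior_value(revenue_now, 21)
net_income_prior = prior_value(net_income_now, 49)

# Operating income implied by the quoted 48% operating margin.
operating_income_now = revenue_now * 0.48

print(f"Implied year-ago revenue:    ${revenue_prior:.1f}B")
print(f"Implied year-ago net income: ${net_income_prior:.1f}B")
print(f"Implied operating income:    ${operating_income_now:.1f}B")
```

On these numbers, revenue a year ago works out to roughly $40 billion and net income to roughly $14 billion, which shows that profit grew much faster than revenue over the period.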

AI Driving Revenue Growth

AI has been a key driver of revenue growth for Meta. The number of advertisers using its generative AI tools has grown to 4 million, up from 1 million six months ago. Meta is pairing these AI investments with a deliberate approach to improving margins.

Meta plans to focus on AI monetization once it reaches billion-user scale. Its strategic shift includes developing custom $AVGO MTIA chips for training workloads and ranking systems, with the aim of reducing reliance on NVIDIA GPUs. This shift has already affected NVIDIA's stock price, as Meta’s in-house silicon poses a threat to NVIDIA’s AI dominance.

Meta's long-term strategy involves improving margins through AI advancements, with a focus on scaling AI products before monetization. The development of custom AI inference chips such as MTIA is a crucial step towards achieving this goal.

Success in AI depends heavily on infrastructure investment and capital expenditure (CapEx) to deliver quality products at scale. Meta plans to continue investing, with $60-65 billion in CapEx and a major focus on the AI infrastructure needed to support its growing AI capabilities.

Pipelined custom-silicon implementations of AI models, such as language models, are expected to be faster and far more efficient than running the same models on general-purpose GPUs. This approach allows AI workloads to be processed efficiently and scaled more economically.
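To see why pipelining helps throughput, consider a simplified textbook model rather than anything specific to MTIA. Assume a model is split into `stages` steps that each take the same `stage_time`, with no stalls or communication overhead; the function names and numbers below are illustrative assumptions. GPUs also overlap work in practice, so this compares pipelined against strictly sequential execution, not against any real GPU.

```python
def sequential_time(items: int, stages: int, stage_time: float) -> float:
    """Total time when each item passes through every stage before the next item starts."""
    return items * stages * stage_time


def pipelined_time(items: int, stages: int, stage_time: float) -> float:
    """Total time for an ideal pipeline: fill it once, then finish one item per stage_time."""
    if items == 0:
        return 0.0
    return (stages + items - 1) * stage_time


# Illustrative numbers only: 1000 requests through an 8-stage pipeline, 1 time unit per stage.
items, stages, stage_time = 1000, 8, 1.0

seq = sequential_time(items, stages, stage_time)
pipe = pipelined_time(items, stages, stage_time)

print(f"Sequential: {seq:.0f} time units")
print(f"Pipelined:  {pipe:.0f} time units")
print(f"Speedup:    {seq / pipe:.2f}x")
```

In this idealized model the speedup approaches the number of stages as the number of items grows, which is the basic argument for pipelined hardware at large request volumes. Real gains depend on how evenly the work divides across stages.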