Roadmap: Data 3.0 in the Lakehouse Era

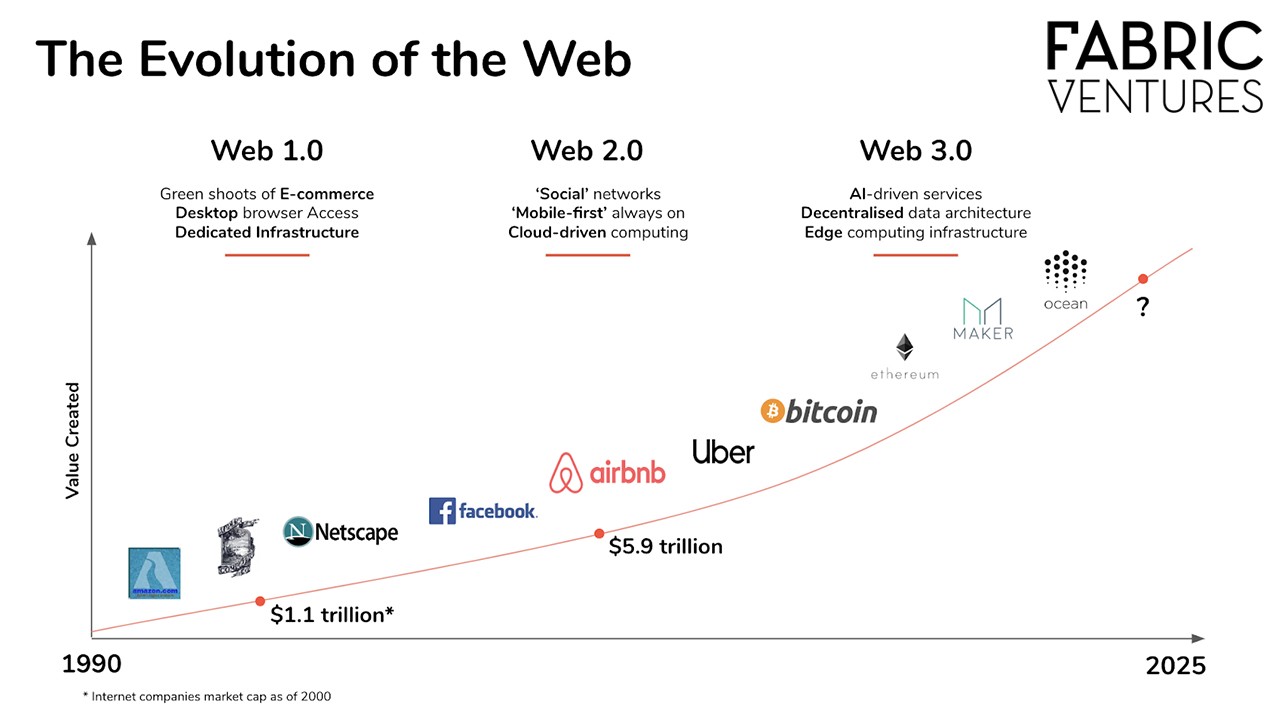

Data infrastructure plays a crucial role in enabling new products and businesses while adapting to the demands of technological innovations. We have witnessed a significant evolution in data warehouses over the past five decades, transitioning from traditional on-premise solutions to cloud-native architectures and data lakes. Today, we stand at a pivotal moment as we move beyond the modern data stack towards a Data 3.0 era, driven chiefly by AI workloads and the rise of the data lakehouse.

The Lead Up to the Lakehouse Revolution

One key driver of this transformation is the proliferation of AI, which has reshaped the infrastructure built to support it. Alongside this shift, enterprise data infrastructure is undergoing a major change of its own with the emergence of the data lakehouse, an architectural paradigm that combines the strengths of data warehouses and data lakes to support use cases from analytics to AI workloads on a unified platform.

Enterprise Data Architecture: A Call and Response to Innovation

The data lakehouse represents more than incremental progress; it is an architectural overhaul that promises far greater interoperability and sets the stage for the emergence of new data infrastructure giants. Investment in data infrastructure has surged in recent years, reflecting the industry's recognition that more advanced architectures are needed to keep pace with the evolving AI landscape.

Modern Open Table Formats Unlocking the Full Potential of the Lakehouse

Open table formats like Delta Lake, Iceberg, and Hudi have played a pivotal role in unleashing the power of the lakehouse paradigm. These formats abstract underlying files into a unified "table" accessible through APIs, enabling enhanced capabilities and driving innovation within the industry.

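In particular, Iceberg had a remarkable year in 2024, culminating in Databricks' acquisition of Tabular, the company founded by Iceberg's original creators. Iceberg itself remains an open-source Apache project, and the deal underscores the growing significance of open table formats in reshaping the data infrastructure landscape.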
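To make the idea of abstracting files into a "table" more concrete, here is a minimal sketch in Python. It is a toy model, not the actual metadata layout or API of Delta Lake, Iceberg, or Hudi: the names `Snapshot`, `ToyTable`, `append_files`, and `scan` are hypothetical, and real formats also track schemas, partitions, statistics, and deletes. The sketch only assumes that a table can be modeled as an append-only log of snapshots, each listing the data files visible at that point.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Snapshot:
    """One immutable version of the table: the set of files readers should see."""
    snapshot_id: int
    data_files: Tuple[str, ...]


@dataclass
class ToyTable:
    """Hypothetical, heavily simplified table format: a log of snapshots."""
    snapshots: List[Snapshot] = field(default_factory=lambda: [Snapshot(0, ())])

    def current(self) -> Snapshot:
        # The latest snapshot is the table's current state.
        return self.snapshots[-1]

    def append_files(self, new_files: List[str]) -> Snapshot:
        # A commit never mutates old snapshots; it adds a new one on top.
        prev = self.current()
        snap = Snapshot(prev.snapshot_id + 1, prev.data_files + tuple(new_files))
        self.snapshots.append(snap)
        return snap

    def scan(self, snapshot_id: Optional[int] = None) -> Tuple[str, ...]:
        # Readers ask the table which files to read, optionally "as of" an
        # older snapshot, instead of listing a storage directory themselves.
        if snapshot_id is None:
            return self.current().data_files
        for snap in self.snapshots:
            if snap.snapshot_id == snapshot_id:
                return snap.data_files
        raise KeyError(f"unknown snapshot {snapshot_id}")
```

In this sketch, two commits that add `a.parquet` and then `b.parquet` leave snapshot 1 unchanged, so a reader pinned to it still sees only the first file. That separation between the files in storage and the table's view of them is what lets multiple engines share one copy of the data.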

At the Tip of the “Iceberg” for Innovation in this New Architectural Paradigm

The evolution from Data 1.0 to Data 2.0 highlighted the limitations of existing architectural paradigms in meeting the demands of AI applications and workloads. This realization catalyzed the search for a successor that could seamlessly integrate the strengths of data warehouses and data lakes while addressing their shortcomings.

We're at the Precipice of Data 3.0 in the Lakehouse Era

This quest for a new architectural standard led to the inception of the data lakehouse, a transformative concept that redefines data infrastructure operations by combining the robustness of data warehouses with the flexibility of data lakes. The resultant interoperability opens up a new realm of possibilities for AI-driven applications, real-time analytics, and enterprise intelligence.

A Glimpse into the Future of Data 3.0

As the industry embraces the lakehouse paradigm, there is a growing opportunity for visionary founders to pioneer innovative solutions within this evolving ecosystem. Founders who combine technical innovation with strategic market positioning could build the infrastructure platforms that shape the future of data processing and analytics in the Data 3.0 landscape.

The Shift Towards Data 3.0 Ecosystem

Data pipelines, ETL tools, and orchestrators are undergoing a fundamental transformation in the Data 3.0 era to meet the evolving needs of user-facing production use cases. New products like Prefect, Windmill, and dltHub are challenging traditional approaches with code-native solutions that enhance pipeline orchestration, monitoring, and scalability, catering to the demands of AI-driven workflows.
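As a rough illustration of what "code-native" means here, the sketch below expresses a small extract-transform-load pipeline as ordinary Python functions, with retry behavior attached by a decorator. It does not use the API of Prefect, Windmill, or dltHub; the `task` decorator, the sample rows, and `run_pipeline` are all hypothetical stand-ins chosen for this example.

```python
import functools
import time
from typing import Callable, Dict, List


def task(retries: int = 0, delay_s: float = 0.0) -> Callable:
    """Hypothetical decorator: rerun a failing step up to `retries` extra times."""
    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            attempt = 0
            while True:
                try:
                    return fn(*args, **kwargs)
                except Exception:
                    if attempt >= retries:
                        raise  # out of retries, surface the failure
                    attempt += 1
                    time.sleep(delay_s)
        return wrapper
    return decorator


@task(retries=2)
def extract() -> List[Dict[str, str]]:
    # Stand-in for reading from an API or database (sample data is made up).
    return [
        {"user": "a", "amount": "10"},
        {"user": "b", "amount": "5"},
        {"user": "a", "amount": "7"},
    ]


@task()
def transform(rows: List[Dict[str, str]]) -> Dict[str, int]:
    # Aggregate amounts per user.
    totals: Dict[str, int] = {}
    for row in rows:
        totals[row["user"]] = totals.get(row["user"], 0) + int(row["amount"])
    return totals


@task()
def load(totals: Dict[str, int], sink: Dict[str, int]) -> int:
    # Stand-in for writing to a warehouse or lakehouse table.
    sink.update(totals)
    return len(totals)


def run_pipeline(sink: Dict[str, int]) -> int:
    # The pipeline is plain function composition, so it can be unit tested,
    # code reviewed, and versioned like any other application code.
    return load(transform(extract()), sink)
```

Because each step is a normal function, concerns such as retries, logging, or scheduling can be layered on without changing the pipeline logic, which is the design choice this style of tool tends to emphasize.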

Furthermore, innovations such as Anthropic's Model Context Protocol, an open standard for connecting AI applications to external tools and data sources, are redefining how AI systems interact within the Data 3.0 ecosystem, paving the way for context-aware AI applications and automated workflows.

As the industry progresses towards real-time data processing, technologies like Apache Kafka and Apache Flink are playing a critical role in enabling complex ML pipelines and continuous model training. Stream processing patterns optimized for model serving and continuous learning pipelines are expected to emerge, driving more accurate predictions and recommendations in the AI landscape.
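The continuous-learning pattern mentioned above can be sketched without any streaming infrastructure. In the example below, a Python generator stands in for a stream such as a Kafka topic, and a tiny linear model is updated one event at a time with stochastic gradient descent. The names `event_stream`, `OnlineLinearModel`, and `train_on_stream` are hypothetical, the data is synthetic (generated from y = 2x + 1), and nothing here reflects the Kafka or Flink APIs.

```python
from typing import Iterable, Iterator, Tuple


def event_stream(n_events: int = 2000) -> Iterator[Tuple[float, float]]:
    """Synthetic stand-in for a message stream: yields (feature, label) pairs."""
    for i in range(n_events):
        x = (i % 10) / 10.0
        yield x, 2.0 * x + 1.0  # assumed ground truth for this toy example


class OnlineLinearModel:
    """Linear model y = w * x + b, updated one event at a time."""

    def __init__(self, lr: float = 0.5) -> None:
        self.w = 0.0
        self.b = 0.0
        self.lr = lr

    def predict(self, x: float) -> float:
        return self.w * x + self.b

    def update(self, x: float, y: float) -> float:
        # One SGD step on squared error for a single event.
        err = self.predict(x) - y
        self.w -= self.lr * err * x
        self.b -= self.lr * err
        return err


def train_on_stream(model: OnlineLinearModel,
                    events: Iterable[Tuple[float, float]]) -> int:
    # The model is usable for serving at any point while events keep arriving;
    # there is no separate batch retraining job in this pattern.
    seen = 0
    for x, y in events:
        model.update(x, y)
        seen += 1
    return seen
```

After consuming the synthetic stream, the learned `w` and `b` should move close to the generating values of 2 and 1. In a production setting the generator would be replaced by a consumer reading from the stream, and the update step would typically be guarded by validation and model versioning.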