Can a machine outdream us? Google Gemini's bold leap into...

Decades ago, I read a book that felt like a dispatch from the future: The Age of Intelligent Machines, in which a thinker named Ray Kurzweil imagined a world of computers not just crunching numbers but reasoning, creating, and dreaming like us. In its pages, written through the late 1980s, he saw machines weaving patterns of thought, turning silicon into poets and problem-solvers.

That vision, born in an era of clunky computers and carried forward by Kurzweil and others at Google since 2012, wasn't just a prediction; it was a question about what intelligence could mean. I recall those questions now and marvel at how Kurzweil's ideas ripple into April 2025. I'm watching video streams from Google Cloud Next, where Google showcases Gemini 2.5: a system that writes novels, codes games, summarizes reports in a Google Doc, and turns notes into podcasts.

The Paradox of Google Gemini

Yet, as demos dazzle the crowd, a deeper question lingers: what does it mean when machines don't just mimic us but reshape how we work, think, and create? Gemini, Google's family of multimodal AI models, is no ordinary technology. It's a puzzle, even a paradox. It promises to simplify our lives while complicating our relationship with intelligence itself.

The Rise of Gemini 2.5 Pro

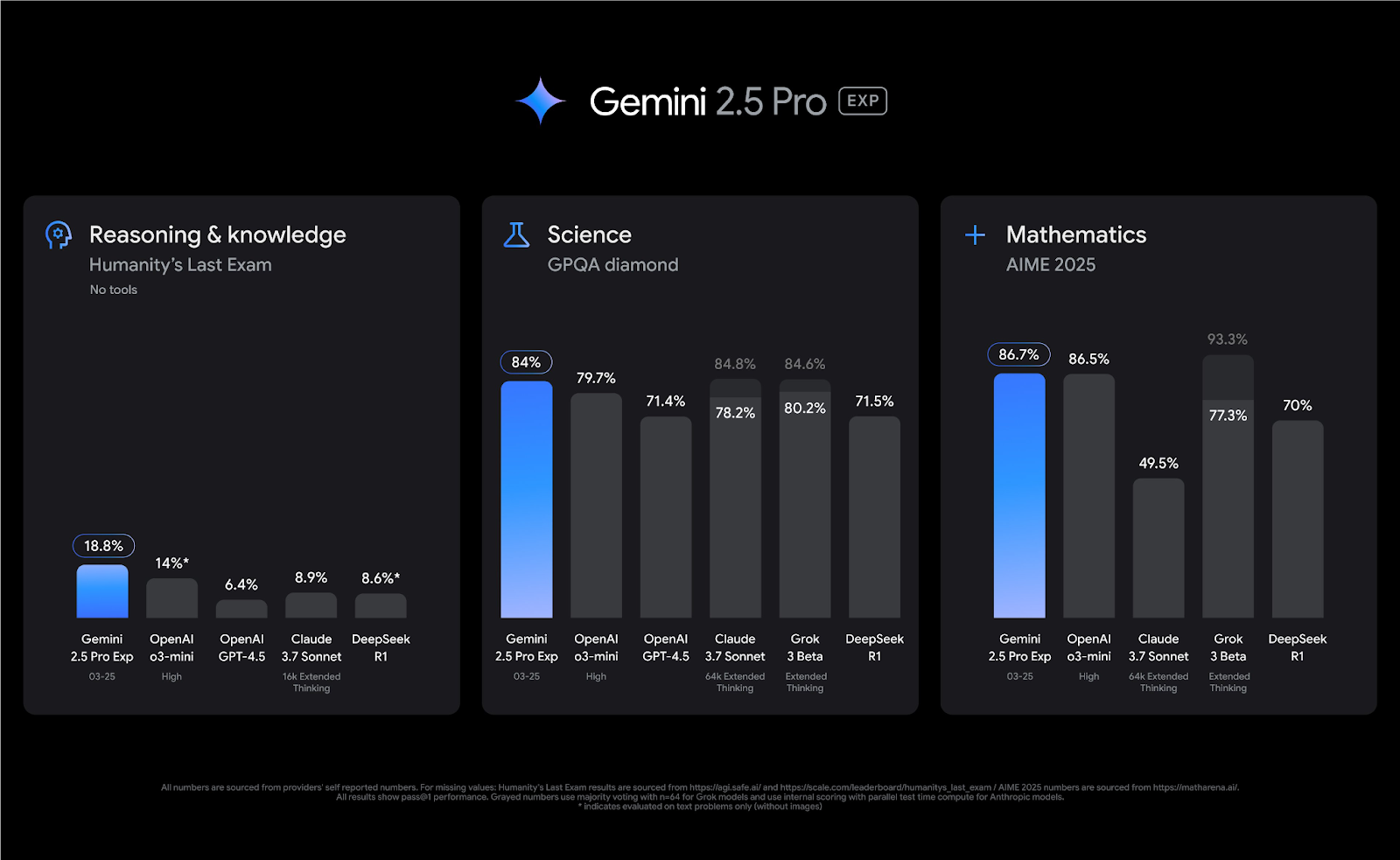

At Cloud Next 2025, Google showcased Gemini 2.5 Pro and teased Gemini 2.5 Flash. It also demonstrated features that let you export outputs to Google Docs with a click or generate podcast-like Audio Overviews from a single file. These aren't just tools; they're invitations to rethink what collaboration and creativity mean in an AI-driven world.

The Intersection of AI and Humanity

This isn’t just tech—it’s choreography. Google is orchestrating a world where AI doesn’t replace humans but dances alongside them, amplifying their strengths.

Google's Audio Overview Feature

Now, imagine flipping through a 146-page camera manual, your eyes glazing over. Instead, you upload it to gemini.google.com, hit "Generate Audio Overview," and five minutes later you're listening to two AI hosts, a witty duo reminiscent of NPR presenters, discussing the manual's highlights as if they were the plot of a thriller. This is Gemini's Audio Overview, born from NotebookLM's viral success and now a core feature.

Google's Vision for the Future

But here's where the story grows bigger, almost mythic. During the conference, Alphabet CEO Sundar Pichai laid out a vision that felt less like a business plan and more like a wager on the future. Google, he announced, is pouring $75 billion into capital expenditures in 2025, most of it to expand data centers and AI infrastructure. This isn't just about keeping Gmail or Photos running; it's about fueling models like Gemini 2.5 with the computational muscle to think faster, deeper, and wider.

Google's AI Integration into Business

Google didn't stop at models. At Cloud Next, it unveiled tools to weave Gemini into the fabric of business, a choreography of code and ambition. Vertex AI now offers supervised tuning and context caching. Supervised tuning lets companies mold Gemini for tasks like spotting fraud or drafting contracts. Context caching reuses a large, repeated prompt context across requests instead of paying full price for it every time, which cuts costs.
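To make "supervised tuning" less abstract, here is a minimal sketch of what a training file for the fraud-spotting case might look like. It assumes the JSONL layout Google documents for Gemini supervised tuning on Vertex AI. In that layout, each line is one JSON object whose `contents` turns are tagged `user` or `model`, with an optional `systemInstruction`. The transactions, labels, system prompt, and helper names below are hypothetical, so check the current Vertex AI docs before uploading anything.

```python
import json

# Hypothetical labeled examples for a fraud-spotting task (illustrative, not real data).
EXAMPLES = [
    ("Card used in three countries within one hour after five declined attempts.", "FRAUD"),
    ("Monthly utility bill, same payee and amount as the previous twelve months.", "LEGITIMATE"),
]

# Hypothetical instruction shared by every training record.
SYSTEM_PROMPT = "Classify the transaction as FRAUD or LEGITIMATE."


def to_tuning_record(prompt: str, label: str) -> dict:
    """Build one record in the role/parts layout assumed for Gemini supervised tuning."""
    return {
        "systemInstruction": {"role": "system", "parts": [{"text": SYSTEM_PROMPT}]},
        "contents": [
            {"role": "user", "parts": [{"text": prompt}]},   # the input the model sees
            {"role": "model", "parts": [{"text": label}]},   # the answer it should learn
        ],
    }


def to_jsonl(examples) -> str:
    """Serialize records as JSONL: one compact JSON object per line."""
    return "".join(json.dumps(to_tuning_record(prompt, label)) + "\n"
                   for prompt, label in examples)


if __name__ == "__main__":
    # Print the JSONL; typically you would save it and upload it to Cloud Storage.
    print(to_jsonl(EXAMPLES), end="")
```

Keeping each example on its own line means a dataset can be appended to or split without breaking the format. A one-word label keeps the tuned model's answers easy to parse downstream.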