AI Goes From Guesswork to PhD-Level Science in Just 2 Years

Recent advances in artificial intelligence (AI) have been striking. According to Liam Carroll, a researcher at the Gradient Institute, AI models have progressed from random guessing to expert-level performance on PhD-level science questions in under two years. At a recent online event focused on AI, Carroll highlighted the development of frontier models, which are evolving at an unexpectedly rapid pace.

PhD-Level Challenges

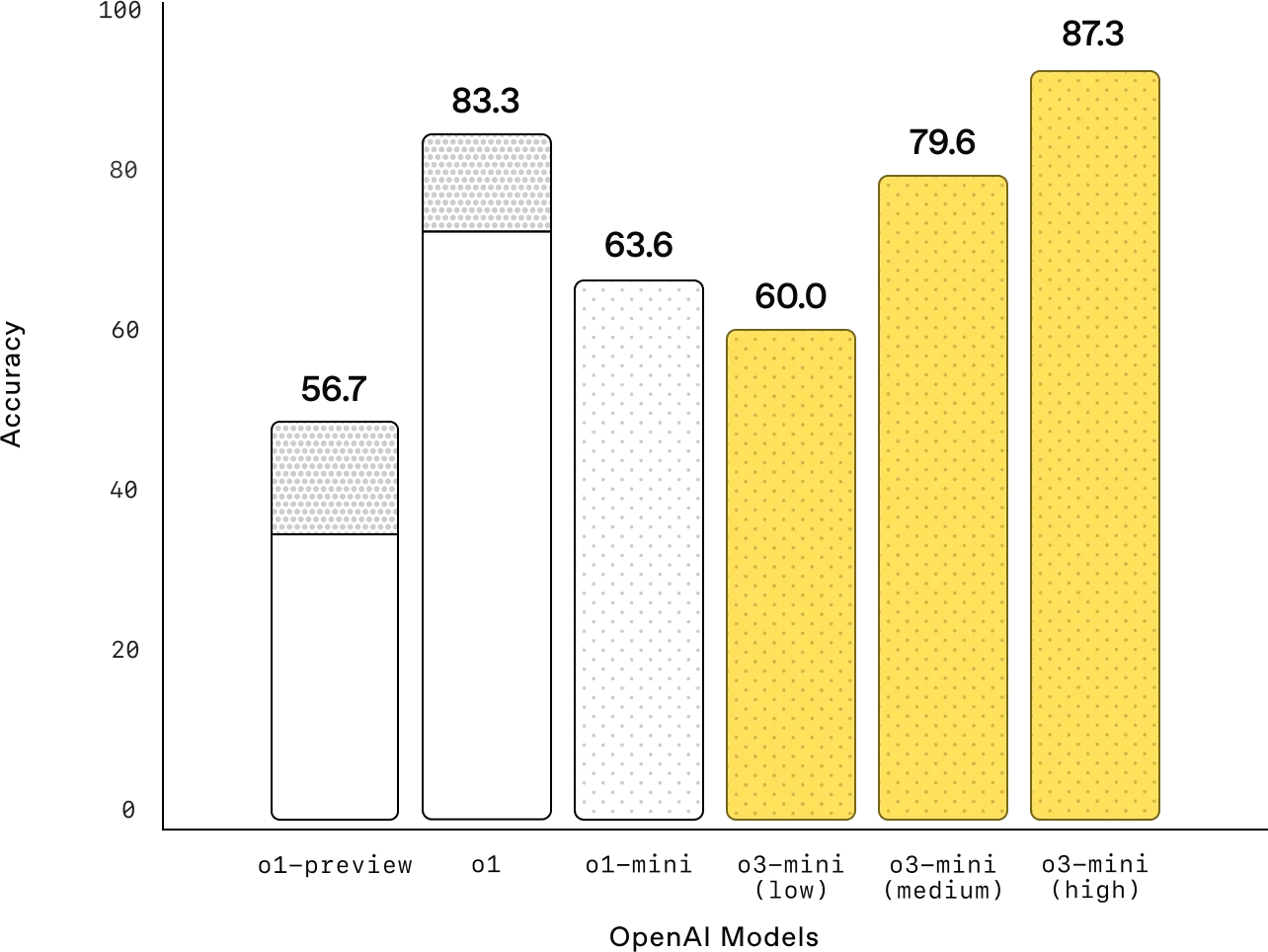

Carroll cited data from the AI research institute Epoch AI, which tested AI models on PhD-level science questions from the GPQA (Graduate-Level Google-Proof Q&A) dataset. The question set consisted of 198 challenging multiple-choice questions spanning fields such as molecular biology, astrophysics, and quantum mechanics. The questions, written by experts who hold or are pursuing PhDs, are intentionally crafted to be difficult for non-experts to answer.

At the start of the period, AI models scored about 25 percent on the benchmark, equivalent to random guessing. For reference, a trial involving PhD experts produced a score of 69.7 percent. Over the 21 months from July 2023 to April 2025, the models improved steadily until they were answering at an expert level. In the final three months of that period, from January to April, several AI models surpassed the 70 percent threshold, with Google's Gemini 2.5 Pro exceeding 80 percent.
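To put these percentages in concrete terms, the short Python sketch below converts them into approximate question counts on the 198-question set. It assumes four answer options per question, which is inferred from the 25 percent chance-level figure rather than stated by Carroll; the function names are illustrative only.

```python
# Rough arithmetic on the figures quoted in the article.
NUM_QUESTIONS = 198   # size of the question set cited in the article
NUM_OPTIONS = 4       # assumption: inferred from the 25 percent chance level


def chance_accuracy(num_options: int) -> float:
    """Expected accuracy when picking one option uniformly at random."""
    return 1.0 / num_options


def approx_correct(accuracy: float, num_questions: int = NUM_QUESTIONS) -> int:
    """Approximate number of correct answers for a given accuracy."""
    return round(accuracy * num_questions)


if __name__ == "__main__":
    scores = {
        "random guessing": chance_accuracy(NUM_OPTIONS),
        "PhD expert trial": 0.697,
        "80 percent mark": 0.80,
    }
    for label, accuracy in scores.items():
        count = approx_correct(accuracy)
        print(f"{label}: {accuracy:.1%} is about {count} of {NUM_QUESTIONS}")
```

On these assumptions, the gap between chance and the expert trial is roughly 90 questions out of 198, which gives a sense of how far the models moved over the 21 months.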

Advancements Beyond Memorization

Carroll emphasized that these AI models are not merely parroting information without comprehension. He noted that they are capable of learning generalizable patterns about the world, as evidenced by interactions with advanced models like Claude 3.7 and ChatGPT o1. Carroll pointed out that while current AI may give the impression of being limited to chatbots, the future promises autonomous AI agents capable of operating computers independently. Notable examples include OpenAI's Operator and Monica's Manus, both of which can write and execute code in a computer terminal.

Managing AI Risks

While the rapid evolution of AI technology holds immense potential for the economy, Carroll cautioned that precautions must be taken. Stressing the importance of ensuring the safety and reliability of AI systems, he referenced the AI Risk Repository project. This project, which he has been involved in, had catalogued over 1,600 AI risks as of March 2025, grouping them into 24 distinct categories. These risks range from common concerns like exposure to harmful content and misinformation to more severe issues such as weapon development and threats to human agency.

As AI continues to progress at an unprecedented rate, the need for responsible and careful management of these technologies becomes increasingly evident. While the benefits of AI advancements are vast, it is crucial to address and mitigate the associated risks to ensure a safe and prosperous future.