Enhanced Voice Features: Meta's Llama 4 Set to Revolutionize AI Interactions - CoinStats

The buzz in the AI world is getting louder, and it’s all thanks to whispers about Meta’s next-generation Llama models. If the rumors are true, we could be on the verge of a significant leap in how we interact with artificial intelligence, particularly through voice. Let’s dive into what makes this news so exciting and what it could mean for the future of open AI models and beyond.

Upgraded Voice Features with Llama 4

According to a recent report in the Financial Times, Meta is gearing up to launch Llama 4, the latest iteration of its flagship Llama model family, and it’s expected to arrive within weeks. The highlight? Significantly upgraded voice features. This isn’t just a minor tweak; reports suggest a major focus on creating a more natural and intuitive voice interaction experience. Imagine being able to converse with an AI model as smoothly as you would with another person. That’s the direction Meta seems to be heading.

You might be wondering: why all the fuss about voice? Consider this: Meta’s push into enhanced voice features with Llama 4 isn’t happening in a vacuum. It’s a clear signal that Meta is directly challenging the dominance of OpenAI’s ChatGPT and Google’s Gemini in the AI assistant space. Both OpenAI and Google have already showcased impressive voice capabilities, with features like ChatGPT’s Voice Mode and Gemini Live. Meta is not just catching up; it seems to be aiming to leapfrog the competition by focusing on key improvements like interruptibility, the ability to interrupt the AI mid-speech, which makes conversations feel much more fluid and natural.

Competition Driving Innovation at Meta

What’s driving this rapid innovation at Meta? Competition, plain and simple. The emergence of AI models from the Chinese AI lab DeepSeek has been a significant catalyst. DeepSeek’s models are reportedly performing on par with, or even better than, Meta’s Llama models. This has injected a sense of urgency and spurred Meta to accelerate its development efforts. The race is on to create not only the most powerful but also the most efficient and cost-effective AI models.

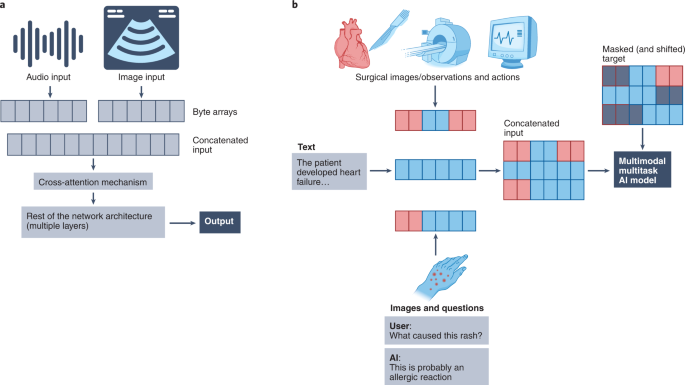

Meta’s chief product officer, Chris Cox, revealed at a Morgan Stanley conference this week that Llama 4 will be an “omni” model. But what does “omni” mean in this context? It signifies a model capable of natively understanding and generating not just text but also speech, and potentially other data types. This is a significant step toward truly multimodal AI models that can process and interact with the world in a more holistic way.

Voice Features: A Crucial Battleground

Meta’s focus on voice features in Llama 4 is a clear indication that voice is becoming a crucial battleground in the AI race. As these models become more sophisticated and voice interactions become more seamless, we can anticipate a wave of new applications and use cases across various industries. From customer service and education to entertainment and personal productivity, the potential is immense.

The development of robust speech recognition and voice synthesis technologies is not just about making AI easier to use; it’s about unlocking new dimensions of interaction and creating a future where AI is truly integrated into the fabric of our daily lives.

Conclusion

The prospect of Meta’s Llama 4 arriving with upgraded voice features is exciting for the AI community and beyond. It signals a significant step forward in the evolution of open AI models and promises to make AI interactions more natural, intuitive, and powerful. Keep an eye out for Llama 4’s launch in the coming weeks; it could very well redefine how we think about and interact with artificial intelligence.