Ilya Sutskever, former chief scientist at OpenAI, launches rival AI firm ...

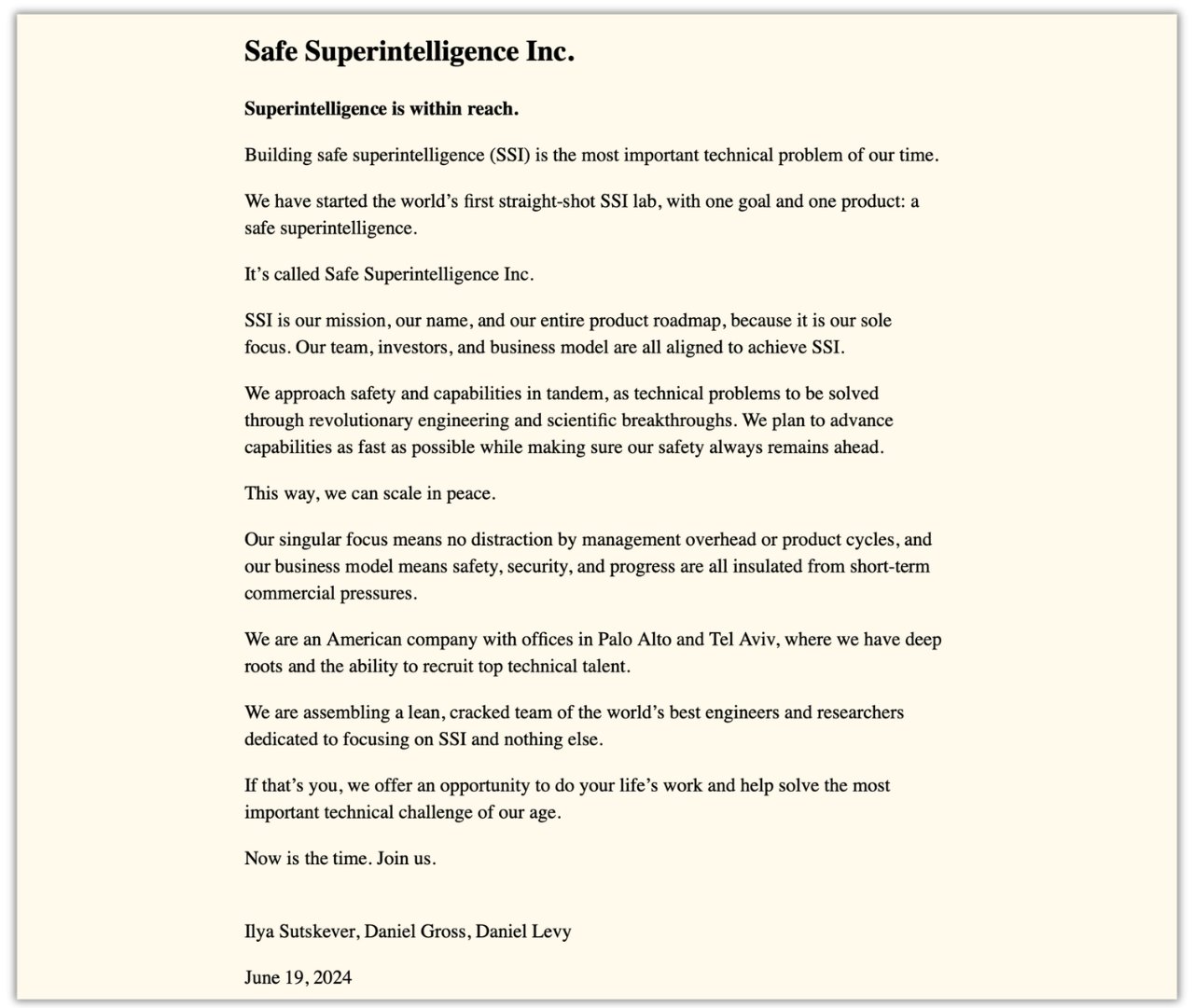

Ilya Sutskever, together with Daniel Gross and Daniel Levy, has announced the launch of a new AI startup named Safe Superintelligence Inc. The startup's primary focus is to pave the way for the creation of safe superintelligence, or artificial general intelligence (AGI). Sutskever was previously a co-founder and chief scientist at OpenAI, a prominent AI research lab.

Background and Mission

Safe Superintelligence Inc. is not just the name of the company; it is also the company's mission and product roadmap. The team, business model, and investors are all aligned toward the goal of safe superintelligence. The company aims to develop technological capabilities and the necessary guardrails in tandem while maintaining a rapid pace of innovation.

Company Structure and Focus

Unlike some players in the industry, Safe Superintelligence Inc. is structured to avoid distractions from management overhead or short-term commercial pressures. Its business model prioritizes safety, security, and technological progress, so that these are not compromised for immediate gains.

Geographical Presence and Team

Safe Superintelligence Inc. is an American company with offices in Palo Alto, California, and Tel Aviv, Israel. The company is building a team of top-tier scientists dedicated to the ambitious goal of realizing safe superintelligence.

Industry Dynamics

The AI industry is witnessing a divide between two opposing ideologies: caution and innovation. This divide was highlighted during a past incident at OpenAI. On one side is a group that believes in the Silicon Valley ethos of rapid progress, often referred to as Effective Accelerationism. On the other side are proponents of Effective Altruism, who prioritize safety and meticulous testing of AI technologies before widespread deployment. Both camps share the common goal of harnessing AI for the benefit of humanity.