NextGen: AI – OpenAI, others facing delays with training new LLMs

AI giants including OpenAI, Google, and Anthropic are struggling to build more advanced AI as their current methods reach their limits. According to Bloomberg and Reuters, these companies have faced delays and disappointing results in their efforts to develop LLMs that surpass existing models such as GPT-4.

The Challenges Faced by AI Companies

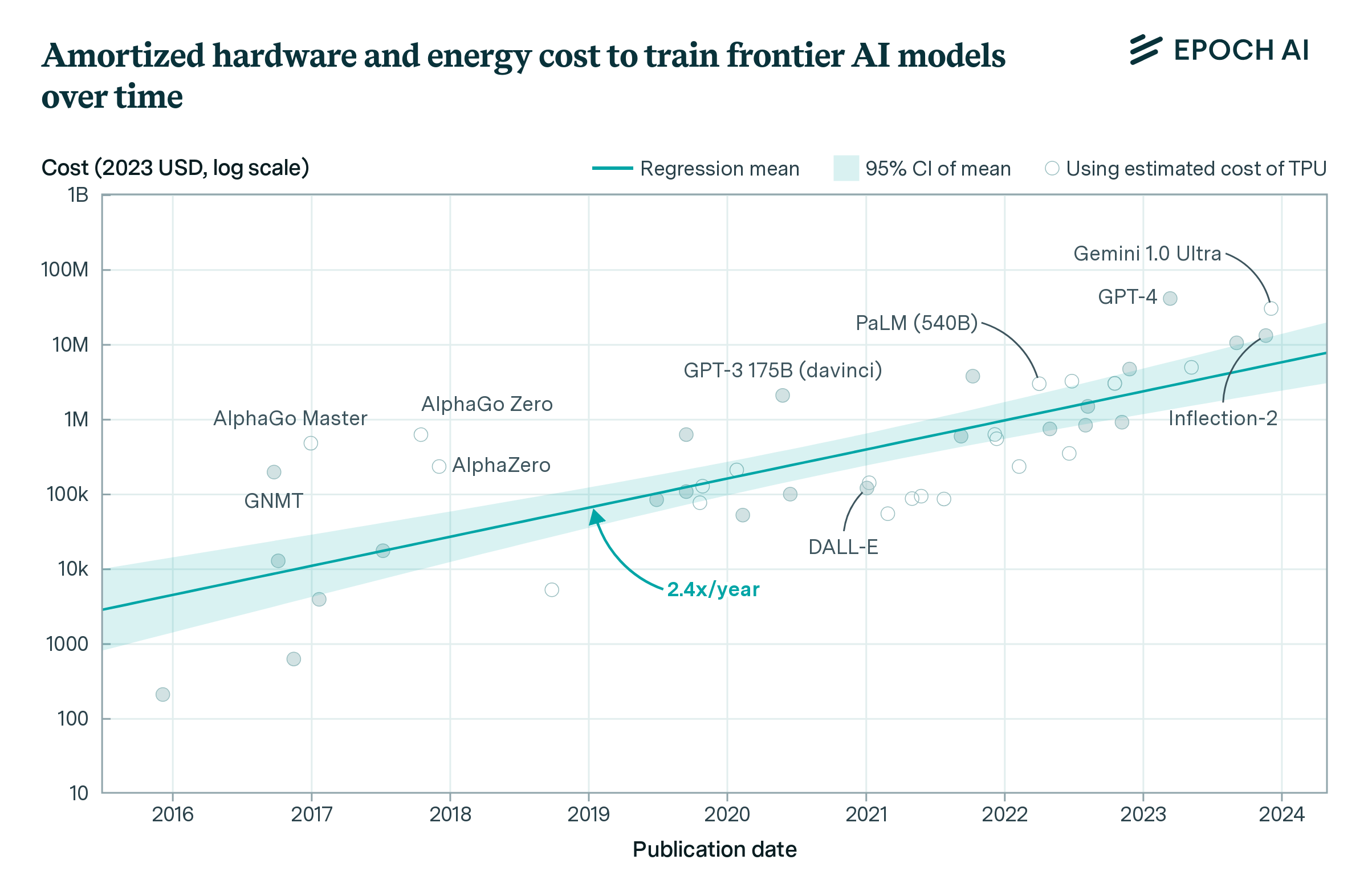

One of the main factors behind these delays is the high cost of training LLMs. Progress has also been slowed by hardware failures, driven by the complexity of the systems involved, and by power shortages, given the substantial energy that large training runs require. On top of this, companies have already exhausted all of the easily accessible data for training AI models.

Exploring New Concepts in AI

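To address these challenges, researchers are exploring new techniques such as 'test-time compute', which enhances existing AI models during the inference phase, when the model is actually being used. Rather than training a larger model, this approach lets a model devote more time and computing power to complex requests. For example, instead of immediately committing to a single answer, a model could generate and evaluate several possibilities before choosing the best one, producing stronger outputs.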

Noam Brown, a researcher at OpenAI, illustrated the idea at a TED AI event, explaining that having a bot think for just 20 seconds during a hand of poker delivered the same performance boost as scaling up the model by 100,000 times and training it for 100,000 times longer.

The Future of AI in Financial Services

As AI continues to evolve, many organisations are still in the early stages of implementing AI solutions, and they will inevitably encounter challenges and delays as they explore its full potential in financial services. Overcoming these obstacles and deploying AI effectively are key topics that will be discussed at Finextra's inaugural NextGen: AI event, taking place in London on 26 November.

Discover our agenda and register today to be part of reshaping AI in financial services.