Gemini 2.0: The most important advancement in Google's new AI

Google Cloud - Community

Welcome to the world of AI’s glitziest launch: Gemini 2.0 — Google’s flagship AI model, the new “super-agent” in town. Everyone’s talking about its jaw-dropping feats: it can interpret complex code, reason like a philosopher, and even handle text, images, and audio simultaneously. Some say it’s the next big leap in AI; others wonder if it’s the closest we’ve come to realizing science fiction’s ultimate dream of a sentient assistant.

But let’s pause for a second. Amid all the hullabaloo about Gemini 2.0’s capabilities, have you noticed the one thing no one seems to be shouting about? It’s not the multimodal reasoning or the agentic magic. It’s Google’s Responsible AI framework — the meticulously designed, yet underappreciated scaffolding that makes this AI both powerful and trustworthy.

The Foundation of Responsible AI

To understand why this is groundbreaking, we need to start with the foundation. Google’s seven AI principles, introduced in 2018, form the ethical bedrock of every AI innovation at Google. They emphasize fairness, accountability, and inclusivity, with the aim of ensuring that AI systems benefit humanity without causing harm. Gemini 2.0 isn’t just a marvel of engineering; it’s a testament to these principles, brought to life with cutting-edge safety measures like watermarking (SynthID), multilingual inclusivity, and AI-assisted risk assessments.

The Paradox of Responsible AI

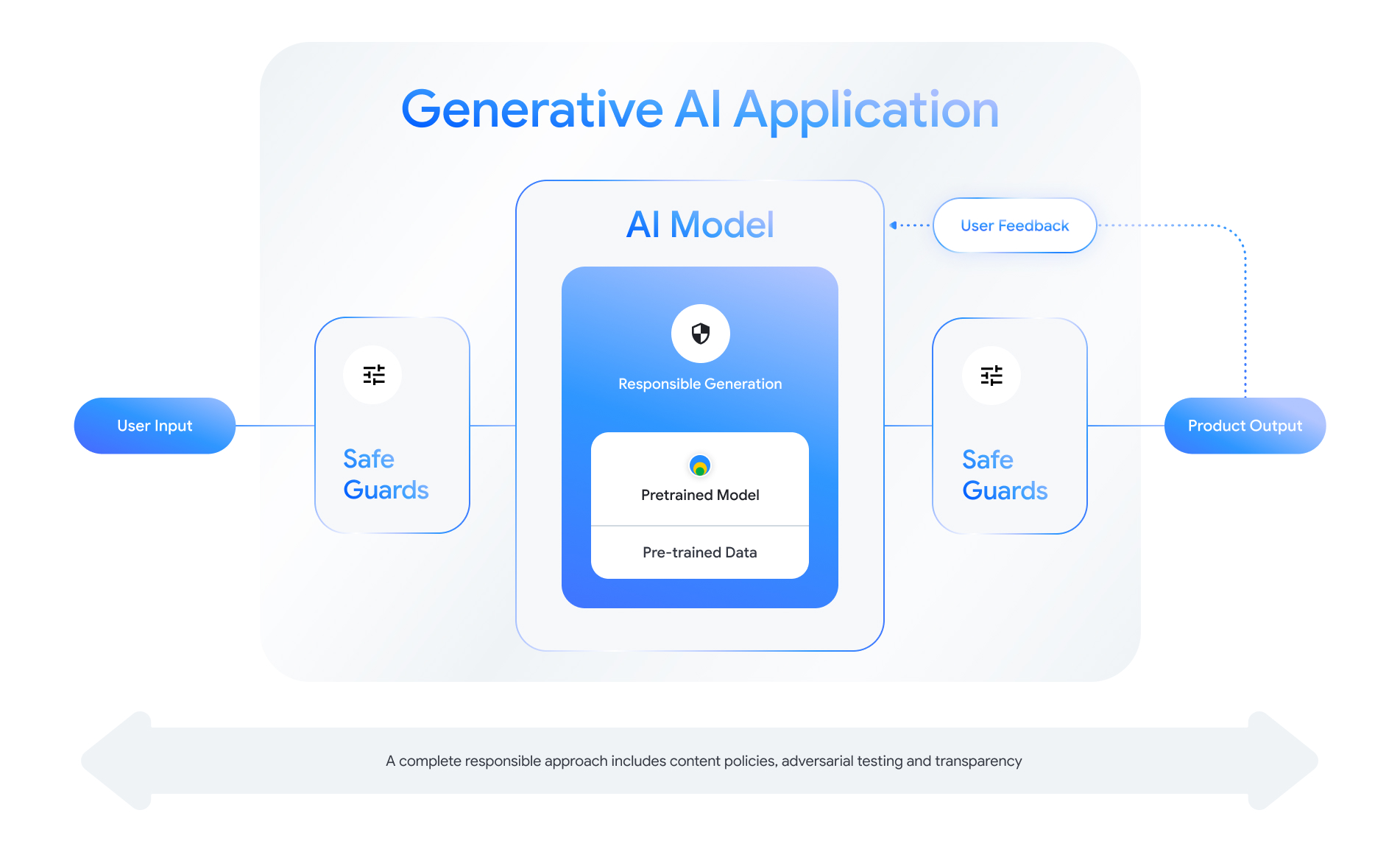

Yet, here’s the paradox: the very safeguards that make Gemini 2.0 safe and effective are often invisible to the casual observer. While flashy features grab the headlines, Responsible AI does the heavy lifting behind the scenes, from combating misinformation with imperceptible watermarks to thwarting malicious attacks through enhanced prompt injection resistance.

As someone who has spent years researching AI safety and ethics, I can say this with confidence: the real revolution isn’t just in what AI can do. It’s in how it does it: safely, ethically, and responsibly. And Google’s efforts in this space, particularly with Gemini 2.0, are a masterclass in balancing innovation with responsibility.

The Multimodal Powerhouse

Imagine trying to solve a puzzle where half the clues are images, a quarter are text, and the rest are audio snippets. Sounds daunting, right? Well, Gemini 2.0 thrives in exactly this kind of scenario. It’s not just a language model — it’s a multimodal powerhouse that can process and understand multiple types of data simultaneously.

The Agentic Capabilities

If multimodality is Gemini’s brain, its agentic capabilities are its heart. Unlike traditional AI models that passively respond to queries, Gemini can take proactive actions. Take Project Mariner, for example — a Chrome extension powered by Gemini 2.0. It can navigate websites, fill out forms, and automate repetitive tasks, all while keeping you in control.
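
To make "keeping you in control" a bit more concrete, here is a minimal sketch of a human-in-the-loop approval gate, a common pattern for agentic tools. It is not how Project Mariner is implemented; the `Action` class, the `sensitive` flag, and the `run_with_approval` function are all hypothetical names invented for this illustration, and nothing here calls a real Gemini API.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Action:
    """One step an agent proposes, e.g. filling in a form field (hypothetical)."""
    description: str
    sensitive: bool  # assumption: the agent marks steps that need user approval


def run_with_approval(actions: List[Action],
                      approve: Callable[[Action], bool]) -> List[str]:
    """Walk through proposed actions, asking `approve` before sensitive ones.

    Returns a log of what happened; nothing real is executed in this sketch.
    """
    log = []
    for action in actions:
        if action.sensitive and not approve(action):
            # The user said no, so the agent skips this step.
            log.append(f"skipped: {action.description}")
            continue
        log.append(f"done: {action.description}")
    return log


plan = [
    Action("open the checkout page", sensitive=False),
    Action("fill in the shipping address", sensitive=False),
    Action("submit the payment", sensitive=True),
]

# Stand-in for a real confirmation prompt: this user declines every request.
result = run_with_approval(plan, approve=lambda action: False)
print(result)
```

In this sketch the routine steps go ahead on their own, while the payment step waits for an explicit yes. In a real tool, `approve` would be a confirmation dialog rather than a lambda.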

Gemini 2.0 Flash

AI systems have always had one Achilles’ heel: latency. Ask a model a complex question, and you might find yourself waiting several seconds for a response. Enter Gemini 2.0 Flash, the experimental version that’s lightning-fast, capable of processing real-time audio and visual streams.

This isn’t just about speed; it’s about redefining how humans interact with AI. That shift comes with a cost, though: streaming real-time data opens new vulnerabilities, which Google addresses through robust privacy controls, encryption, and real-time assurance evaluations.

The Unsung Hero: Responsible AI

Let me tell you a little secret about tech: the shinier the object, the quieter its support team. Gemini 2.0 may be the star of the show, but every star needs a stage crew — and in this story, Responsible AI is the one pulling all the invisible strings.

While all these capabilities are undeniably exciting, they also overshadow a crucial truth: none of this would be possible without the Responsible AI framework supporting it. The innovations get the headlines, but it’s the safety measures — like watermarking, fairness testing, and red-teaming — that ensure these innovations don’t spiral into chaos.

In the next section, we’ll explore why Responsible AI gets so little attention despite being the unsung hero of Gemini 2.0. Spoiler alert: it’s like trying to sell broccoli at a candy store — it might not be flashy, but it’s what keeps everything healthy.