Alumni @ RopesTalk: DeepSeek Deep Dive with Dr. Vasanth Sarathy

On this special edition of Ropes & Gray’s Alumni @ RopesTalk podcast series, technology and IP transactions partner Regina Sam Penti is joined by Dr. Vasanth Sarathy, a professor of computer science at Tufts University and a Ropes & Gray alum. Together, they delve into the technical and business implications of DeepSeek, a Chinese app and model that has generated significant buzz in the AI world. They explore whether DeepSeek is a game-changer in AI, its cost advantages, and the potential impact on AI investments and startups. Dr. Sarathy also addresses the privacy and security concerns associated with DeepSeek, especially given its origins in China. Tune in to understand the reality behind the hype and what it means for businesses considering AI adoption.

Transcript:

Regina Sam Penti: Hi. Welcome to this episode of Alumni @ RopesTalk. My name is Regina Penti—I’m a partner in the IP transactions practice here at Ropes & Gray and have advised dozens of companies on AI strategy and adoption. Today, we are discussing DeepSeek, the Chinese app and model.

Many claims have been made about what this app and model mean for the AI world. Is it the killer of the current AI paradigm? Is it the fixer of AI energy issues? Is it a democratization of AI? And so on. My guest today is Dr. Vasanth Sarathy. Vasanth is a professor of computer science at Tufts University. His research is at the intersection of AI and natural language processing. He also regularly advises companies seeking to bridge the gap between business and technology. Vasanth is also a fully trained lawyer and a Ropes alum. Prior to going into academia, Vasanth was an associate here at Ropes & Gray for almost a decade. So, we’re really excited to welcome him back to discuss this really important topic. Welcome, Vasanth.

Dr. Vasanth Sarathy: Hi, thanks for having me.

Regina Sam Penti: Vasanth and I will focus today on the technical and business implications of DeepSeek, and we’ll try to sift the reality from the hype. For anyone interested in the legal risks and considerations regarding DeepSeek, I coauthored a Ropes & Gray alert that addresses these points; please reach out to me if you’d like a copy. Let’s just jump right in. For our listeners who may not be fully up to date on what DeepSeek is, how do you describe it? What makes it special? And why has it generated so much excitement and, if I may say so, confusion?

Dr. Vasanth Sarathy: DeepSeek, at the very high level, is a large language model (“LLM”) that was designed and built in China by a hedge fund.

The underlying idea here is that, traditionally, software engineering involves somebody writing a program: you take some input, you write the program, and it does some computations and produces an output. Machine learning came along and said, “You don’t have to write the program. We’ll just give it a bunch of inputs and outputs and it’ll produce the program for us.” Deep learning took that to a whole new level, where you now have much more complex systems. One way to think about these systems is as a big, giant machine with a whole bunch of knobs on it. When these machines get trained, those knobs get automatically adjusted, or tuned, so that in the future, when you have some input, you get the right kind of output. Language modeling is one subset of all of this, where you’re trying to predict the next word. That’s essentially what you’re doing: you’re looking at the existing set of words and asking, “What is the most likely next word?” Large language models do that, except that they were trained on very large amounts of data, trillions of words from across the internet. ChatGPT, which people are familiar with, is an example of a large language model.
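To make “predict the most likely next word” concrete, here is a toy sketch in Python. It is an illustration under loud assumptions, not how ChatGPT or DeepSeek actually work: it swaps the neural network (with its billions of tuned knobs) for simple word-pair (“bigram”) counts, and the three-sentence corpus and the function names `train_bigram` and `predict_next` are made up for this example. The point it shows is the one Dr. Sarathy describes: nobody hand-writes the rule; the “knobs” (here, the counts) are set automatically from example text.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigram(corpus: List[str]) -> Dict[str, Counter]:
    """'Train' by counting how often each word follows each other word.

    The counts act as this toy model's knobs: they are set automatically
    from example text instead of being written by hand.
    """
    counts: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return dict(counts)


def predict_next(counts: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the word most often seen after `word`, or None if `word` is unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# A made-up, three-sentence "training set" (hypothetical data).
corpus = [
    "the model predicts the next word",
    "the model reads the text",
    "the model is large",
]
bigram_counts = train_bigram(corpus)
print(predict_next(bigram_counts, "the"))  # prints: model ("model" follows "the" 3 of 5 times)
```

A real large language model replaces the counting step with training a neural network and looks at far more context than the single previous word, but the output has the same shape: a guess at the most likely next word.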

There are others, such as Claude, and DeepSeek is also in that same family of large language models. To understand what’s interesting about DeepSeek, it helps to know that when these large language models come out, their developers often do a whole bunch of what’s called “benchmarking,” which is, essentially, evaluating these models to see how well they do on a variety of different tasks.
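As a rough picture of what benchmarking means, the sketch below scores a model on a handful of questions grouped by task and reports the fraction answered correctly per task. Everything here is hypothetical: the task names, the questions, and `toy_model`, a hard-coded stand-in for a real LLM. It also assumes exact-match scoring, which is only one simple choice; real benchmarks are much larger and often use more forgiving scoring rules.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical benchmark items: (task name, question, expected answer).
BENCHMARK: List[Tuple[str, str, str]] = [
    ("arithmetic", "What is 12 + 30?", "42"),
    ("arithmetic", "What is 7 * 6?", "42"),
    ("capitals", "What is the capital of France?", "Paris"),
    ("capitals", "What is the capital of Japan?", "Tokyo"),
]


def evaluate(model: Callable[[str], str],
             items: List[Tuple[str, str, str]]) -> Dict[str, float]:
    """Return the fraction of exactly matching answers for each task."""
    correct: Dict[str, int] = {}
    total: Dict[str, int] = {}
    for task, question, expected in items:
        total[task] = total.get(task, 0) + 1
        if model(question).strip() == expected:
            correct[task] = correct.get(task, 0) + 1
    return {task: correct.get(task, 0) / total[task] for task in total}


def toy_model(question: str) -> str:
    """Stand-in for a real LLM: hard-coded answers, one of them wrong."""
    answers = {
        "What is 12 + 30?": "42",
        "What is 7 * 6?": "42",
        "What is the capital of France?": "Paris",
        "What is the capital of Japan?": "Kyoto",
    }
    return answers.get(question, "")


print(evaluate(toy_model, BENCHMARK))  # prints: {'arithmetic': 1.0, 'capitals': 0.5}
```

Comparing models then amounts to running each one through the same items and comparing the per-task scores, which is how claims like “comparable performance on reasoning tasks” are usually backed up.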

Reasoning Tasks:

You can think of reasoning as just thinking through multiple steps. A goal behind a lot of the new AI models is to build better reasoning models, so that they actually think about an answer before they give it to you, or are able to do some kind of thinking internally. DeepSeek’s model is officially called R1, and the latest R1 model is another flavor of these reasoning models. What’s interesting is that the performance of these models, particularly DeepSeek, isn’t that much better than that of existing models.

Models like ChatGPT and Meta’s model, called Llama, all perform very similarly when it comes to reasoning tasks. So then the question is: Why did everything go crazy when DeepSeek came out?

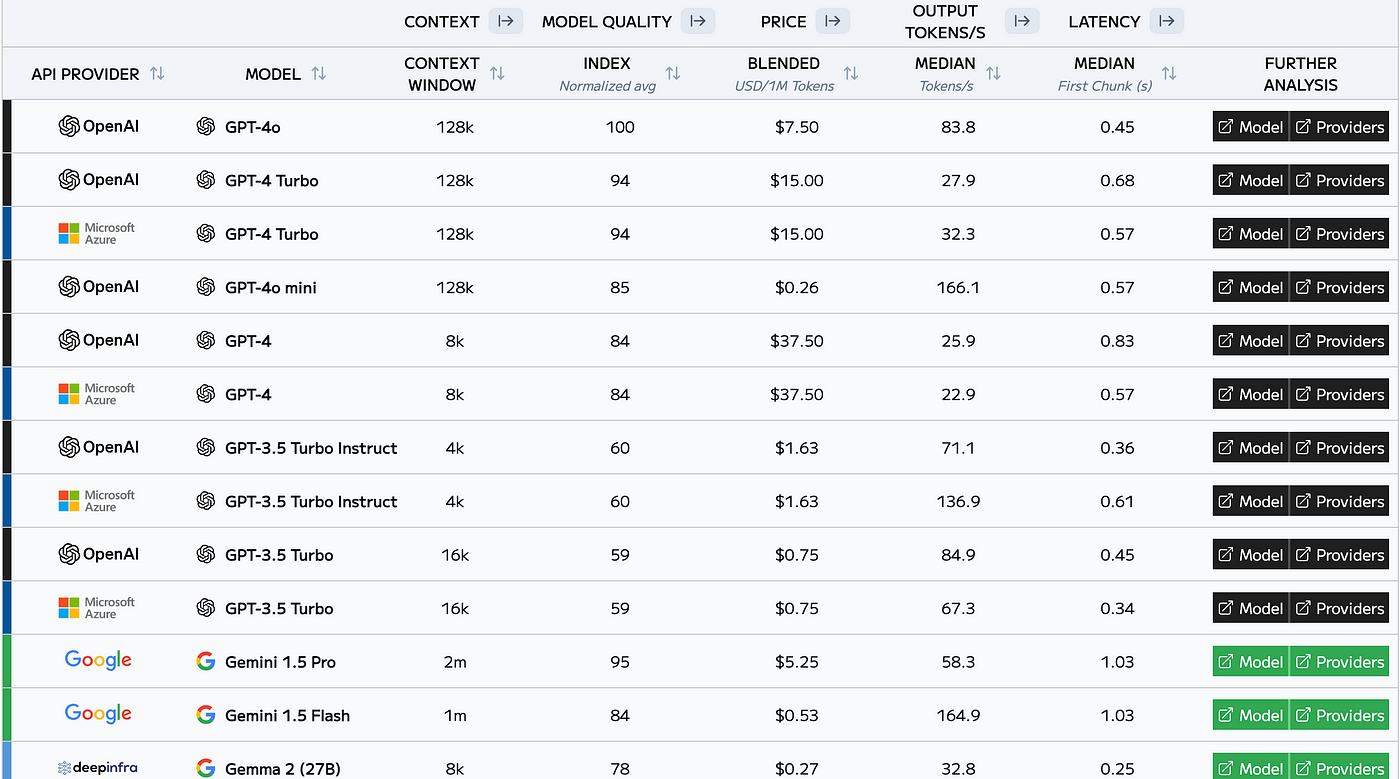

One of the big reasons is that it was very cheap. If you were using the app, you were paying much less than you would pay for ChatGPT, and that got people’s interest. It was significantly cheaper, I think 30 times cheaper. Then there is the other issue: even though the performance of DeepSeek is comparable to, say, ChatGPT and its later versions (the most recent versions are called o1 and o3), DeepSeek does much better than a lot of the open-source models, which are models that are publicly available for anybody to use. Oftentimes, models like ChatGPT are behind a proprietary wall, and you don’t get to see their internals at all.
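To see what a cost gap of roughly 30 times means for a business, the arithmetic below compares a monthly bill under two per-token prices. The prices and the monthly token volume are hypothetical placeholders chosen only so that their ratio matches Dr. Sarathy’s “I think, 30 times cheaper” estimate; they are not published prices for ChatGPT, DeepSeek, or any other service. The sketch also assumes a simple flat price per million tokens, whereas real pricing often differs for input and output tokens.

```python
def monthly_cost(tokens: int, price_per_million: float) -> float:
    """Dollar cost of processing `tokens` tokens at a flat price per million tokens."""
    return tokens / 1_000_000 * price_per_million


MONTHLY_TOKENS = 50_000_000  # hypothetical monthly workload
PRICE_A = 60.00              # hypothetical $ per 1M tokens, pricier model
PRICE_B = 2.00               # hypothetical $ per 1M tokens, cheaper model

cost_a = monthly_cost(MONTHLY_TOKENS, PRICE_A)  # 50 * 60 = 3000.0
cost_b = monthly_cost(MONTHLY_TOKENS, PRICE_B)  # 50 * 2 = 100.0
print(f"A: ${cost_a:,.2f}  B: ${cost_b:,.2f}  ratio: {cost_a / cost_b:.0f}x")
# prints: A: $3,000.00  B: $100.00  ratio: 30x
```

Because cost scales linearly with volume under this assumption, the ratio stays at 30x at any workload size, while the absolute savings grow with usage, which is why the price gap drew so much attention from companies running AI at scale.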