ChatGPT Evaluates Online Comments: Accuracy In Detecting ...

ChatGPT has made significant strides in identifying inappropriate online comments, with accuracy improving through iterative refinements up to Version 6. Its detection of targeting language, meaning language aimed at specific individuals or groups, is less consistent and produces a higher rate of false positives than human expert evaluations. This gap highlights the need for ongoing development and a deeper understanding of contextual nuances.

Challenges of Online Content Moderation

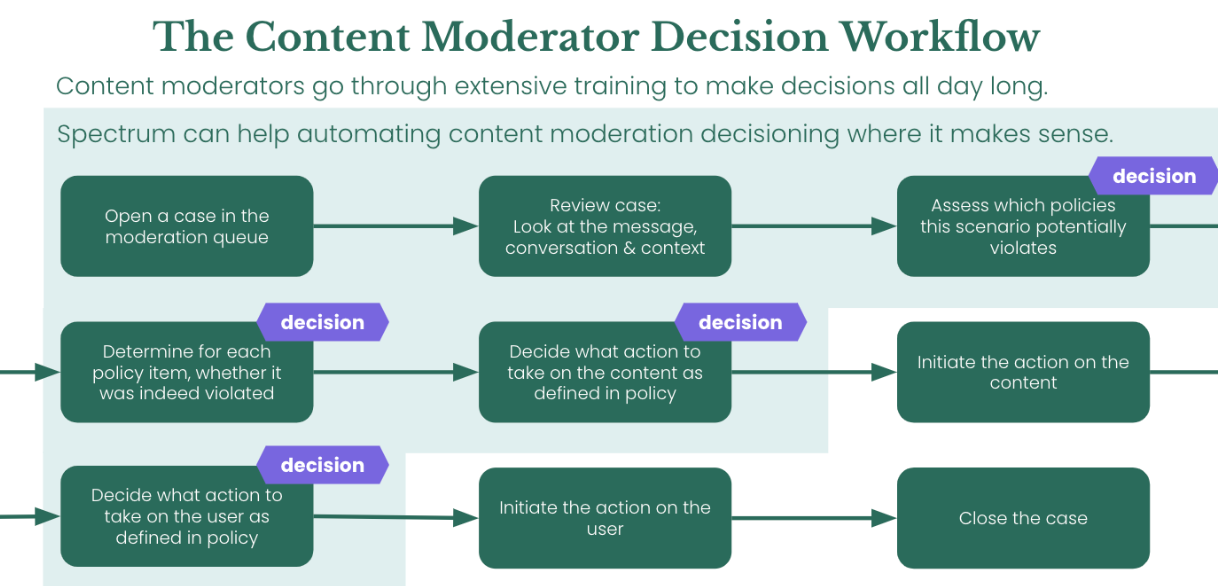

The proliferation of user-generated content on digital platforms poses a formidable challenge for maintaining respectful conversations and shielding users from harmful interactions. While automated content moderation powered by artificial intelligence offers a potential solution, it requires meticulous evaluation to ensure effectiveness and dependability.

Research on ChatGPT's Performance

A study by Baran Barbarestani, Isa Maks, and Piek Vossen of Vrije Universiteit Amsterdam, titled 'Assessing and Refining ChatGPT’s Performance in Identifying Targeting and Inappropriate Language: A Comparative Study', evaluates how well ChatGPT recognizes abusive language and targeted harassment in online discourse. The research compares ChatGPT's output with human annotations and expert assessments to gauge its accuracy and consistency.
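To make the comparison concrete, the sketch below scores a set of model labels against expert labels and reports accuracy and false positive rate, the two measures this article refers to. It is only an illustration: the function name `compare_to_experts`, the binary label encoding (1 = flagged, 0 = not flagged), and the sample labels are assumptions made here and are not taken from the study.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class BinaryMetrics:
    accuracy: float
    false_positive_rate: float
    precision: float
    recall: float


def compare_to_experts(model_labels: Sequence[int],
                       expert_labels: Sequence[int]) -> BinaryMetrics:
    """Score model labels against expert labels (1 = flagged, 0 = not flagged).

    Hypothetical helper: expert labels are treated as the reference.
    """
    if len(model_labels) != len(expert_labels):
        raise ValueError("label lists must have the same length")
    if not model_labels:
        raise ValueError("label lists must not be empty")

    tp = fp = tn = fn = 0
    for model, expert in zip(model_labels, expert_labels):
        if model == 1 and expert == 1:
            tp += 1
        elif model == 1 and expert == 0:
            fp += 1  # model flagged a comment the experts considered fine
        elif model == 0 and expert == 0:
            tn += 1
        else:
            fn += 1  # model missed a comment the experts flagged

    def ratio(numerator: int, denominator: int) -> float:
        # Return 0.0 instead of dividing by zero when a class is absent.
        return numerator / denominator if denominator else 0.0

    return BinaryMetrics(
        accuracy=ratio(tp + tn, tp + fp + tn + fn),
        false_positive_rate=ratio(fp, fp + tn),
        precision=ratio(tp, tp + fp),
        recall=ratio(tp, tp + fn),
    )


# Made-up labels for eight comments, for illustration only.
expert = [1, 0, 0, 1, 0, 0, 1, 0]
model = [1, 1, 0, 1, 1, 0, 0, 0]

metrics = compare_to_experts(model, expert)
print(f"accuracy={metrics.accuracy:.3f}")        # accuracy=0.625
print(f"fpr={metrics.false_positive_rate:.3f}")  # fpr=0.400
```

A high false positive rate in this kind of table means the model flags comments that the experts judged acceptable, which is the pattern reported for targeting language.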

Automated Solutions for Content Moderation

The rapid growth of user-generated content makes manual moderation impractical and calls for scalable, automated approaches. ChatGPT's effectiveness at identifying inappropriate content and abuse targeted at individuals shows the value of advanced linguistic analysis and intent comprehension. However, it still has difficulty identifying nuanced forms of harassment accurately, and its false positives raise concerns about over-censorship.

Contextual Understanding and Human Oversight

While ChatGPT excels at flagging overtly offensive language, it struggles to decipher subtle forms of abuse that rely on implicit meanings. The model's tendency towards false positives underscores the need for careful calibration and human review to prevent unintended restrictions on legitimate expression. Balancing automated systems with human oversight is crucial to uphold fairness and protect freedom of speech.
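One way to picture this balance is a routing rule that lets the automated system act alone only on flags it is very sure about and sends the rest to a human moderator. The sketch below is a hypothetical design, not something described in the study: the `route_comment` function, the confidence score, and the 0.9 threshold are all assumptions chosen for illustration.

```python
def route_comment(flagged: bool, confidence: float,
                  review_threshold: float = 0.9) -> str:
    """Decide what happens to a comment after automated screening.

    Hypothetical policy: `confidence` is assumed to be a score in [0, 1]
    attached to the model's decision; `review_threshold` is an arbitrary
    illustrative value that would need calibration on real data.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if not flagged:
        return "publish"
    if confidence >= review_threshold:
        return "remove"
    # Uncertain flags go to a person, which limits the cost of false positives.
    return "human_review"


print(route_comment(flagged=False, confidence=0.95))  # publish
print(route_comment(flagged=True, confidence=0.97))   # remove
print(route_comment(flagged=True, confidence=0.60))   # human_review
```

Raising the threshold sends more flagged comments to human reviewers and fewer to automatic removal, trading moderator workload for a lower risk of restricting legitimate expression.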

Future of AI-Driven Content Moderation

The study underscores the potential of large language models like ChatGPT in enhancing content moderation practices. Continuous refinement of models, coupled with a focus on contextual comprehension and human intervention, is imperative to foster safer online environments and combat harmful behaviors effectively.

👉 More information

🗞 Assessing and Refining ChatGPT’s Performance in Identifying Targeting and Inappropriate Language: A Comparative Study