Run powerful AI models locally with Ollama! | by Abbas Salloum

Running Large Language Models (LLMs) locally is a growing trend in the AI community. It keeps your data on your own hardware and gives you greater control over the models and their outputs. In this article, we will walk you through installing LLMs on your local device and running your first prompt.

Generative AI

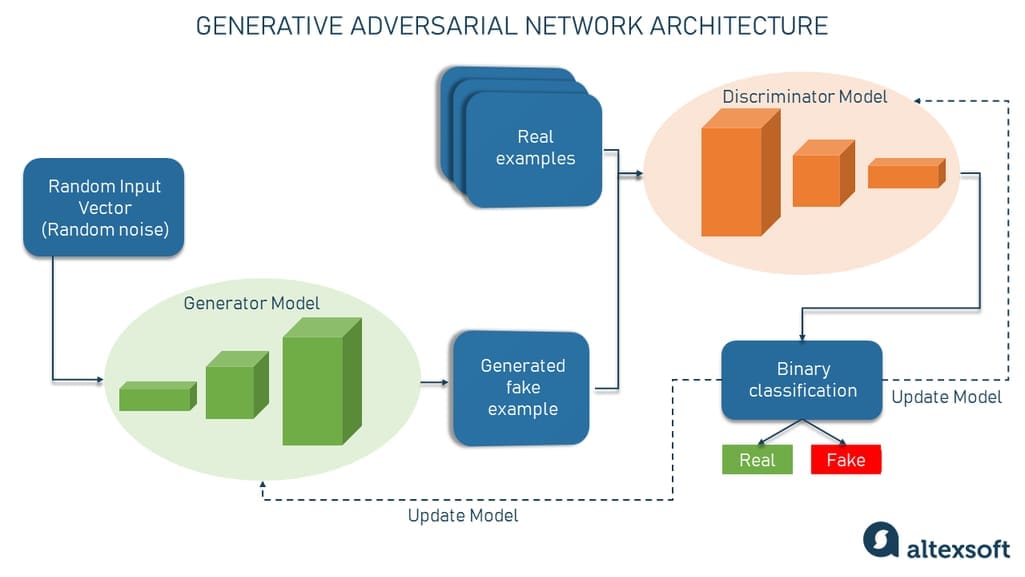

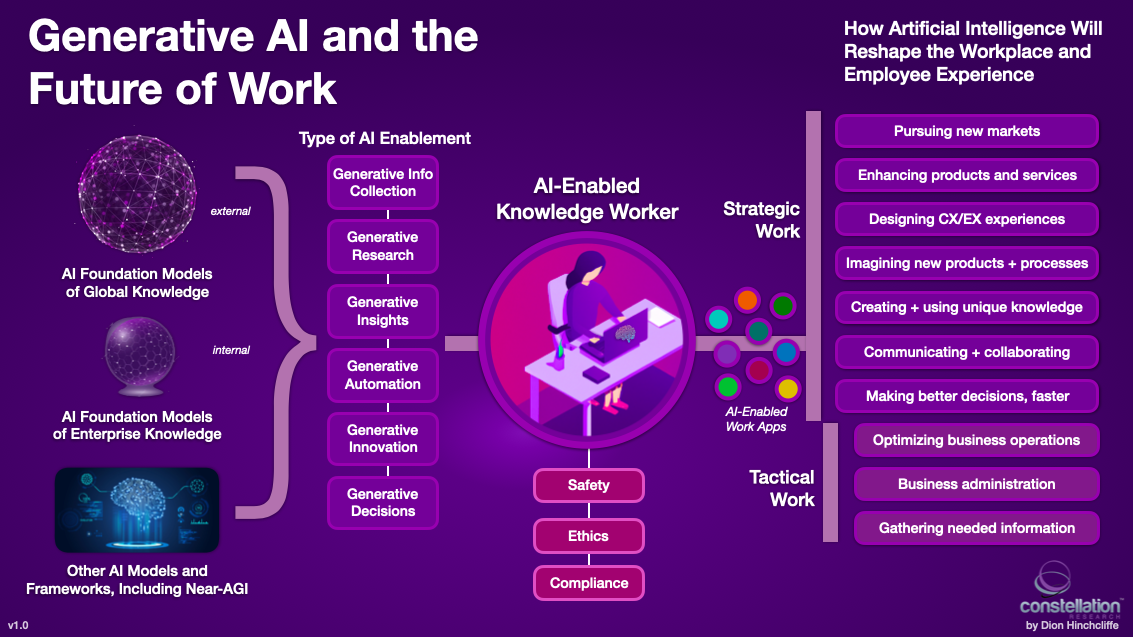

Generative AI is transforming the field of artificial intelligence by enabling machines to generate text, images, and code from a simple prompt. One key development is the ability to run powerful AI models locally instead of relying on cloud services.

Ollama is an open-source tool for downloading and running LLMs on your own machine. Because your prompts and the model's responses stay on your device, you keep your data secure and under your direct supervision. This improves privacy and confidentiality, and it also lets you tailor the model's configuration to your specific needs.

Advantages of Local AI Model Execution

When you run AI models locally with Ollama, you benefit from:

- Increased Security: Your data remains on your local device, reducing the risk of unauthorized access.

- Greater Control: You have full control over the model and its outputs, so you can adjust its parameters, set your own system prompt, or load fine-tuned weights.

- Enhanced Privacy: By avoiding external servers, you maintain the privacy of your data and interactions.
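As a sketch of the customization the "Greater Control" point refers to, the Python snippet below generates a Modelfile, the plain-text recipe Ollama uses to define a custom model (`FROM`, `PARAMETER`, and `SYSTEM` are Modelfile instructions). It assumes a model named `llama3` has already been downloaded; the helper name `build_modelfile`, the system prompt, and the parameter values are hypothetical, illustrative choices rather than recommendations.

```python
from __future__ import annotations


def build_modelfile(base_model: str, system_prompt: str, parameters: dict[str, object]) -> str:
    """Return Modelfile text that derives a custom model from base_model."""
    # FROM names the already-downloaded model to build on.
    lines = [f"FROM {base_model}"]
    # Each PARAMETER line overrides one default setting of the base model.
    for name, value in parameters.items():
        lines.append(f"PARAMETER {name} {value}")
    # SYSTEM bakes a system prompt into the custom model.
    lines.append(f'SYSTEM """{system_prompt}"""')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical example values; adjust them to your own use case.
    modelfile = build_modelfile(
        base_model="llama3",
        system_prompt="You are a concise assistant. Answer in at most three sentences.",
        parameters={"temperature": 0.2, "num_ctx": 4096},
    )
    print(modelfile, end="")
```

You could save the output to a file named `Modelfile` (for example, `python make_modelfile.py > Modelfile`, where the script name is hypothetical) and register it with `ollama create my-assistant -f Modelfile`. Running `ollama run my-assistant` would then start a session with those settings; `my-assistant` is also just an example name.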

Getting Started with Ollama

To begin running AI models locally with Ollama, follow these steps:

- Installation: Download Ollama for your operating system (macOS, Windows, or Linux) from ollama.com and install it on your local device.

- Configuration: Optionally adjust settings and parameters, such as the sampling temperature or context length, to suit your requirements.

- Running LLMs: Download a model (for example, with `ollama pull llama3`), start it with `ollama run llama3`, and type a prompt to generate a response.
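Besides the interactive `ollama run` session, Ollama serves a local HTTP API, by default at `http://localhost:11434`. The sketch below sends a first prompt to its `/api/generate` endpoint using only the Python standard library. It assumes the server is running on the default port and that a model named `llama3` has been pulled; `build_request` and `generate` are hypothetical helper names, and the temperature value is only an example.

```python
import json
import urllib.error
import urllib.request

# Default address of a local Ollama server; change it if you run Ollama elsewhere.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str, temperature: float = 0.7) -> urllib.request.Request:
    """Build a non-streaming POST request for the /api/generate endpoint."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,  # return one JSON object instead of a stream of chunks
        "options": {"temperature": temperature},  # per-request sampling settings
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, temperature: float = 0.7) -> str:
    """Send the prompt to the local server and return the generated text."""
    request = build_request(model, prompt, temperature)
    # Generation can take a while on modest hardware, so allow a generous timeout.
    with urllib.request.urlopen(request, timeout=300) as response:
        body = json.load(response)
    # In non-streaming mode, the full completion is in the "response" field.
    return body["response"]


if __name__ == "__main__":
    try:
        print(generate("llama3", "Explain in one sentence what a large language model is."))
    except urllib.error.URLError as err:
        # Raised when the server is not running or the request is rejected.
        print(f"Could not get a reply from {OLLAMA_URL}: {err}")
```

Setting `stream` to false trades token-by-token output for a single JSON reply, which keeps the example short. Because the request only goes to `localhost`, the prompt never leaves your machine.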

By following these simple steps, you can run capable AI models directly on your own machine and build them into your everyday workflows.

Join the growing community of AI enthusiasts who are embracing local AI model execution with Ollama. Experience the future of artificial intelligence today!