Updated: You Should Default To Google's Gemini Flash 1.5 Or GPT ...

Today, OpenAI released "GPT-4o mini", which takes direct aim at Gemini Flash. Relative to Flash, GPT-4o mini is slightly slower (~12%), slightly more capable (1%), and about 40% cheaper. Both are significantly cheaper than their big-brother models. With this model in the wild, you should be using one of the two as your go-to Agent model.

Artificialanalysis.ai recently released a wonderful and comprehensive breakdown of quality vs speed vs price for most of the major LLM providers. In particular, Google's Gemini Flash has amazing price vs quality vs speed ratios; if you aren't at least trying it for your AI Agent and Agentic workflows, you should be.

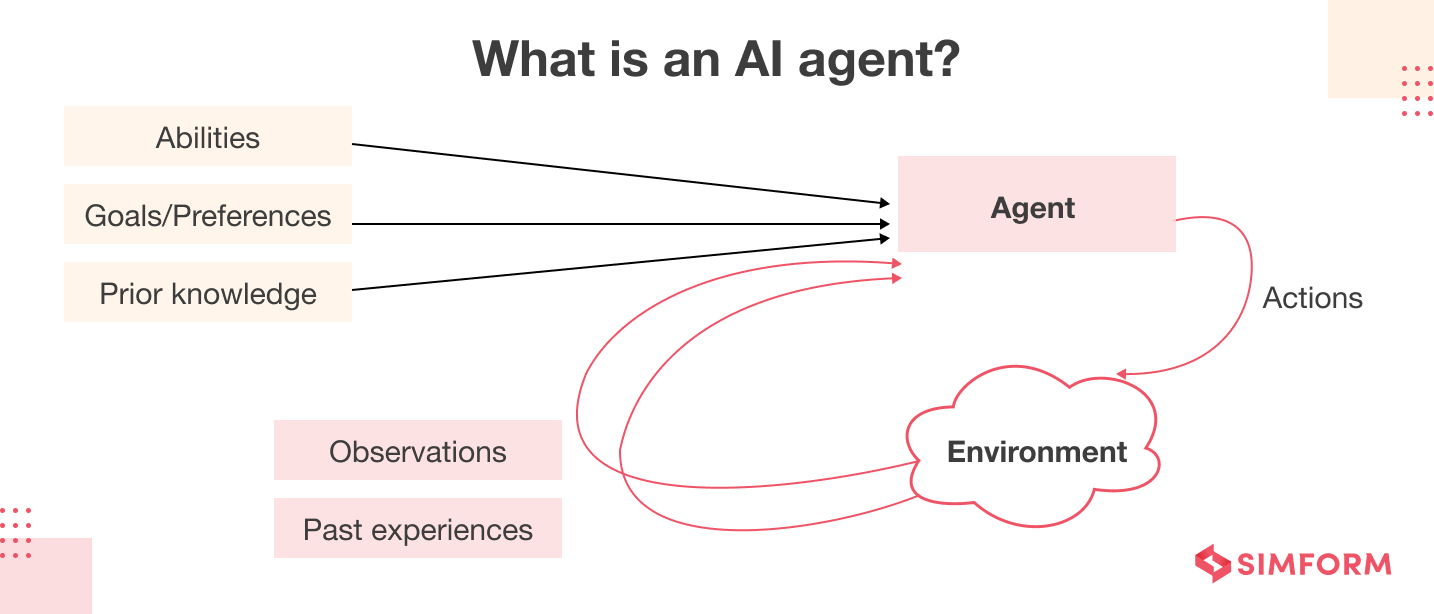

AI Agents Complexity

AI Agents are not simply LLM API calls. They are complex processes that may retry if they don't like their own output, may connect to and process data from elsewhere via tools, and may pass their output as context to other agents. This complexity adds a second level of non-determinism to the time it takes them to complete the task at hand.
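To make the retry behavior concrete, here is a minimal sketch of a single agent step that re-asks the model until it accepts the output. It is not CrewAI's internals; `run_with_retries`, `make_scripted_llm`, and the latency numbers are hypothetical, and the "model" is a scripted stand-in that returns a canned reply plus a simulated latency.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Tuple

# A fake "LLM call" returns (text, simulated_latency_seconds).
LlmCall = Callable[[str], Tuple[str, float]]


@dataclass
class StepResult:
    output: str
    attempts: int
    seconds: float


def run_with_retries(
    call_llm: LlmCall,
    is_acceptable: Callable[[str], bool],
    prompt: str,
    max_attempts: int = 3,
) -> StepResult:
    """Call the model until the output passes the check or attempts run out."""
    total_seconds = 0.0
    output = ""
    for attempt in range(1, max_attempts + 1):
        output, latency = call_llm(prompt)
        total_seconds += latency  # every retry adds a full round trip
        if is_acceptable(output):
            return StepResult(output, attempt, total_seconds)
    # Give up and return the last output we saw.
    return StepResult(output, max_attempts, total_seconds)


def make_scripted_llm(responses: Iterable[Tuple[str, float]]) -> LlmCall:
    """Hypothetical stand-in for a real model: replays canned responses."""
    iterator = iter(responses)

    def call(prompt: str) -> Tuple[str, float]:
        return next(iterator)

    return call


# First reply is empty (rejected), second is accepted.
flaky_llm = make_scripted_llm([("", 1.0), ("draft summary", 0.5)])
result = run_with_retries(flaky_llm, lambda text: bool(text.strip()), "Summarize the report")
print(result.attempts, result.seconds)
```

The point of the sketch is that the step's duration depends on how many attempts the agent happens to need, on top of the variability of each individual call.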

CrewAI is a great framework for building Agentic workflows. It's easy to use but still quite powerful. In this simple example, we'll define two agents: one to summarize the data and one to format it. For good measure, I also ran the prompt against gemini-1.5-pro and gpt-4-turbo. We can see the distinct speedup Flash enjoys.
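A comparison like this can be approximated with a small timing harness. The sketch below is framework-agnostic and does not use CrewAI's API: the two "agents" are plain functions, and the models are hypothetical stubs that sleep for a fixed delay, so the names and delays are assumptions for illustration only. To compare real models, swap each stub for a function that calls the provider of your choice.

```python
import time
from typing import Callable, Dict, Tuple

Llm = Callable[[str], str]


def summarize(text: str, llm: Llm) -> str:
    """First agent: condense the input."""
    return llm(f"Summarize:\n{text}")


def format_summary(summary: str, llm: Llm) -> str:
    """Second agent: takes the first agent's output as its context."""
    return llm(f"Format as bullet points:\n{summary}")


def time_pipeline(llm: Llm, text: str) -> Tuple[str, float]:
    """Run both agents in sequence and return (output, wall-clock seconds)."""
    start = time.perf_counter()
    formatted = format_summary(summarize(text, llm), llm)
    return formatted, time.perf_counter() - start


def make_stub_llm(delay_seconds: float) -> Llm:
    """Hypothetical stand-in for a model: sleeps, then echoes the last prompt line."""

    def llm(prompt: str) -> str:
        time.sleep(delay_seconds)
        return prompt.splitlines()[-1].upper()

    return llm


# Stub names and delays are made up; they are not measurements of real models.
models: Dict[str, Llm] = {
    "fast-stub": make_stub_llm(0.01),
    "slow-stub": make_stub_llm(0.05),
}

timings = {name: time_pipeline(llm, "quarterly numbers")[1] for name, llm in models.items()}
for name, seconds in sorted(timings.items(), key=lambda item: item[1]):
    print(f"{name}: {seconds:.3f}s")
```

Because the second agent waits on the first, per-call latency compounds across the pipeline, which is why a faster model shows up so clearly in multi-agent runs.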

Optimizing AI Agents with Gemini Flash 1.5

If we introduce HTTP calls for the research portion (and even the format portion), we can expect even longer durations with additional variability. Gemini 1.5 Flash is positioned high enough on reasoning and outperforms the competition on speed and price, making it a great default choice for powering your agents.

If you're using GPT-3.5 or GPT-4 Turbo, Gemini 1.5 Flash will be faster, cheaper, and better. If you're using GPT-4o (or Gemini-pro-1.5), you'd be giving up about 10% in reasoning & knowledge and 4.5% in general ability for a 2x speedup and nearly 7x price reduction. It’s worth a test if nothing else.
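As a rough worked example of that trade-off, the sketch below applies the 2x speedup and 7x price reduction to a workflow. The baseline figures (60 seconds per run, $7.00 per 1,000 runs) are hypothetical, and the calculation assumes the workflow's time and cost are dominated by LLM calls; tool and HTTP time would not shrink.

```python
from typing import Tuple


def scaled_workflow(
    baseline_seconds: float,
    baseline_cost: float,
    speedup: float,
    price_reduction: float,
) -> Tuple[float, float]:
    """Estimate time and cost after switching models.

    Assumes both are dominated by LLM calls, so they scale directly
    with the model's speed and price ratios.
    """
    return baseline_seconds / speedup, baseline_cost / price_reduction


# Hypothetical baseline: 60 s per run, $7.00 per 1,000 runs on the larger model.
seconds, cost = scaled_workflow(60.0, 7.00, speedup=2.0, price_reduction=7.0)
print(f"{seconds:.0f}s per run, ${cost:.2f} per 1,000 runs")
```

Whether the roughly 10% drop in reasoning and knowledge is acceptable depends on the task, which is why running your own workflow against both models is the real test.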

Llama3 on Groq stands out in the report as well. If you're focused on more open models, this route looks very promising.

Reference - Final Python Code
