Gemini enabled the production of terrorist and child abuse deepfakes

On March 5, 2025, Google submitted a report to the Australian eSafety Commission detailing terrorist and child abuse deepfakes generated with its AI model, Gemini. Between April 2023 and February 2024, the tech giant received 258 complaints about terrorist deepfakes and 86 about child abuse deepfakes.

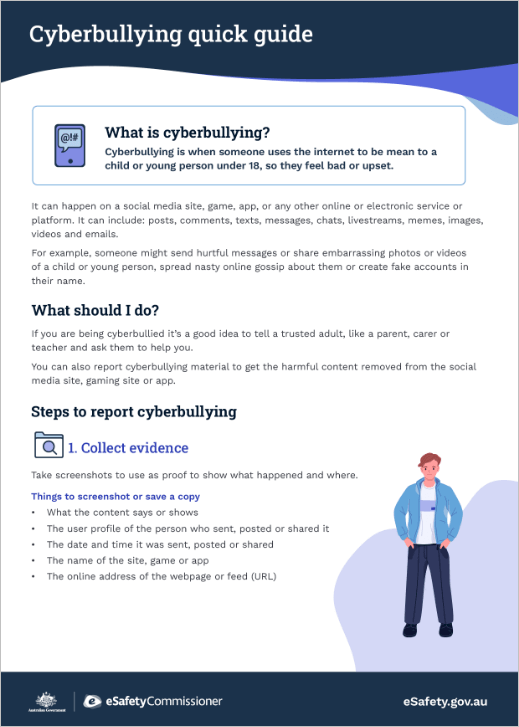

Report to the Australian eSafety Commission

According to eSafety Commissioner Julie Inman Grant, the report is a “global first” and “highlights how critical it is for companies developing AI products to integrate and test the effectiveness of safeguards aimed at preventing the creation of such content.”

Compliance with Australian Law

Australian law requires tech companies to regularly provide eSafety with information on the measures implemented to strengthen the protection of their systems. The commission has not yet reviewed the reports submitted by Google.