Meta's AI Risk Policy: A Careful Balance Between Open Access and...

Meta CEO Mark Zuckerberg has pledged that artificial general intelligence (AGI) will eventually be accessible to all. AGI refers to AI systems capable of performing tasks at a level comparable to human intelligence. However, Meta has recently released a policy document describing circumstances under which the company might withhold its most capable AI models because of the risks they pose.

The Frontier AI Framework

This policy, dubbed the Frontier AI Framework, defines two categories of AI systems that Meta considers too hazardous for unrestricted release: "high-risk" and "critical-risk" models. Meta describes high-risk systems as those that could help carry out cybersecurity attacks or chemical and biological attacks, though not as reliably or dependably as critical-risk systems. Critical-risk systems, by contrast, are those that could lead to catastrophic outcomes that cannot be effectively mitigated.

The company provides specific examples, such as an AI capable of launching a full-scale cyberattack on a secure corporate network or one that could expedite the development of high-impact biological weapons. While these examples are not exhaustive, Meta identifies them as some of the most pressing and realistic dangers posed by advanced AI.

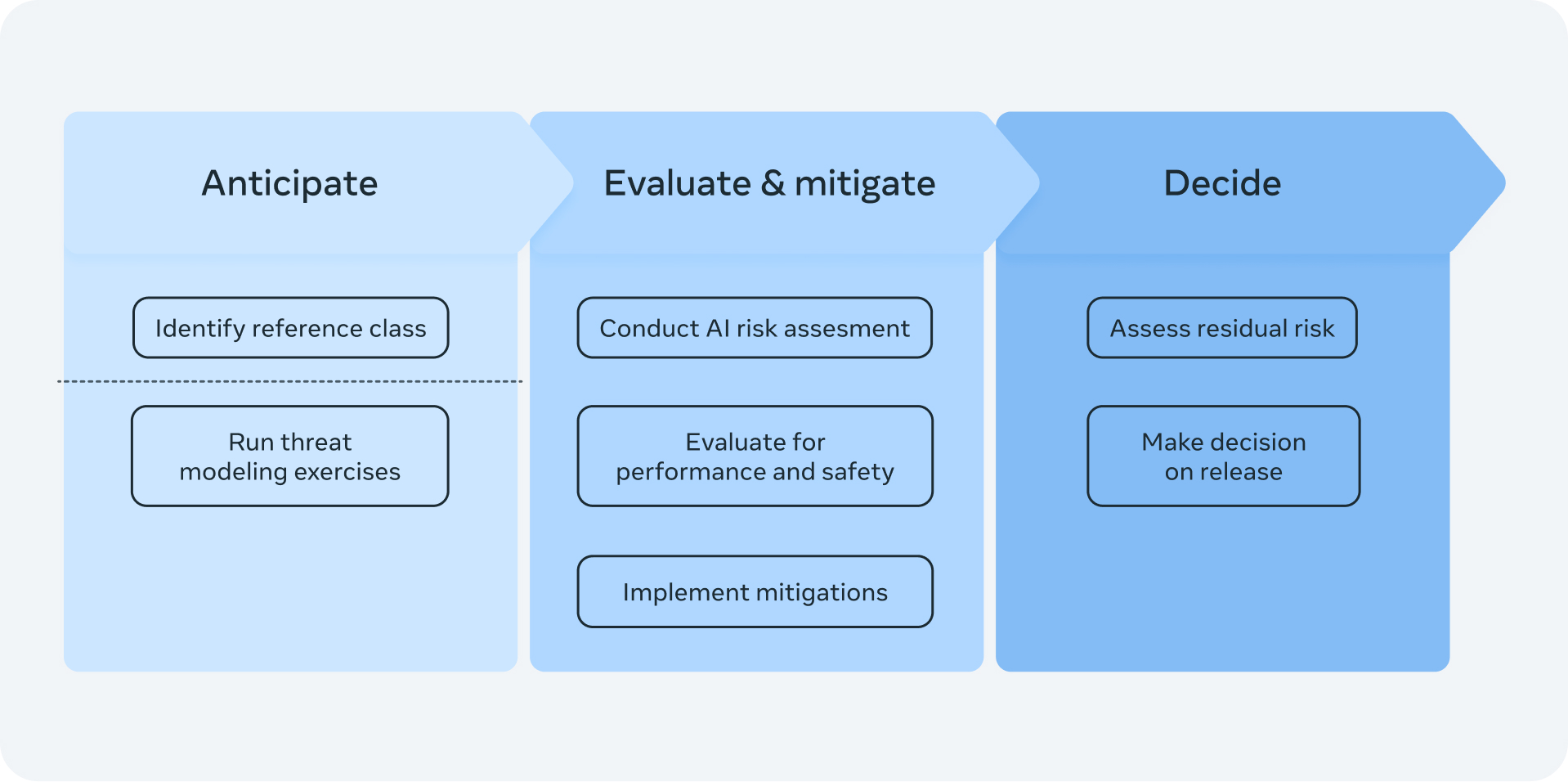

Risk Assessment Process

Meta classifies a system's risk based on input from internal and external researchers, with final decisions made by senior leadership. The company argues that current scientific methods for evaluating AI safety are not yet robust enough to produce definitive, quantitative risk metrics.

If Meta deems a system high-risk, it will limit access to the system internally and will not release it until mitigations reduce the risk to moderate levels. If a system is deemed critical-risk, Meta will put security protections in place to prevent the system from being accessed without authorization or exfiltrated, and will halt further development until the risks are adequately mitigated.

Adapting to Evolving Risks

Meta says the Frontier AI Framework is designed to evolve as AI risks change. Its publication fulfills a commitment Meta made to release the framework ahead of the AI Action Summit in France. The move likely also responds to criticism of Meta's historically open approach to AI development.

Unlike competitors such as OpenAI, which offer access to their models only through APIs, Meta has positioned itself as a leader in open AI research, although its models do not fully meet the traditional definition of open source.

Impact of Meta's AI Models

Meta's Llama family of AI models has been widely adopted and is used by millions. This openness has downsides, however: at least one U.S. adversary has reportedly used Llama to build a defense-related chatbot.

By spelling out these restrictions in the Frontier AI Framework, Meta aims to set itself apart from companies such as China's DeepSeek, which also makes its AI models freely available but with few safeguards. Meta stresses the importance of balancing innovation with responsible risk management so that AI benefits society while meeting safety standards.

Meta's Stance on AI Safety

The document emphasizes Meta's evolving approach to AI safety, one that strives to maintain openness while taking cautious measures against potentially harmful applications of its technology.

"By assessing both the advantages and possible dangers of AI deployment, our goal is to deliver cutting-edge technology in a manner that maximizes benefits while containing risks to an acceptable level," states the policy.
