Former OpenAI Researcher: AI Safety Experts Are Fleeing the...

OpenAI, the developer of the popular AI assistant ChatGPT, has seen a significant number of its artificial general intelligence (AGI) safety researchers leave, according to a former employee. As reported by Fortune, Daniel Kokotajlo, a former OpenAI governance researcher, said that nearly half of the company's staff dedicated to assessing the long-term risks of superpowerful AI has left in recent months.

Notable Departures

Those who left include researchers Jan Hendrik Kirchner, Collin Burns, Jeffrey Wu, Jonathan Uesato, Steven Bills, Yuri Burda, and Todor Markov, as well as cofounder John Schulman. These departures followed the high-profile May exits of chief scientist Ilya Sutskever and researcher Jan Leike, who jointly led the company's "superalignment" team.

Company Culture

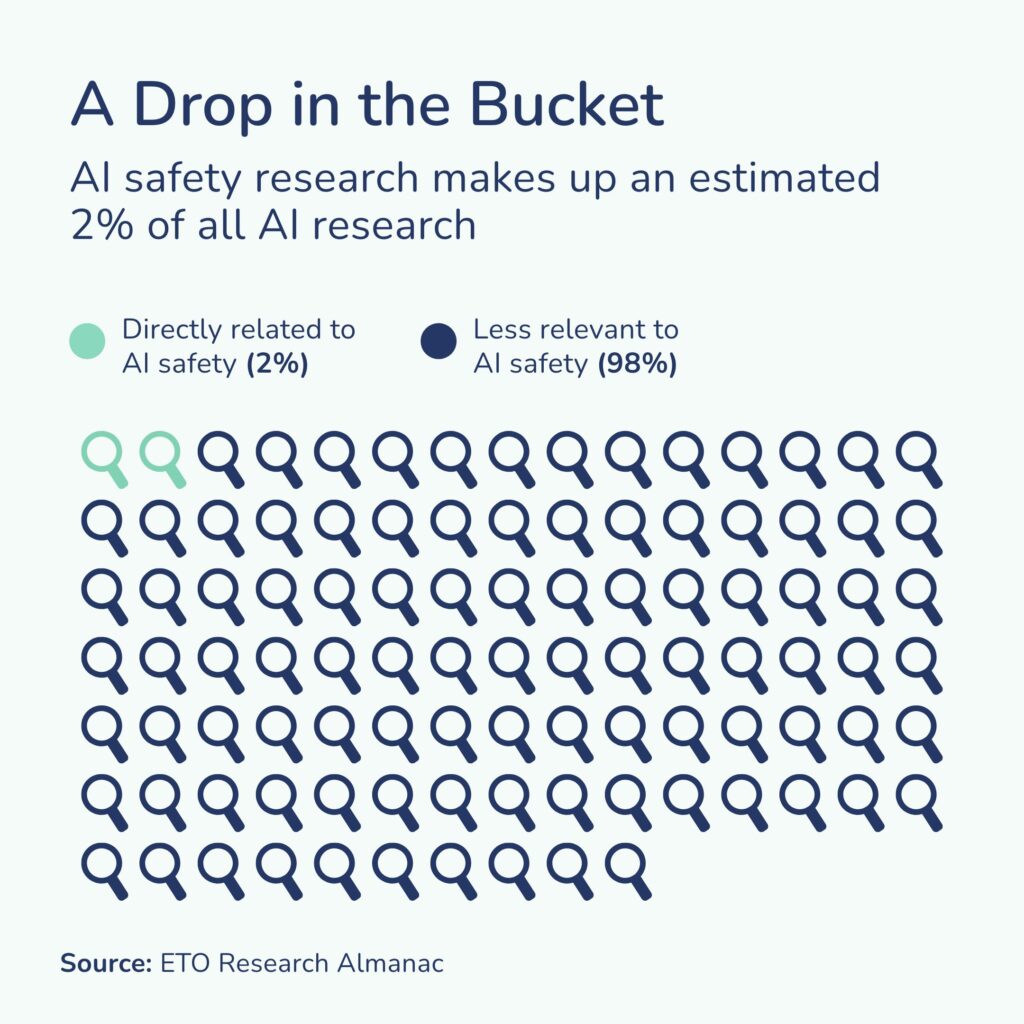

Founded with the mission of developing AGI in a way that benefits humanity, OpenAI has long maintained a sizable research team focused on "AGI safety": methods for ensuring that future AGI systems do not pose catastrophic or existential threats. However, Kokotajlo suggests that the company's priorities have shifted toward product development and commercialization, with less emphasis on research into developing AGI safely.

Employee Exodus

Kokotajlo, who joined OpenAI in 2022 and resigned in April 2024, described a gradual exodus that reduced the number of AGI safety staff from about 30 to just 16. He attributed the departures to researchers losing hope as OpenAI continued to prioritize product development over safety research.

Warnings and Concerns

Kokotajlo expressed disappointment at OpenAI's opposition to California's SB 1047, a bill designed to regulate the development and use of the most powerful AI models. Meanwhile, some of the remaining safety staff have moved to other teams working on similar projects. The company has also established a new safety and security committee and appointed Zico Kolter, a professor at Carnegie Mellon University, to its board of directors.

Competitive Landscape

As competition to develop AGI escalates among major AI companies, Kokotajlo cautioned against groupthink, warning that prevailing opinions and incentives within the organization can lead people to assume that winning the AGI race inherently benefits humanity.

For more information, read the full article on Fortune.