Meta pilots first in-house AI training chip, production already underway

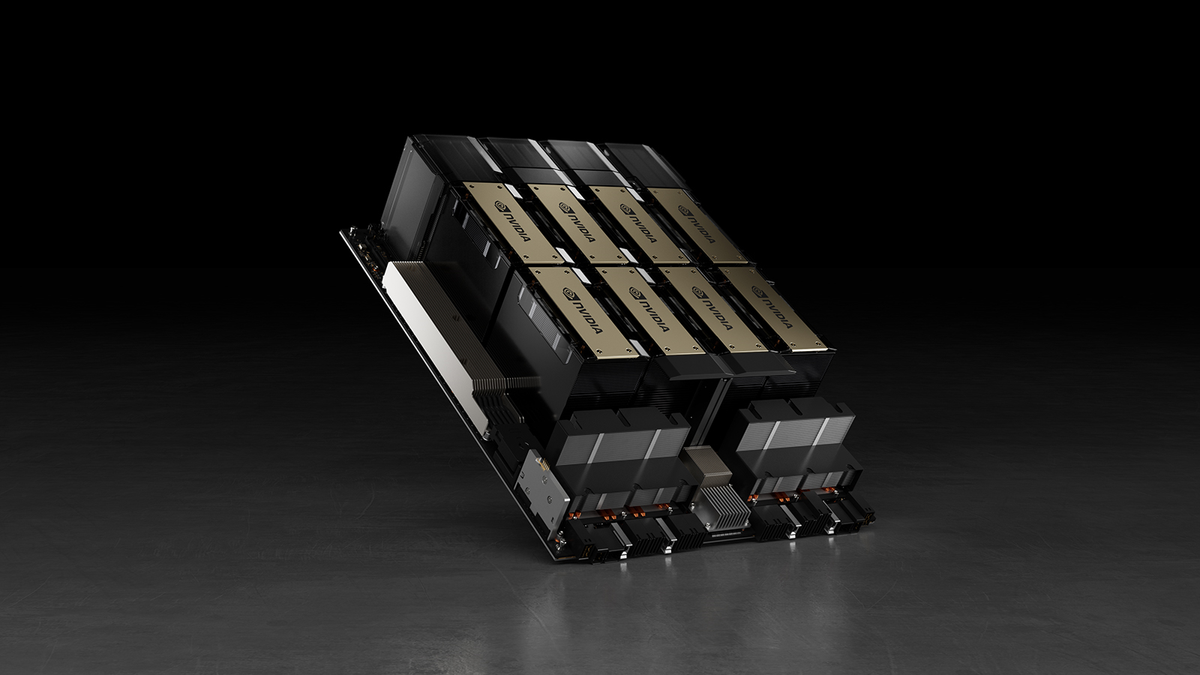

According to Reuters, Meta, the company behind large language models such as Llama, has taken a significant step by beginning to test its own AI training chip. NVIDIA currently dominates the market for AI training chips, so Meta's decision to develop its own hardware is a strategic move to reduce its dependence on NVIDIA.

Meta Tests Its First In-House AI Training Chip

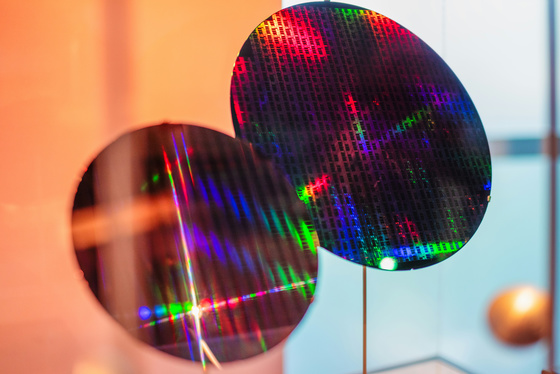

According to Tom's Hardware, Meta is testing its first RISC-V-based chip designed for AI training. The company has been actively developing its own AI chips, including the MTIA (Meta Training and Inference Accelerator), and planned to integrate custom chips into its data centers starting in the second half of 2024.

As reported by GIGAZINE, Meta planned to deploy its proprietary AI processor 'Artemis' in its data centers by the second half of 2024, further underscoring the company's commitment to building its own hardware.

Accelerating AI Tasks with In-House Chips

According to sources who spoke to Reuters, Meta's new in-house accelerator is designed specifically for AI workloads, which could make it more power-efficient than general-purpose GPUs. Meta's current trials are intended to assess the chip's performance ahead of potential large-scale production, and the company plans to expand its use if the tests succeed.

The Future of Meta's AI Accelerators

Tom's Hardware notes that Meta's work with Broadcom and TSMC on RISC-V-based AI accelerators could be a significant milestone for the industry. If Meta can build efficient accelerators that compete with NVIDIA products such as the H200 and B300, it could reduce its reliance on external chip suppliers like NVIDIA.

When contacted by Reuters, both Meta and TSMC declined to comment.