Meta's LLAMA 3.1 Announcement

Meta has released LLAMA 3.1, headlined by a 405-billion-parameter model, its largest model to date. The release comes with updated benchmark results and targets a broad range of users, from hobbyists to large enterprises. Meta highlights two improvements in particular: stronger reasoning and support for zero-shot tool use.

Collection of Pre-trained Models

Alongside the 405B model, Meta has released updated 8B and 70B models as part of its open-source effort. These smaller variants target different use cases and share the same upgrades: better performance, stronger reasoning, and zero-shot tool use. Because the models are openly available, developers can also use their outputs to improve other AI models.

License and Open Source Commitment

Meta reinforces its open-source commitment by releasing the new models under an updated license. The revised license allows developers to use outputs from models such as the 405B to improve and fine-tune other AI systems. With this change, Meta positions LLAMA 3.1 as a source of synthetic training data and as a teacher model for distillation, two techniques it expects to accelerate AI research.
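
To make "distillation" concrete, here is a minimal sketch of the classic soft-target distillation loss, in which a small student model is trained to match a large teacher's output distribution. This is a generic textbook formulation, not Meta's pipeline; the logits below are made up for illustration. Note that when only generated text is available (for example through a hosted API), distillation usually means fine-tuning the student on teacher-written text instead, since raw logits are not exposed.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions,
    scaled by T^2 as in the standard soft-target formulation."""
    p = softmax(teacher_logits, temperature)  # teacher, e.g. a large model
    q = softmax(student_logits, temperature)  # student, e.g. a small model
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

# Hypothetical logits over a 3-token vocabulary.
teacher = [4.0, 1.0, 0.5]
student = [2.0, 1.5, 0.2]
print(distillation_loss(teacher, student))  # positive: student differs from teacher
```

The loss is zero only when the student's softened distribution matches the teacher's; a higher temperature exposes more of the teacher's preferences among less likely tokens.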

Rollout and Broad Usage

Meta has begun rolling out LLAMA 3.1 across its AI products, bringing the model to platforms such as Facebook Messenger, WhatsApp, and Instagram. By making open AI models widely accessible, Meta aims to speed up AI adoption and help tackle major global challenges.

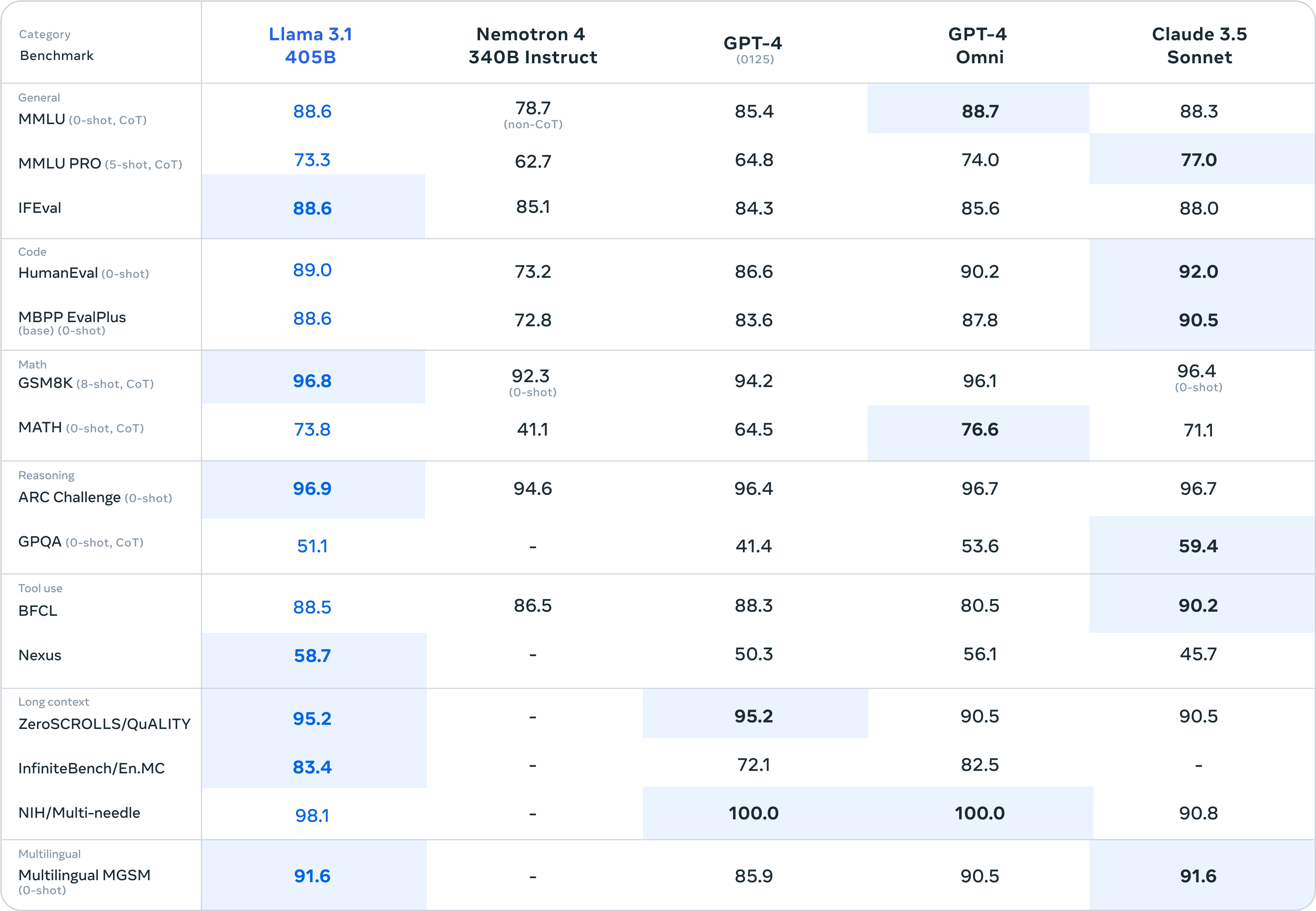

Benchmarks and Comparison

Meta's published benchmarks show LLAMA 3.1 competing closely with leading state-of-the-art models. Meta stresses efficiency in particular: the 405B model is thought to be much smaller than GPT-4 (whose parameter count OpenAI has not disclosed), yet Meta reports that it matches or beats GPT-4 on reasoning benchmarks across several domains.

Multimodal Model Developments

Meta's research also describes experiments that add image, video, and speech capabilities to the LLAMA 3 models. In Meta's reported results, these multimodal variants perform strongly on vision tasks and surpass previous results in some categories. These multimodal models were still under development and were not part of the 3.1 release.

Tool Use and Implementation

LLAMA 3.1 performs well at tool use: Meta's demos show it identifying content in an uploaded CSV file and plotting graphs from the data. Its context window, extended to 128K tokens, also lets it work with longer inputs and carry out more complex, multi-step tasks.
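
The sketch below illustrates the general pattern behind zero-shot tool use: the model emits a structured tool call, and host code parses it and runs the matching function, then feeds the result back to the model. The JSON shape `{"name": ..., "parameters": {...}}`, the tool names `find_rows` and `column_total`, and the sample CSV are all hypothetical and chosen for illustration; this is not LLAMA 3.1's exact prompt or tool-call format, and the plotting step is omitted.

```python
import csv
import io
import json

# Hypothetical CSV data standing in for a file the user uploads.
SALES_CSV = """region,quarter,revenue
EMEA,Q1,120
EMEA,Q2,135
APAC,Q1,90
APAC,Q2,110
"""

def find_rows(column: str, value: str) -> list:
    """Hypothetical tool: return CSV rows where `column` equals `value`."""
    reader = csv.DictReader(io.StringIO(SALES_CSV))
    return [row for row in reader if row.get(column) == value]

def column_total(column: str) -> float:
    """Hypothetical tool: sum a numeric column, e.g. before plotting it."""
    reader = csv.DictReader(io.StringIO(SALES_CSV))
    return sum(float(row[column]) for row in reader)

# Registry mapping tool names (as the model would emit them) to functions.
TOOLS = {"find_rows": find_rows, "column_total": column_total}

def dispatch(model_output: str):
    """Parse a JSON tool call of the assumed form
    {"name": ..., "parameters": {...}} and run the matching tool."""
    call = json.loads(model_output)
    tool = TOOLS.get(call["name"])
    if tool is None:
        raise ValueError(f"unknown tool: {call['name']}")
    return tool(**call["parameters"])

# Pretend the model produced this call in response to "Show me APAC sales".
rows = dispatch('{"name": "find_rows", "parameters": {"column": "region", "value": "APAC"}}')
print(rows)
```

Keeping tools in an explicit registry means the host only ever runs functions it has chosen to expose, and an unrecognized tool name fails loudly instead of executing arbitrary code.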

Future Improvements and Accessibility

Meta also hints that significant further advances are coming, positioning LLAMA 3 as a stepping stone toward future models. The accompanying video emphasizes how easy LLAMA 3.1 is to access across regions and platforms, including through Groq for users in the UK.

FAQ

Frequently asked questions about Meta's LLAMA 3.1 model:

- Q: What is the name of the newest model released by Meta?

- A: The newest model released by Meta is LLAMA 3.1.

- Q: How many parameters does the LLAMA 3.1 model have?

- A: The largest LLAMA 3.1 model has 405 billion parameters; smaller 8B and 70B versions are also available.

- Q: What are some of the improvements and capabilities of the LLAMA 3.1 model?

- A: The LLAMA 3.1 model offers better performance and stronger reasoning, and it supports zero-shot tool use.

- Q: How does Meta show its commitment to open source in relation to the new models?

- A: Meta releases the new models under a revised license that lets developers use model outputs to improve other AI systems.

- Q: What does the LLAMA 3.1 model aim to drive in terms of AI research?

- A: The LLAMA 3.1 model aims to drive synthetic data generation and distillation to advance AI research.

- Q: What capabilities does LLAMA 3 demonstrate in handling tasks like identifying content in CSV files?

- A: LLAMA 3 shows strong tool-use capabilities, such as identifying content in CSV files and plotting graphs from the data.