The recently released reasoning AI models 'o3' and 'o4-mini' from ChatGPT developer OpenAI have been found to hallucinate more than earlier models.

The latest reasoning AI models released by ChatGPT developer OpenAI, 'o3' and 'o4-mini', have been found to hallucinate more severely than their predecessors. Hallucination here refers to a generative AI service giving responses that are not factual or that do not fit the context. According to a TechCrunch report on the 19th, OpenAI found that o3 hallucinated in response to 33% of questions on its PersonQA benchmark, a significant increase over previous models.

Increased Hallucination Rates

Compared with OpenAI's previous models, o3 and o4-mini showed higher hallucination rates. The o3 model registered a 33% hallucination rate, roughly double the 16% and 14.8% recorded by its predecessors o1 and o3-mini, respectively. The o4-mini model performed worst, with a 48% hallucination rate, exceeding even that of GPT-4o, a conventional OpenAI model without reasoning capabilities.

Enhanced Features and Capabilities

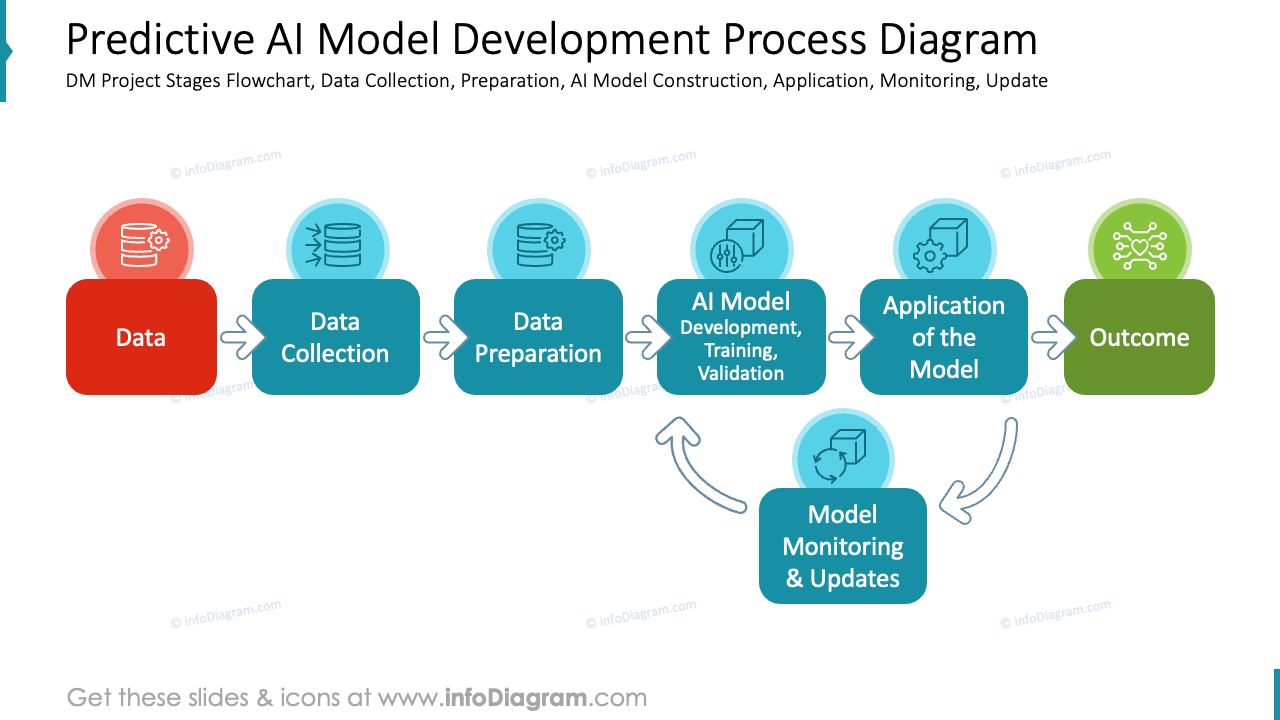

The o3 and o4-mini models, launched on the 16th, were promoted as OpenAI's first reasoning models able to think with images. They were designed not only to interpret images but also to incorporate visual information directly into the reasoning process. The models can analyze and respond to user-uploaded images, diagrams, and whiteboard drawings, and can handle low-quality or blurred visuals.

Technical Advancements and Challenges

The o3 and o4-mini models also show strong coding capabilities, scoring 69.1% and 68.1% respectively on the SWE-bench Verified benchmark. However, their higher hallucination rates have raised concerns within the industry. OpenAI has acknowledged the issue but has yet to pinpoint why hallucinations are getting worse, and says further research is needed.

Future Implications and Industry Concerns

Industry experts worry that persistent hallucination could undermine the credibility of reasoning models, particularly in fields where accuracy is paramount, such as tax, accounting, and law. As major AI companies shift their focus toward developing reasoning models, concerns about reliability and accuracy persist. OpenAI says it is continuing to work on reducing hallucinations and improving overall performance and dependability.