Building with Gemini — Part 3 — Routing | Google Cloud - Community

In part 1 of this series, we built a simple product recommendation bot using Gemini. In part 2, we talked about voice input and output. Now, in part 3, we look at routing: splitting the bot into specialized agents and directing each user message to the right one.

Enhancing the Bot

After the initial setup in part 1, our system was centered around an LLM-based agent handling user questions. However, what if we are not satisfied with the agent's responses? In such cases, we have options to tweak the system:

- Prompt/System Instruction: Modifying system instructions and prompts to guide the agent.

- Numeric Parameters: Adjusting parameters like max output tokens, temperature, top-K, top-P, and seed for tailored responses.

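These settings let us tune the bot's behavior without retraining the model: the system instruction shapes what the agent says, while the numeric parameters control response length and how deterministic or varied the output is.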
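As a rough illustration, the numeric parameters can be gathered into one configuration object per use case. The sketch below uses a plain dataclass rather than a real SDK class: the name `GenerationSettings`, the default values, and the range checks are assumptions made for illustration, and the exact field names and valid ranges depend on the SDK and model version in use.

```python
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass(frozen=True)
class GenerationSettings:
    """Hypothetical container for the numeric parameters listed above."""

    max_output_tokens: int = 256
    temperature: float = 0.7
    top_k: int = 40
    top_p: float = 0.95
    seed: Optional[int] = None  # None means "do not fix the seed"

    def __post_init__(self) -> None:
        # Illustrative sanity checks; real limits are model-specific.
        if self.max_output_tokens <= 0:
            raise ValueError("max_output_tokens must be positive")
        if self.temperature < 0:
            raise ValueError("temperature must be non-negative")
        if self.top_k <= 0:
            raise ValueError("top_k must be positive")
        if not 0 < self.top_p <= 1:
            raise ValueError("top_p must be in (0, 1]")

    def to_dict(self) -> dict:
        # Drop unset values so only explicit settings are sent along.
        return {k: v for k, v in asdict(self).items() if v is not None}


# Lower temperature for factual answers, higher for casual conversation.
factual = GenerationSettings(temperature=0.2, seed=42)
chatty = GenerationSettings(temperature=0.9, max_output_tokens=128)
print(factual.to_dict())
print(chatty.to_dict())
```

Keeping the settings in one immutable object makes it easy to compare two configurations side by side when experimenting with the agent's responses.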

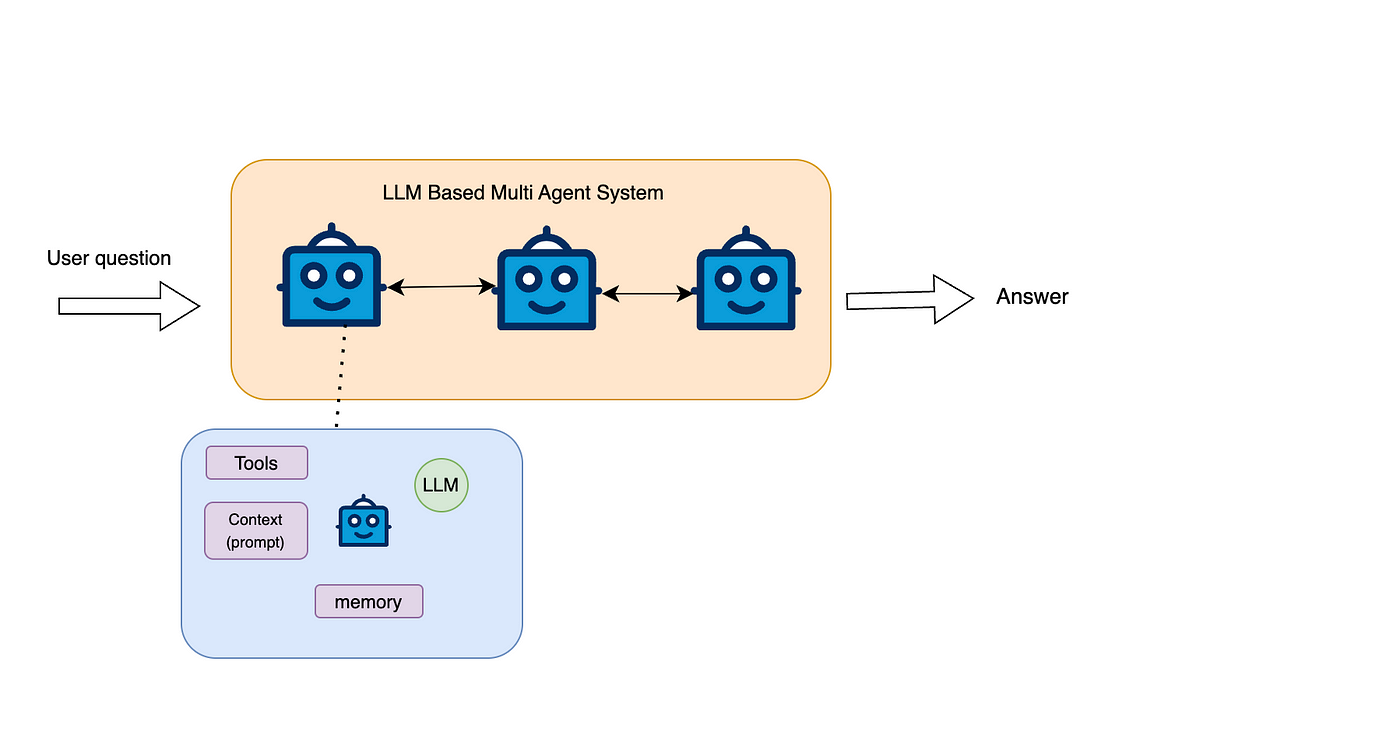

Implementing Multi-Agent Systems

A single set of instructions and parameters rarely suits every kind of request. In a bot like our wine recommender, different functions have different requirements, so a multi-agent system can help: several LLM-based agents, each with instructions and parameters tuned for one specific task.

A key strategy for managing these agents is the routing pattern: a router component analyzes the user's message and the chat history, then directs the query to the appropriate agent.
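The routing pattern can be sketched in a few lines. This is a minimal outline under stated assumptions, not the implementation used in this series: the `Router` class, the two stub agents, and the keyword-based `keyword_chooser` are all hypothetical, and the chooser merely stands in for whatever decision logic the router actually uses.

```python
from typing import Callable, Dict, List

# An agent receives the chat history and the new message and returns a reply.
Agent = Callable[[List[str], str], str]
# A route chooser receives the same inputs and returns a route name.
RouteChooser = Callable[[List[str], str], str]


class Router:
    """Picks one agent per message; all names here are illustrative."""

    def __init__(self, agents: Dict[str, Agent], choose_route: RouteChooser,
                 default_route: str) -> None:
        if default_route not in agents:
            raise ValueError(f"unknown default route: {default_route!r}")
        self.agents = agents
        self.choose_route = choose_route
        self.default_route = default_route

    def handle(self, history: List[str], message: str) -> str:
        route = self.choose_route(history, message)
        # Fall back to the default agent if the chooser names an unknown route.
        agent = self.agents.get(route, self.agents[self.default_route])
        return agent(history, message)


def catalog_agent(history: List[str], message: str) -> str:
    return f"[catalog] handling: {message}"


def small_talk_agent(history: List[str], message: str) -> str:
    return f"[small_talk] handling: {message}"


def keyword_chooser(history: List[str], message: str) -> str:
    # Placeholder decision logic so the sketch runs without a model.
    return "catalog" if "wine" in message.lower() else "small_talk"


router = Router(
    agents={"catalog": catalog_agent, "small_talk": small_talk_agent},
    choose_route=keyword_chooser,
    default_route="small_talk",
)
print(router.handle([], "Which wine goes with salmon?"))
```

Because the chooser is passed in as a function, the decision logic can be swapped out without touching the agents or the dispatch code.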

Building the Router

Traditionally, conversation routing relied on text classifiers such as Naive Bayes and support vector machines (SVMs). These methods are effective, but using an LLM as the router can improve accuracy and flexibility.
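For comparison, the traditional approach can be sketched as a small text classifier trained on labeled messages. The example below is a toy multinomial Naive Bayes with add-one smoothing written in plain Python; the class name `NaiveBayesRouter`, the route labels, and the four training sentences are invented for illustration, and a real system would use far more data and typically an established library.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List, Set, Tuple


class NaiveBayesRouter:
    """Toy multinomial Naive Bayes with add-one smoothing (illustration only)."""

    def __init__(self, examples: List[Tuple[str, str]]) -> None:
        self.route_counts: Counter = Counter()
        self.word_counts: Dict[str, Counter] = defaultdict(Counter)
        self.vocab: Set[str] = set()
        for text, route in examples:
            self.route_counts[route] += 1
            for word in text.lower().split():
                self.word_counts[route][word] += 1
                self.vocab.add(word)

    def predict(self, message: str) -> str:
        total = sum(self.route_counts.values())
        scores: Dict[str, float] = {}
        for route, count in self.route_counts.items():
            # Start from the log prior of the route.
            score = math.log(count / total)
            denom = sum(self.word_counts[route].values()) + len(self.vocab)
            for word in message.lower().split():
                if word in self.vocab:  # words never seen in training are ignored
                    score += math.log((self.word_counts[route][word] + 1) / denom)
            scores[route] = score
        return max(scores, key=scores.get)


# Made-up training data: (message, route label).
training = [
    ("hello how are you", "small_talk"),
    ("hi there good morning", "small_talk"),
    ("recommend a red wine from the catalog", "catalog"),
    ("which wine do you have in stock", "catalog"),
]
classifier = NaiveBayesRouter(training)
print(classifier.predict("recommend a wine"))
```

A classifier like this looks only at the words of the current message and needs labeled examples for every route, which is part of what an LLM-based router aims to improve on.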

An LLM-based router can take the context of the conversation into account. We present the available routes to the LLM and let it choose the appropriate one based on the dialogue history.
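One way to picture this step is as a prompt that lists the routes and the dialogue so far, followed by a parser for the model's answer. The sketch below is an assumption-laden outline: the prompt wording, the helper names (`build_routing_prompt`, `parse_route`, `choose_route`), and the route names are hypothetical, and `call_llm` is a placeholder for whatever function actually sends the prompt to Gemini.

```python
from typing import Callable, Dict, List


def build_routing_prompt(routes: Dict[str, str], history: List[str],
                         message: str) -> str:
    """List the routes and the dialogue, then ask for a single route name."""
    route_lines = "\n".join(f"- {name}: {desc}" for name, desc in routes.items())
    history_text = "\n".join(history) if history else "(no previous messages)"
    return (
        "Choose the best route for the latest user message.\n"
        f"Routes:\n{route_lines}\n\n"
        f"Conversation so far:\n{history_text}\n\n"
        f"Latest user message: {message}\n"
        "Answer with the route name only."
    )


def parse_route(raw_reply: str, routes: Dict[str, str], fallback: str) -> str:
    """Normalize the model's reply; use the fallback if it names no known route."""
    candidate = raw_reply.strip().strip("`'\".").lower()
    return candidate if candidate in routes else fallback


def choose_route(call_llm: Callable[[str], str], routes: Dict[str, str],
                 history: List[str], message: str, fallback: str) -> str:
    prompt = build_routing_prompt(routes, history, message)
    return parse_route(call_llm(prompt), routes, fallback)


# Hypothetical routes and a fake model so the sketch runs offline.
example_routes = {
    "catalog": "The user wants a wine recommended from the catalog.",
    "small_talk": "Greetings and casual conversation.",
}


def fake_llm(prompt: str) -> str:
    return " Small_Talk.\n"  # stands in for a real model response


print(choose_route(fake_llm, example_routes, ["User: hi"], "How are you?",
                   fallback="small_talk"))
```

Validating the reply against the known route names matters because the model returns free text; anything unexpected is sent to a fallback route rather than failing.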

For our wine recommendation bot, we can define routes such as catalog recommendations, small talk, general wine inquiries, and expert wine advice. Each route corresponds to a specific LLM agent, so each kind of interaction gets responses from an agent set up for it.
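The four routes could be written down as a small table that pairs each route with the settings of its agent. Everything in this sketch is an assumption for illustration: the route identifiers, the descriptions, the system instructions, and the temperature values are invented, not taken from the bot built in this series.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Route:
    description: str         # shown to the router when it picks a route
    system_instruction: str  # given to the agent that serves the route
    temperature: float       # illustrative per-agent setting


ROUTES: Dict[str, Route] = {
    "catalog_recommendation": Route(
        description="The user wants a wine suggested from our catalog.",
        system_instruction="Recommend only wines that appear in the catalog.",
        temperature=0.2,
    ),
    "small_talk": Route(
        description="Greetings and casual conversation.",
        system_instruction="Reply briefly and in a friendly tone.",
        temperature=0.9,
    ),
    "general_wine_inquiry": Route(
        description="General questions about wine.",
        system_instruction="Answer general wine questions clearly.",
        temperature=0.4,
    ),
    "expert_wine_advice": Route(
        description="Detailed questions that need expert-level advice.",
        system_instruction="Give detailed, expert-level wine advice.",
        temperature=0.3,
    ),
}


def describe_routes(routes: Dict[str, Route]) -> str:
    """Render one 'name: description' line per route for the router."""
    return "\n".join(f"{name}: {route.description}" for name, route in routes.items())


print(describe_routes(ROUTES))
```

Keeping the description next to the agent settings means that adding a route is a single entry in the table: the router sees the new description, and the new agent gets its own instruction and parameters.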

With an LLM as the router, one bot can handle these different kinds of conversation while each agent stays focused on a single task.