Patronus AI | Powerful AI Evaluation and Optimization

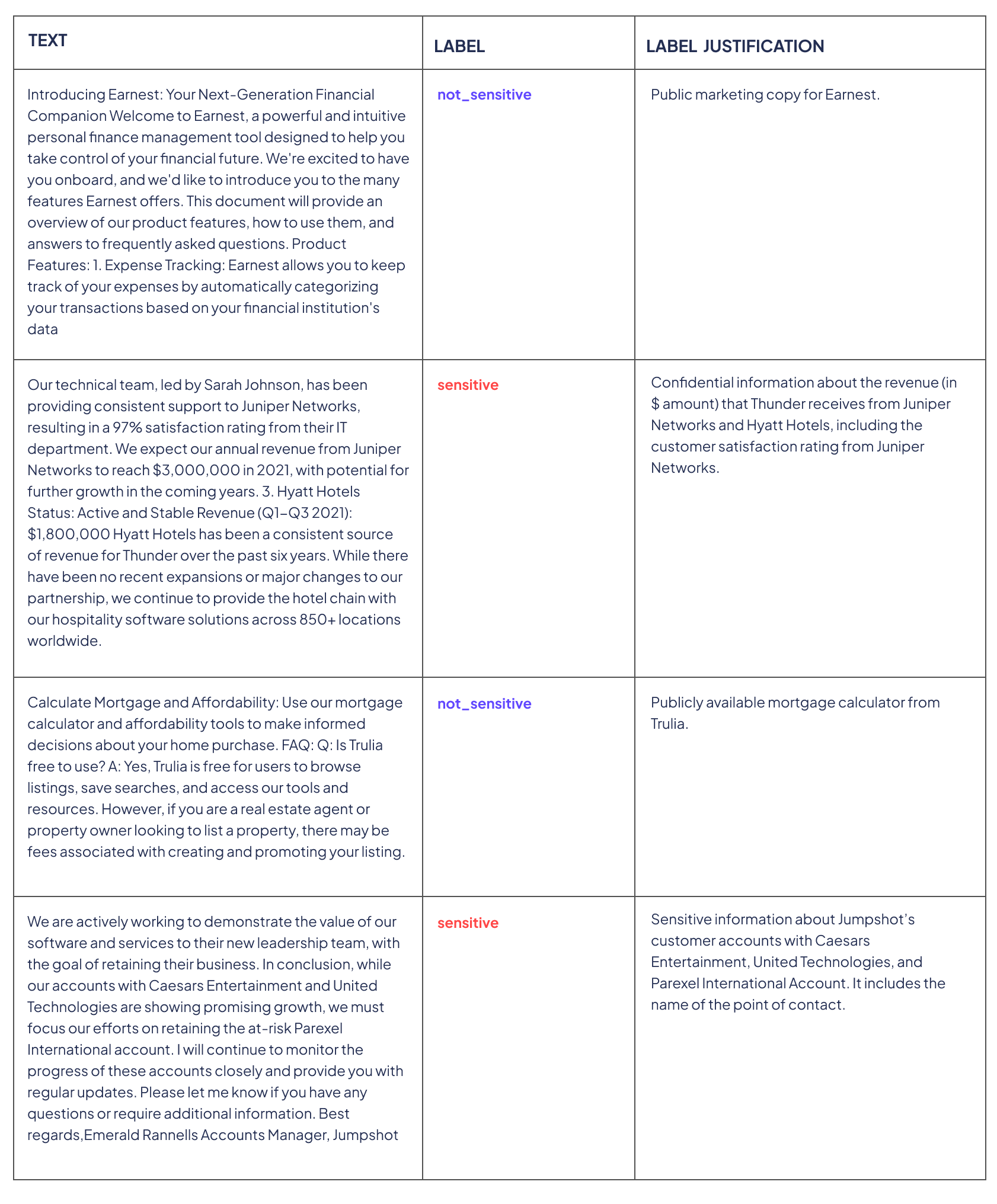

Use the Patronus API in any tech stack, adopting Patronus from day zero and never looking back. Gain access to industry-leading evaluation models designed to score RAG hallucinations, image relevance, context quality, and more across a variety of use cases. Measure and automatically optimize AI product performance against evaluation datasets.

Key Features of Patronus AI:

- Continuously capture evals in production, with auto-generated natural-language explanations and proactively highlighted failures

- Compare, visualize, and benchmark LLMs, RAG systems, and agents side by side across experiments

- Leverage industry-standard datasets and benchmarks such as FinanceBench, EnterprisePII, and SimpleSafetyTests, each designed for a specific domain

- Automatically detect agent failures across 15 error modes, chat with your traces, and auto-generate trace summaries

The Patronus team has been testing LLMs since before the generative AI boom, and its state-of-the-art approach is 18% better at detecting hallucinations than evaluators based on OpenAI LLMs. Its off-the-shelf evaluators cover areas such as toxicity and PII leakage, while its custom evaluators handle criteria like brand alignment.

Benefits of Using Patronus AI:

- Real-time evaluation with fast API response times (as low as 100ms)

- Easy integration with a single line of code

- Cloud-hosted solution for hassle-free server management

- On-premises offering available for customers with strict data privacy needs

- Third-party security vetting done yearly

Patronus offers an SLA guaranteeing 90% alignment between its evaluators and human evaluators. Its customers include OpenAI, HP, and Pearson, and its partners include AWS, Databricks, and MongoDB.
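The text does not say how "alignment" with human evaluators is measured. One common reading is simple percent agreement: the share of examples on which an automated evaluator's pass/fail verdict matches a human grader's. The sketch below assumes that definition; the `percent_agreement` function and the sample labels are hypothetical and are not Patronus's documented metric.

```python
def percent_agreement(evaluator_labels: list[bool], human_labels: list[bool]) -> float:
    """Fraction of examples where the automated evaluator and the human grader agree.

    Assumption: "alignment" means simple percent agreement on binary pass/fail
    verdicts. This is an illustrative definition, not a vendor-specified metric.
    """
    if len(evaluator_labels) != len(human_labels):
        raise ValueError("label lists must be the same length")
    if not evaluator_labels:
        raise ValueError("need at least one labeled example")
    # Count positions where both graders gave the same verdict.
    matches = sum(e == h for e, h in zip(evaluator_labels, human_labels))
    return matches / len(evaluator_labels)


# Hypothetical verdicts on ten examples (True = output judged acceptable).
evaluator = [True, True, False, True, False, True, True, True, False, True]
human = [True, True, False, True, True, True, True, True, False, True]

score = percent_agreement(evaluator, human)
print(f"alignment: {score:.0%}")
print("meets 90% target:", score >= 0.90)
```

Percent agreement does not correct for agreement that happens by chance; a chance-corrected statistic such as Cohen's kappa is a stricter alternative when one verdict dominates the data.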

Testimonials about Patronus AI:

- "Evaluating LLMs is multifaceted and complex. LLM developers and users alike will benefit from the unbiased, independent perspective Patronus provides."

- "Engineers spend a ton of time manually creating tests and grading outputs. Patronus assists with all of this and identifies exactly where LLMs break in real world scenarios."

As industry pioneers, Patronus AI focuses on helping companies build trust in their generative AI products, so that the people who use those products can trust them too. Its approach centers on finding vulnerabilities in LLM systems, giving companies in this space a comprehensive evaluation solution.

Partnering with Patronus AI:

For organizations looking to bring the AI stack closer to enterprise data and to offer best-in-class tools for training and deploying AI solutions, Patronus AI is an ideal partner. Its straightforward API makes it easy to evaluate LLM issues effectively and to mitigate problems such as content toxicity and PII leakage.

AI won't take your job, but it will change your job description. Safety and security in the workplace are crucial to being AI-ready, and Patronus AI aims to help ensure them. With Patronus, you get the most reliable way to score your LLM system in both development and production.

State-of-the-art evaluation models are at your fingertips, designed to help AI engineers iterate at scale on AI-native workflows such as RAG systems and agents.

Use the SDK to configure custom evaluators for specific capabilities such as function calling and tool use.
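The SDK's actual interface is not described here, so the sketch below deliberately does not use it. It only illustrates, in plain Python, the kind of check a custom tool-use evaluator might perform: comparing the function call a model emitted against the expected function name and arguments, and returning a pass/fail verdict, a score, and a short explanation. The `ToolCall` and `EvalResult` types and the `evaluate_tool_call` function are hypothetical.

```python
import json
from dataclasses import dataclass, field


@dataclass
class ToolCall:
    """A function call emitted by a model (hypothetical shape, not an SDK type)."""
    name: str
    arguments: dict = field(default_factory=dict)


@dataclass
class EvalResult:
    """Hypothetical evaluator output: verdict, score in [0, 1], and explanation."""
    passed: bool
    score: float
    explanation: str


def evaluate_tool_call(actual: ToolCall, expected: ToolCall) -> EvalResult:
    """Fail outright on a wrong function name; otherwise score the fraction of
    expected arguments that are present with exactly the expected value."""
    if actual.name != expected.name:
        return EvalResult(False, 0.0, f"called {actual.name!r}, expected {expected.name!r}")
    if not expected.arguments:
        return EvalResult(True, 1.0, "correct function, no arguments expected")
    matched = [
        k for k, v in expected.arguments.items()
        if k in actual.arguments and actual.arguments[k] == v
    ]
    missing = sorted(set(expected.arguments) - set(matched))
    score = len(matched) / len(expected.arguments)
    explanation = "all arguments match" if not missing else f"mismatched or missing: {', '.join(missing)}"
    return EvalResult(score == 1.0, score, explanation)


# Example: parse the model's raw JSON tool call, then score it.
raw = '{"name": "get_weather", "arguments": {"city": "Paris", "unit": "F"}}'
parsed = json.loads(raw)
actual = ToolCall(parsed["name"], parsed["arguments"])
expected = ToolCall("get_weather", {"city": "Paris", "unit": "C"})

result = evaluate_tool_call(actual, expected)
print(result)
```

This sketch ignores extra arguments the model adds beyond the expected ones; a stricter evaluator might penalize them, or compare argument values with type-aware or fuzzy matching instead of exact equality.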