How Claude 3.7's new 'extended' thinking compares to ChatGPT ...

Anthropic recently released a new model, Claude 3.7 Sonnet, and its "extended" mode caught my attention. It reminded me of how OpenAI first introduced the o1 model in ChatGPT: while using ChatGPT 4o, you could type "/reason" and the chatbot would hand the prompt to o1. That shortcut is somewhat redundant now, but it still works in the app. Both models promise enhanced, structured reasoning, so I wanted to compare them.

Extended Mode in Claude 3.7

Claude 3.7's Extended mode is a hybrid reasoning feature: the same model can give quick, conversational replies or work through a problem step by step. In Extended mode, it examines the prompt carefully before answering, which suits tasks involving mathematics, coding, and logic. Users can trade speed for depth by limiting how much the model may think before it responds (developers set this limit as a token budget through the API). Anthropic positions the feature as a way to make AI more practical for real-world work that needs layered, methodical problem-solving rather than surface-level answers.

Exploring the Monty Hall Problem

To try out Extended thinking, I asked Claude 3.7 to analyze and explain the well-known Monty Hall Problem, a famously counterintuitive probability puzzle. You pick one of three doors; one hides a car and the other two hide goats (or, in this case, crabs). The host, who knows what is behind each door, then opens one of the two remaining doors to reveal a goat (or crab). You must then decide whether to stick with your first choice or switch to the other unopened door. Contrary to most people's intuition, switching wins with probability 2/3, while sticking wins only 1/3 of the time: your first pick is right 1/3 of the time, and in every other case the host's reveal leaves the car behind the one door you could switch to.
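For readers who want to check the 2/3 figure themselves, here is a minimal simulation sketch. It is not taken from either chatbot's output; the function names `play_round` and `win_rate`, the trial count, and the seed are all illustrative choices, and the host is assumed to pick at random when two goat doors are available.

```python
import random


def play_round(switch: bool, rng: random.Random) -> bool:
    """Play one Monty Hall round; return True if the player wins the car."""
    doors = [0, 1, 2]
    car = rng.choice(doors)
    pick = rng.choice(doors)
    # The host opens a door that is neither the player's pick nor the car.
    opened = rng.choice([d for d in doors if d != pick and d != car])
    if switch:
        # Move to the single door that is neither picked nor opened.
        pick = next(d for d in doors if d != pick and d != opened)
    return pick == car


def win_rate(switch: bool, trials: int = 100_000, seed: int = 0) -> float:
    """Estimate the win probability of a strategy over many rounds."""
    rng = random.Random(seed)
    wins = sum(play_round(switch, rng) for _ in range(trials))
    return wins / trials


if __name__ == "__main__":
    print(f"stick:  {win_rate(switch=False):.3f}")
    print(f"switch: {win_rate(switch=True):.3f}")
```

With enough trials, the two printed rates should land close to 1/3 for sticking and 2/3 for switching.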

With Extended thinking enabled, Claude 3.7 explained the problem in a careful, academic manner, breaking the logic into multiple steps and emphasizing why the probabilities shift once the host reveals a door. The AI didn't just give the correct answer; it walked through hypothetical scenarios that made it easier to see why switching is the better strategy. The response felt like being guided by a patient professor who wants to be sure you understand why the usual intuition is wrong.
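The scenario-by-scenario style of explanation can also be written out exactly. The sketch below is my own illustration, not a reproduction of Claude's answer: it lists every combination of car position and first pick, weights each equally, and assumes the host flips a fair coin when two goat doors are available. The name `exact_win_probability` is hypothetical.

```python
from fractions import Fraction
from itertools import product


def exact_win_probability(switch: bool) -> Fraction:
    """Sum the probability of winning over every possible scenario."""
    doors = (0, 1, 2)
    total = Fraction(0)
    # Car position and first pick are independent and uniform: 9 equal cases.
    for car, pick in product(doors, repeat=2):
        weight = Fraction(1, 9)
        # Doors the host may open: not the player's pick and not the car.
        host_options = [d for d in doors if d != pick and d != car]
        for opened in host_options:
            # Assumption: the host chooses uniformly among allowed doors.
            branch = weight / len(host_options)
            final = pick
            if switch:
                final = next(d for d in doors if d != pick and d != opened)
            if final == car:
                total += branch
    return total


if __name__ == "__main__":
    print("stick: ", exact_win_probability(switch=False))  # prints 1/3
    print("switch:", exact_win_probability(switch=True))   # prints 2/3
```

Sticking wins only in the three cases where the first pick was already the car; switching wins in the other six, which is where the 2/3 comes from.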

Comparison with ChatGPT o1

ChatGPT o1 also gave a detailed breakdown of the Monty Hall Problem, covering basic probability, game theory, narrative perspectives, psychological insights, and economic analysis. However, that much information could be overwhelming for some users.

Interactive Features

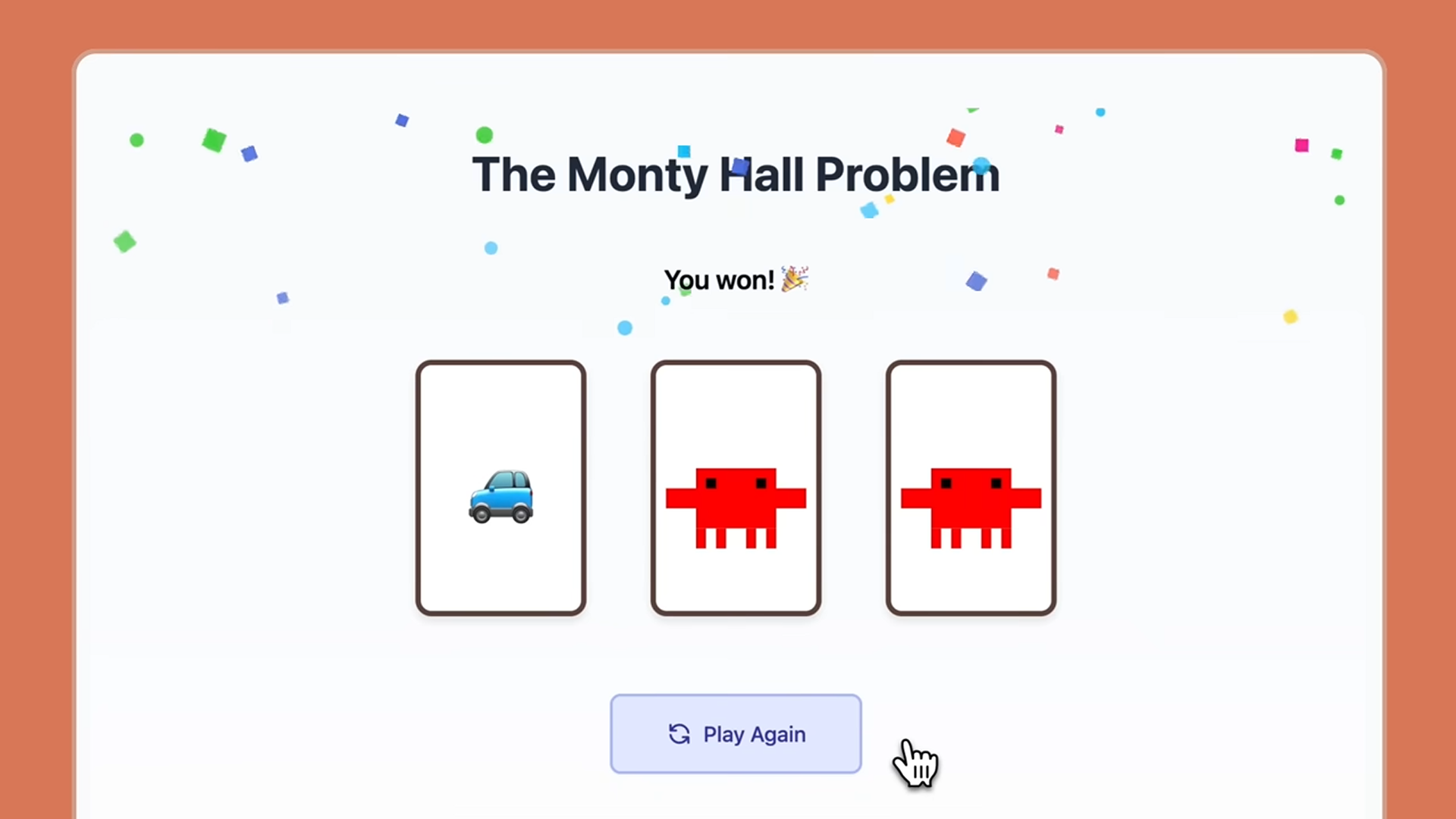

Claude's Extended thinking showcased its versatility by transforming the Monty Hall Problem into an interactive game within the chat, offering a hands-on experience for users. In contrast, ChatGPT o1 generated an HTML script for simulating the problem, which required additional steps to access.

Final Thoughts

Performance may differ slightly depending on the task, but both Claude's Extended thinking and ChatGPT's o1 model excel at analytical approaches to logical problems. Claude's adjustable depth and duration of reasoning gives it a distinct advantage. Whether you want rapid responses or in-depth analysis, both models offer valuable insights and solutions.