How ChatGPT Improves Learning Outcomes in Schools: Wang & Fan Study

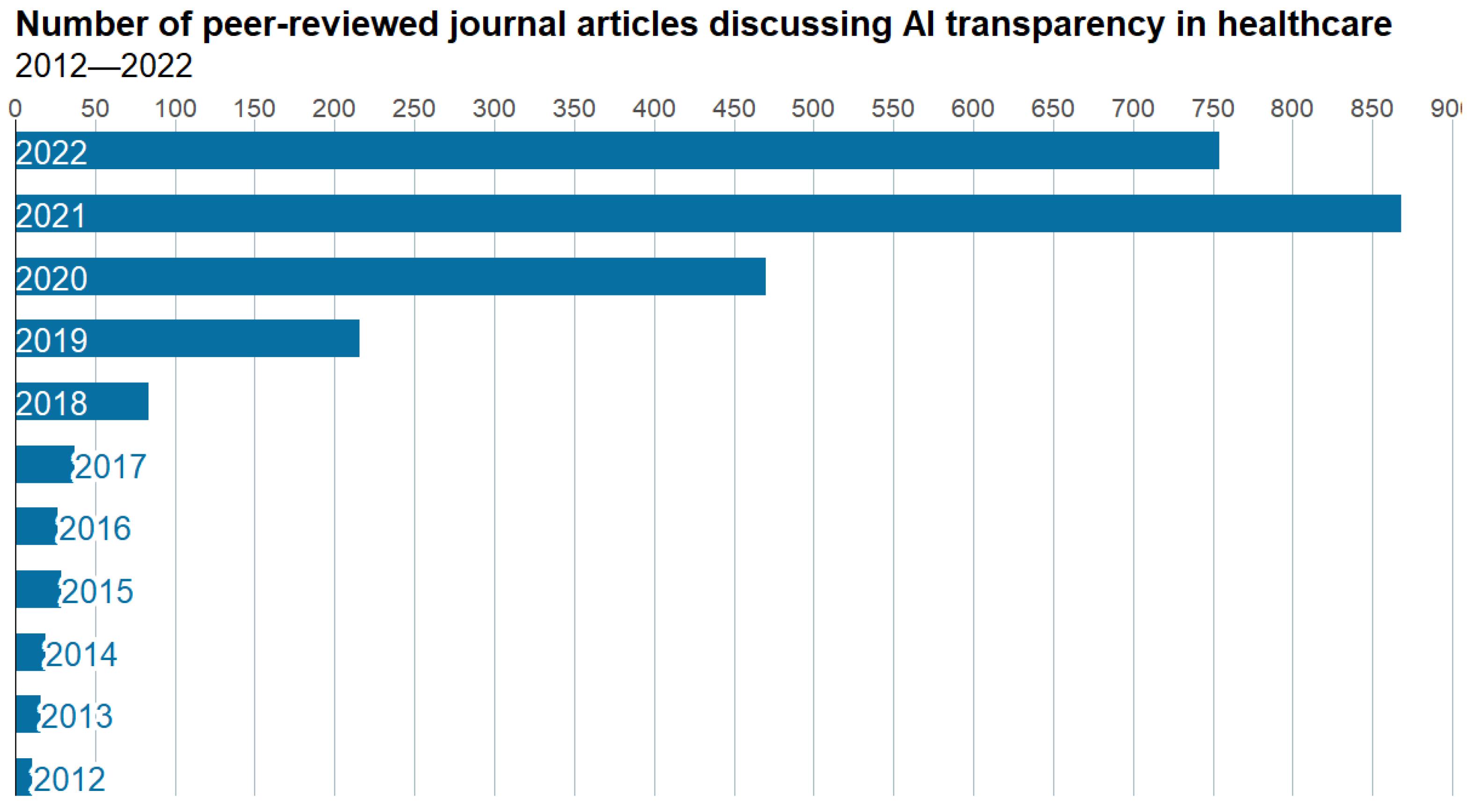

Remember the drama around the Microsoft and Carnegie Mellon study, which made headlines claiming AI was making us dumb? A new meta-analysis by researchers Jin Wang and Wenxiang Fan, who analyzed 51 studies on ChatGPT in education, seems to contradict the fear-mongering headlines. Published in May 2025, it could mark a major shift in how schools approach AI. Rather than asking whether AI should be used in classrooms, the question now is how to use it effectively.

Addressing the Concerns

The study by Wang & Fan, published in Humanities and Social Sciences Communications, a Nature Portfolio journal, directly addresses the rising interest in AI tools. It also tackles concerns about screen time, equity, and learning quality.

However, not everybody agrees with the study's conclusions. Ilkka Tuomi, Chief Scientist at Meaning Processing Ltd., for instance, has serious doubts.

Critical Voices

According to Tuomi, the study’s review process reduces thousands of articles to just 51, using keyword filters like ‘ChatGPT’ and ‘educ*’ – without clear controls on study quality or population consistency.

His critique isn’t just about one study – it’s about how we evaluate evidence in an era of fast science and faster headlines.

The Statistical Metrics

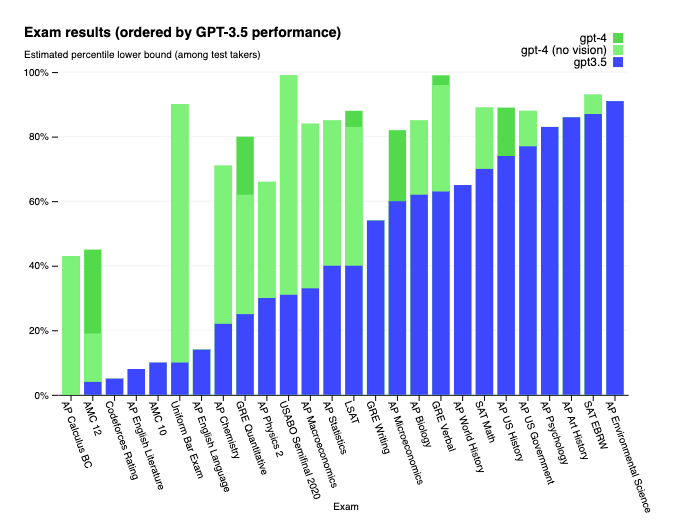

Wang & Fan (2025) use Hedges’ g – a common statistical metric in education research – to calculate effect sizes across 51 studies evaluating ChatGPT’s impact on student outcomes.

In theory, this metric allows researchers to:

- Assess the effectiveness of interventions

- Compare outcomes across different studies

- Standardize results for better understanding
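To make the metric concrete, here is a minimal sketch of how Hedges' g is commonly computed for two independent groups: a standardized mean difference using the pooled standard deviation, multiplied by a small-sample correction factor. The function name `hedges_g` and the example numbers are illustrative assumptions, not values or code from Wang & Fan's study, and the correction uses the common approximation J = 1 - 3 / (4·df - 1).

```python
import math


def hedges_g(mean_t, mean_c, sd_t, sd_c, n_t, n_c):
    """Hedges' g for two independent groups (hypothetical helper).

    mean_t, sd_t, n_t: mean, standard deviation, and size of the treatment group
    mean_c, sd_c, n_c: the same for the control group
    """
    df = n_t + n_c - 2
    # Pooled standard deviation across both groups
    pooled_sd = math.sqrt(((n_t - 1) * sd_t**2 + (n_c - 1) * sd_c**2) / df)
    # Cohen's d: raw standardized mean difference
    d = (mean_t - mean_c) / pooled_sd
    # Small-sample correction factor (common approximation)
    j = 1 - 3 / (4 * df - 1)
    return j * d


# Made-up example: a class using an AI tool scores 78 on average,
# a control class scores 72, both with SD 10 and 30 students each.
g = hedges_g(78, 72, 10, 10, 30, 30)
print(round(g, 3))
```

Because g is expressed in standard-deviation units, results from studies with different tests and grading scales can be placed on one scale. That is also why the quality and comparability of the underlying studies matter so much.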

Challenges and Limitations

An impressive effect size is meaningless if it is based on flawed or incomparable inputs. The lack of consistency across studies may reflect cherry-picked effects or publication bias rather than genuine insight.

Educators, policymakers, and researchers should not take the reported results at face value. Instead, they must demand higher standards in AI-in-education research – where transparency, theory, and replicability matter as much as effect size.
