Using OpenAI's o3-mini Reasoning Model in Semantic Kernel

OpenAI’s o3-mini is a small reasoning model released in January 2025 that delivers advanced problem-solving at a fraction of the cost of earlier reasoning models. It is strongest in STEM domains (science, math, and coding) and keeps the low cost and latency of the earlier o1-mini model.

Features of OpenAI o3-mini:

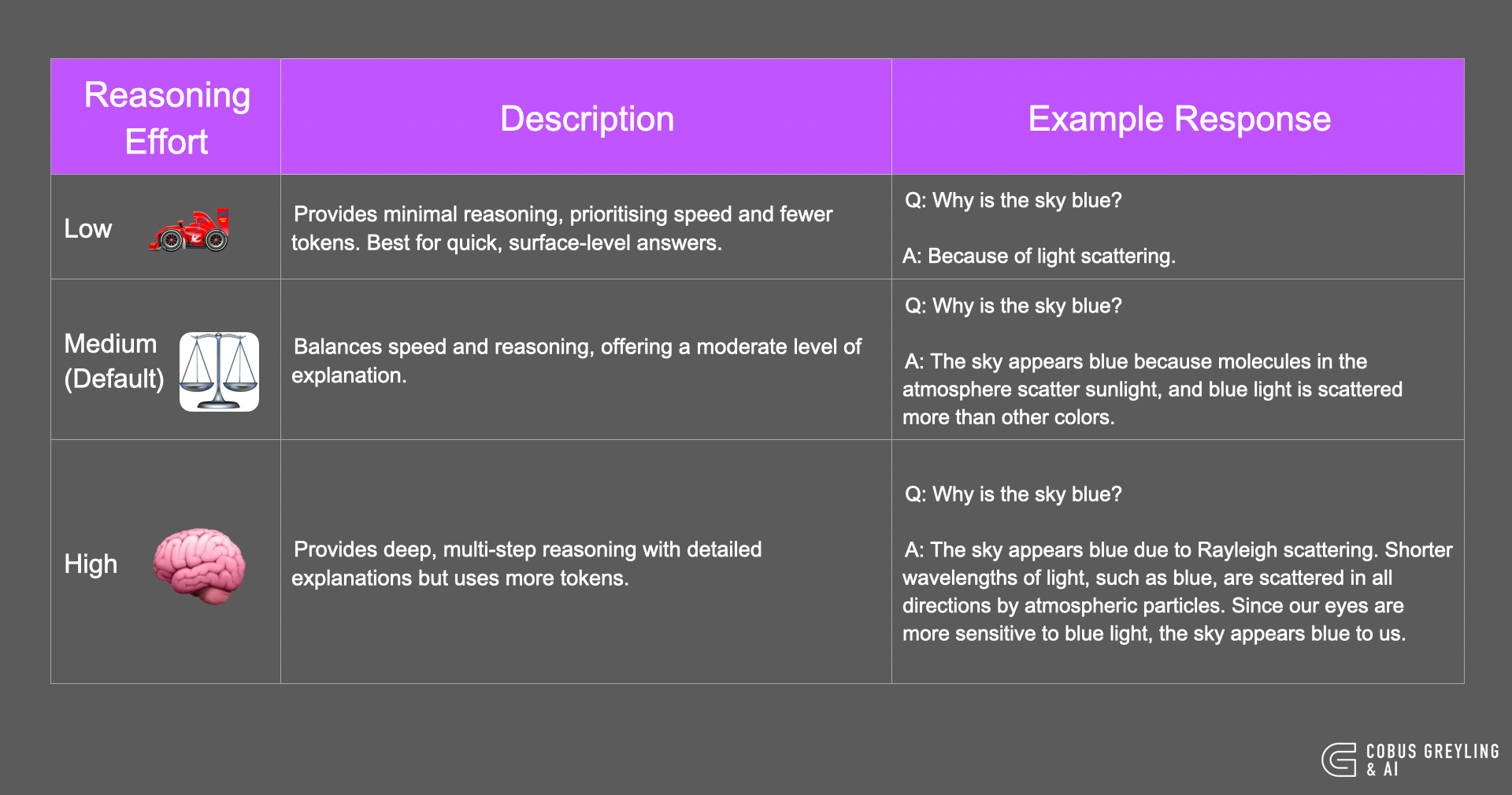

Reasoning Effort Control: The reasoning_effort parameter sets how much the model “thinks” (low, medium, or high), trading response speed against depth. At high, the model spends more time on complex queries, generating additional hidden reasoning tokens to produce a more thorough answer.

Structured Outputs: Supports JSON Schema-based output constraints, so the model returns JSON that conforms to a schema you supply, ready for downstream automation.

Function and Tool Integration: Supports function calling, as earlier OpenAI models do: the model can request calls to functions and external tools, which your application then executes. This makes it easier to build AI agents that take actions or run calculations as part of their responses.

Developer Messages: Uses the "developer" role (which takes the place of the "system" role for OpenAI's reasoning models) for instructions, allowing more flexible and explicit prompts.

Enhanced STEM Performance: Improved abilities in coding, mathematics, and scientific reasoning, outperforming earlier models on many technical benchmarks.
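Several of the features above (reasoning effort, developer messages, and Structured Outputs) surface as fields of a Chat Completions request body. The sketch below only assembles that JSON body with the standard library and sends nothing. The helper build_o3_mini_request, the example schema, and the 4000-token cap are hypothetical choices for illustration; the field names (reasoning_effort, max_completion_tokens, response_format with type json_schema) follow OpenAI's Chat Completions API.

```python
import json
from typing import Optional

# Effort levels accepted by the Chat Completions `reasoning_effort` parameter.
VALID_EFFORTS = ("low", "medium", "high")


def build_o3_mini_request(
    instructions: str,
    prompt: str,
    effort: str = "medium",
    schema: Optional[dict] = None,
    max_completion_tokens: int = 4000,
) -> dict:
    """Assemble (but do not send) a Chat Completions body for o3-mini.

    Hypothetical helper for illustration only.
    """
    if effort not in VALID_EFFORTS:
        raise ValueError(f"effort must be one of {VALID_EFFORTS}, got {effort!r}")
    body = {
        "model": "o3-mini",
        "reasoning_effort": effort,
        # Reasoning models take max_completion_tokens; the cap covers both the
        # hidden reasoning tokens and the visible answer. 4000 is arbitrary.
        "max_completion_tokens": max_completion_tokens,
        "messages": [
            # Instructions go in a "developer" message instead of "system".
            {"role": "developer", "content": instructions},
            {"role": "user", "content": prompt},
        ],
    }
    if schema is not None:
        # Structured Outputs: constrain the reply to the given JSON Schema.
        body["response_format"] = {
            "type": "json_schema",
            "json_schema": {"name": "answer", "schema": schema, "strict": True},
        }
    return body


# Hypothetical schema: the reasoning steps plus a numeric final answer.
# Strict mode expects every property listed in "required" and no extras.
ANSWER_SCHEMA = {
    "type": "object",
    "properties": {
        "steps": {"type": "array", "items": {"type": "string"}},
        "final_answer": {"type": "number"},
    },
    "required": ["steps", "final_answer"],
    "additionalProperties": False,
}

request = build_o3_mini_request(
    "You are a careful math tutor.",
    "What is 12 * 12?",
    effort="low",
    schema=ANSWER_SCHEMA,
)
print(json.dumps(request, indent=2))
```

Low effort suits a quick lookup like this one; a multi-step proof would be a better fit for "high", at the price of more reasoning tokens and latency.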
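With function calling, the model does not run code itself. It returns tool calls, and the application executes them and sends each result back as a "tool" message. Semantic Kernel can run this loop automatically for functions registered as plugins. The sketch below does the same exchange by hand so the message shapes are visible. The circle_area tool, the registry, and the example call ID are hypothetical; the tools format and the JSON-encoded arguments string follow OpenAI's Chat Completions API.

```python
import json
import math

# Tool definition in the Chat Completions `tools` format.
# `circle_area` is a hypothetical function used only for illustration.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "circle_area",
            "description": "Compute the area of a circle from its radius.",
            "parameters": {
                "type": "object",
                "properties": {"radius": {"type": "number"}},
                "required": ["radius"],
            },
        },
    }
]


def circle_area(radius: float) -> float:
    return math.pi * radius ** 2


# Map the tool names the model may request to local Python callables.
REGISTRY = {"circle_area": circle_area}


def run_tool_call(tool_call: dict) -> dict:
    """Execute one requested tool call and build the `tool` reply message."""
    name = tool_call["function"]["name"]
    if name not in REGISTRY:
        raise KeyError(f"model requested an unknown tool: {name}")
    # The model sends arguments as a JSON-encoded string, not a dict.
    args = json.loads(tool_call["function"]["arguments"])
    result = REGISTRY[name](**args)
    return {
        "role": "tool",
        "tool_call_id": tool_call["id"],
        "content": json.dumps({"result": result}),
    }


# A hand-written tool call in the shape the API returns (not real model output).
example_call = {
    "id": "call_1",
    "type": "function",
    "function": {"name": "circle_area", "arguments": '{"radius": 2}'},
}
print(run_tool_call(example_call))
```

The explicit registry keeps the model from invoking anything you did not expose. An unknown name raises an error instead of being looked up dynamically.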

Performance & Efficiency: Early evaluations show that o3-mini gives more accurate reasoning and faster responses than its predecessors. In OpenAI’s internal testing, o3-mini made 39% fewer major errors on challenging questions than the older o1-mini, while answering about 24% faster. With medium effort, o3-mini matches the larger o1 model’s performance on tough math and science problems, and at high effort it can outperform the full o1 model on certain tasks (OpenAI o3-mini | OpenAI). These gains come with substantial cost savings: o3-mini is roughly 63% cheaper to use than o1-mini, reflecting its lower per-token pricing.

Pricing: One of o3-mini’s biggest appeals is its cost-effectiveness. According to OpenAI’s pricing, o3-mini is billed at $1.10 per million input tokens and $4.40 per million output tokens. You can also get a 50% discount on cached input tokens or on batched requests, further lowering effective costs in those scenarios.
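To turn these rates into a per-request estimate, the arithmetic is tokens × price ÷ 1,000,000 for each side, with the 50% discount applied where it qualifies. The sketch below uses Decimal to avoid floating-point rounding in currency. It counts hidden reasoning tokens as output tokens, because they are billed that way. The helper estimate_cost is hypothetical. How the batch discount interacts with cached-input pricing is an assumption here: the sketch simply halves the whole total.

```python
from decimal import Decimal

# o3-mini list prices quoted above, in USD per million tokens.
INPUT_PER_M = Decimal("1.10")
OUTPUT_PER_M = Decimal("4.40")
DISCOUNT = Decimal("0.5")  # 50% off for cached input or batched requests
MILLION = Decimal(1_000_000)


def estimate_cost(
    input_tokens: int,
    output_tokens: int,
    cached_input_tokens: int = 0,
    batch: bool = False,
) -> Decimal:
    """Estimate the USD cost of one o3-mini request (hypothetical helper).

    `output_tokens` should include hidden reasoning tokens, which are
    billed as output.
    """
    if not 0 <= cached_input_tokens <= input_tokens:
        raise ValueError("cached_input_tokens must be between 0 and input_tokens")
    uncached = input_tokens - cached_input_tokens
    input_cost = (
        uncached * INPUT_PER_M + cached_input_tokens * INPUT_PER_M * DISCOUNT
    ) / MILLION
    output_cost = output_tokens * OUTPUT_PER_M / MILLION
    total = input_cost + output_cost
    # Assumption: the batch discount is applied as a flat 50% on the total.
    return total * DISCOUNT if batch else total


# 10,000 input tokens and 2,000 output tokens (reasoning + visible answer).
print(estimate_cost(10_000, 2_000))  # 0.0198
```

For example, 10,000 input tokens cost 10,000 × $1.10 ÷ 1,000,000 = $0.011, and 2,000 output tokens cost $0.0088, for a total of $0.0198. A high-effort request that generates many reasoning tokens shifts this balance toward the output side.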

Now, let’s see how to use o3-mini in Semantic Kernel (SK). Because o3-mini uses the same OpenAI Chat Completions API format as other OpenAI chat models, we can plug it into SK with the existing OpenAI connector.