OpenAI Disrupts Iranian Misinformation Campaign Targeting U.S. Elections

OpenAI, the company behind AI tools such as ChatGPT, has acted against the misuse of its technology by foreign entities. The company disrupted an Iranian influence operation designed to manipulate public opinion ahead of the U.S. presidential election.

Actions Against Misuse of AI Technology

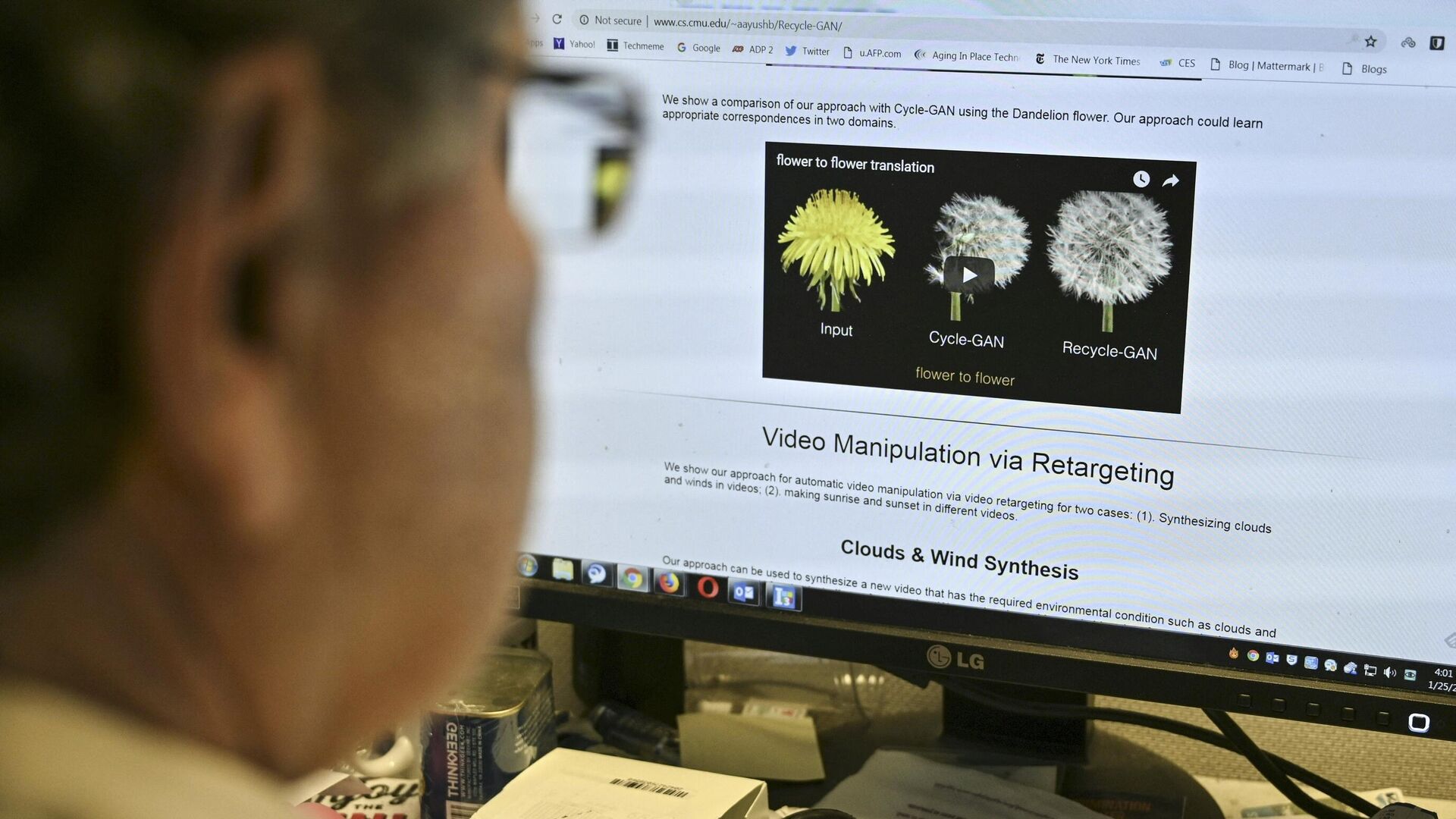

Following claims that Iranian groups had hacked Donald Trump's presidential campaign, OpenAI intensified its efforts to monitor for misuse of its tools in spreading misinformation. On August 16, 2024, the company announced that it had deactivated multiple ChatGPT accounts associated with Iranian disinformation activities.

These accounts were found to be involved in creating fake news articles and crafting misleading social media content related to the U.S. presidential candidates. This operation, known as "Storm-2035," aimed to influence voter perceptions on various social media platforms.

Impact and Reach of Misinformation Campaigns

Storm-2035 extended its efforts beyond the U.S. elections, covering topics such as the Israel-Hamas conflict and political narratives. By polarizing public opinion and targeting undecided voters, the campaign sought to sway perceptions on critical issues.

Although it used AI to craft convincing disinformation, the Iranian campaign did not achieve significant audience engagement. Ben Nimmo, who leads OpenAI's intelligence team, highlighted the limited impact of these attempts.

The Role of AI in Disinformation

Experts have expressed concerns about state-affiliated actors leveraging AI technologies for misinformation. This incident underscores the need for proactive measures to prevent AI tools from disrupting democratic processes, especially during important electoral periods.

OpenAI has been vigilant, suspending accounts that misuse its technology. The company continues to enhance its detection tools to combat suspicious activities related to its AI systems.

Challenges and Future Preparedness

The incident reveals how generative AI can inadvertently support misinformation campaigns, prompting discussions on ethical considerations. As AI technologies evolve, developers face challenges in implementing effective safeguards.

The operation OpenAI disrupted had distributed its content through accounts on X (formerly Twitter) and Instagram. Social networks have also become more proactive in identifying and removing content linked to foreign interference.

Addressing Growing Threats

As nations prepare for elections, tech companies face the evolving challenge of countering multifaceted disinformation tactics. With AI making content creation easier, online platforms are increasingly vulnerable to manipulation.

Moving forward, experts emphasize the need for ongoing adaptation to combat sophisticated disinformation strategies. The integrity of democracies worldwide is at stake, urging collaborative efforts between tech firms and electoral bodies to safeguard the information ecosystem.