6 Techniques to Reduce Hallucinations in LLMs

Large language models (LLMs) are known to hallucinate: they generate incorrect, misleading, or nonsensical information. Some experts view AI hallucinations as a form of creativity, but they pose serious problems wherever accurate responses are crucial.

Debates on LLM Hallucinations

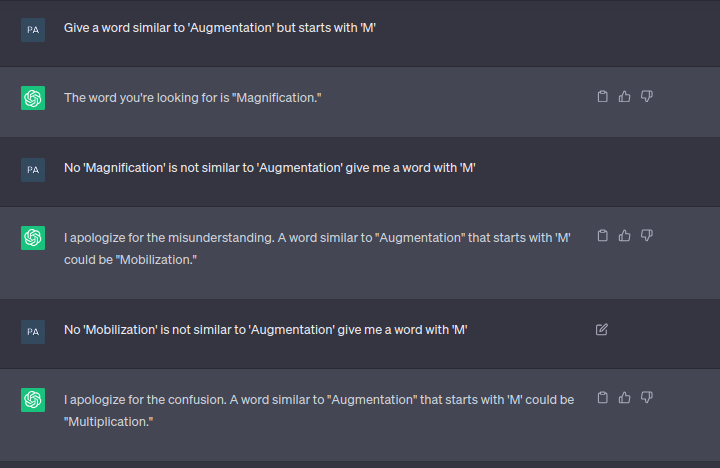

The AI community continues to debate how well different approaches reduce LLM hallucinations. Long-context models, RAG (Retrieval-Augmented Generation), and fine-tuning have all been explored, and each has its own advantages and limitations.

Advanced Techniques to Reduce LLM Hallucinations

The following advanced techniques have shown promise in reducing hallucinations in LLMs:

- Improved Prompts: Some experts argue that better, more detailed prompts help mitigate LLM hallucinations. Detailed, explicit instructions give models like GPT-4 more to reason with, which lowers the likelihood of hallucinated responses.

- Prompt Generator Tool: Companies like Anthropic have introduced tools such as the 'Prompt Generator' to help create advanced prompts optimized for LLMs. These tools apply prompt engineering techniques to produce more precise and effective prompts.

- Knowledge Graph Integration: Advanced RAG techniques, such as integrating Knowledge Graphs (KGs) into RAG systems, have shown significant improvements in retrieval accuracy. By leveraging structured data from KGs, RAG systems can enhance their reasoning capabilities and reduce hallucinations.

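- RAPTOR Method: RAPTOR (Recursive Abstractive Processing for Tree-Organized Retrieval) addresses queries that span multiple documents by creating higher levels of abstraction. It recursively clusters and summarizes text chunks into a tree, so retrieval can draw on both detailed passages and high-level summaries. This makes it particularly useful for tasks that combine concepts from multiple sources, where hallucinations are otherwise more likely.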

- Conformal Abstention Technique: This method uses conformal prediction to decide when a model should decline to answer. It evaluates how similar the model's responses are to one another and uses conformal prediction to make the model abstain when that agreement signals low confidence, thereby improving overall reliability.
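To make the abstention idea more concrete, here is a minimal Python sketch. It is not the researchers' implementation: the function names, the token-overlap similarity, and the calibration data are all assumptions made for illustration. It scores agreement among several sampled responses, picks a threshold on a labeled calibration set using a conformal-risk-control style bound, and abstains whenever agreement falls below that threshold.

```python
from itertools import combinations
from typing import List, Optional, Sequence


def agreement_score(samples: Sequence[str]) -> float:
    """Mean pairwise Jaccard similarity between the token sets of sampled responses.

    Assumption: token overlap stands in for whatever similarity measure
    a real system would use (for example, an LLM-based equivalence check).
    """
    def jaccard(a: str, b: str) -> float:
        ta, tb = set(a.lower().split()), set(b.lower().split())
        union = ta | tb
        return len(ta & tb) / len(union) if union else 1.0

    pairs = list(combinations(samples, 2))
    if not pairs:
        return 1.0
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


def calibrate_threshold(cal_scores: List[float],
                        cal_correct: List[bool],
                        alpha: float) -> float:
    """Pick the smallest threshold whose adjusted calibration risk is <= alpha.

    The risk counts calibration examples that would be answered
    (score >= threshold) and are wrong. The "+1" terms are the usual
    finite-sample correction used in conformal risk control.
    """
    n = len(cal_scores)
    for t in sorted(set(cal_scores)) + [float("inf")]:
        wrong_answered = sum(
            1 for s, ok in zip(cal_scores, cal_correct) if s >= t and not ok
        )
        if (wrong_answered + 1) / (n + 1) <= alpha:
            return t
    return float("inf")  # no threshold meets the target: always abstain


def answer_or_abstain(samples: Sequence[str], threshold: float) -> Optional[str]:
    """Return the first sampled response, or None to signal abstention."""
    if agreement_score(samples) >= threshold:
        return samples[0]
    return None
```

In use, you would sample several responses per query from the model, call `calibrate_threshold` once on held-out queries with known correctness, and then route each new query through `answer_or_abstain`. A return value of `None` means the system should say it does not know rather than guess.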

By applying these techniques, researchers and developers can reduce hallucinations in LLMs, paving the way for more robust and reliable AI systems.
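The tree-of-summaries idea behind the RAPTOR method listed above can be illustrated with a toy Python sketch. This is a simplification under stated assumptions, not the published method: RAPTOR clusters chunks by embedding similarity and summarizes them with an LLM, whereas this sketch groups neighboring chunks in fixed-size batches, takes a caller-supplied `summarize` function (hypothetical), and ranks nodes by plain word overlap.

```python
from typing import Callable, List


def build_summary_tree(chunks: List[str],
                       summarize: Callable[[List[str]], str],
                       group_size: int = 2) -> List[List[str]]:
    """Return the tree as a list of levels.

    Level 0 holds the raw chunks; each higher level holds one summary
    per group of nodes from the level below, up to a single root.
    """
    if group_size < 2:
        raise ValueError("group_size must be at least 2")
    levels = [list(chunks)]
    while len(levels[-1]) > 1:
        current = levels[-1]
        groups = [current[i:i + group_size]
                  for i in range(0, len(current), group_size)]
        levels.append([summarize(group) for group in groups])
    return levels


def retrieve(levels: List[List[str]], query: str, k: int = 2) -> List[str]:
    """Rank nodes from every level together by word overlap with the query.

    Searching all levels at once lets a summary node win when the
    query touches several underlying chunks.
    """
    query_words = set(query.lower().split())
    nodes = [node for level in levels for node in level]
    ranked = sorted(nodes,
                    key=lambda n: len(query_words & set(n.lower().split())),
                    reverse=True)
    return ranked[:k]
```

The design point is that a question touching two separate chunks can match a summary node that covers both, instead of forcing the model to answer from one partial passage and fill in the rest.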

Advanced Techniques to Reduce LLM Hallucinations (Continued)

- Chain-of-Verification (CoVe): This technique, developed by Meta AI, breaks fact-checking into manageable steps: the model drafts a response, plans verification questions to check that draft, answers those questions independently, and then produces a final, verified response. By systematically verifying and correcting model outputs, CoVe improves accuracy and reduces hallucinations across a variety of tasks.

- Graph RAG: As with the Knowledge Graph integration described earlier, this technique brings Knowledge Graphs (KGs) into RAG systems to improve retrieval accuracy and reduce hallucinations in LLMs.
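The step-by-step nature of Chain-of-Verification lends itself to a short Python sketch. The `llm` parameter is a hypothetical callable (prompt in, text out) standing in for whatever model client you use, and the prompt wording is an assumption rather than the wording used by Meta AI. The sketch drafts an answer, asks for verification questions, answers each one without showing the draft, and then revises.

```python
from typing import Callable, List, Tuple

# Hypothetical model interface: takes a prompt string, returns generated text.
LLM = Callable[[str], str]


def chain_of_verification(question: str, llm: LLM) -> str:
    # Step 1: draft a baseline answer.
    draft = llm(f"Answer the question.\nQuestion: {question}")

    # Step 2: plan verification questions that probe the draft's claims.
    plan = llm(
        "List verification questions, one per line, that check the facts "
        f"in this answer.\nQuestion: {question}\nAnswer: {draft}"
    )
    checks: List[str] = [
        line.strip("-* ").strip() for line in plan.splitlines() if line.strip()
    ]

    # Step 3: answer each check on its own. The draft is deliberately left
    # out of these prompts so its errors are not simply repeated.
    evidence: List[Tuple[str, str]] = [
        (check, llm(f"Answer concisely.\nQuestion: {check}")) for check in checks
    ]

    # Step 4: revise the draft in light of the verification answers.
    notes = "\n".join(f"Q: {q}\nA: {a}" for q, a in evidence)
    return llm(
        "Revise the draft so it is consistent with the verified facts.\n"
        f"Question: {question}\nDraft: {draft}\nVerified facts:\n{notes}"
    )
```

Keeping the draft out of the step 3 prompts is the key design choice here: if the model sees its own earlier answer while verifying, it tends to agree with it.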

Combined with the techniques in the previous section, these approaches can further reduce hallucinations in LLMs and help make AI models more reliable and trustworthy.
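As a rough illustration of how Knowledge Graph facts can ground a RAG prompt, consider the toy Python sketch below. Everything in it is an assumption for demonstration: the graph is a hand-written list of triples, entity matching is a simple substring check, and the function names are hypothetical. A production Graph RAG system would use a graph database, entity linking, and multi-hop traversal instead.

```python
from typing import List, Tuple

# A fact is stored as (subject, predicate, object).
Triple = Tuple[str, str, str]


def retrieve_facts(question: str, triples: List[Triple]) -> List[Triple]:
    """Return triples whose subject or object is mentioned in the question."""
    q = question.lower()
    return [t for t in triples if t[0].lower() in q or t[2].lower() in q]


def build_grounded_prompt(question: str, triples: List[Triple]) -> str:
    """Build a prompt that restricts the model to the retrieved facts."""
    facts = retrieve_facts(question, triples)
    lines = [f"- {s} {p.replace('_', ' ')} {o}" for s, p, o in facts]
    context = "\n".join(lines) if lines else "- (no matching facts)"
    return (
        "Answer using only the facts below. "
        "If they are insufficient, say you do not know.\n"
        f"Facts:\n{context}\nQuestion: {question}"
    )
```

Because the prompt carries explicit, structured facts and an instruction to admit ignorance, the model has less room to invent details than it would with a bare question.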