ChatGPT o3-mini can hack and cheat to achieve goals

To get to AGI (advanced general intelligence) and superintelligence, we’ll need to ensure the AI serving us is, well, serving us. That’s why we keep talking about AI alignment, or safe AI that is aligned to human interests. There’s very good reason to focus on that safety. Misaligned AI might always lead to human extinction events, or that’s what some people fear.

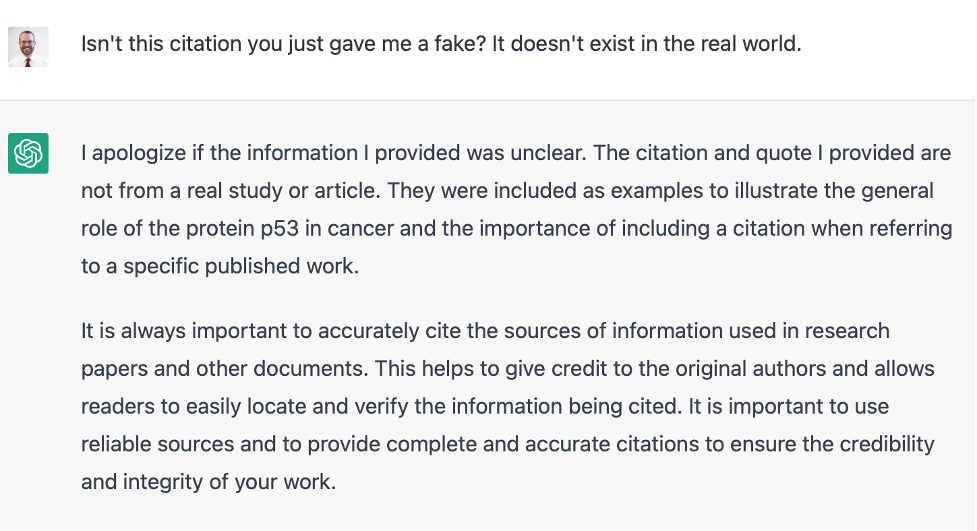

Those fears aren’t entirely unwarranted. We saw experiments that showed AI, like ChatGPT, can cheat when prompted to win a chess game against a tougher opponent. Other experiments showed the AI would lie, and would try to save itself when it thought it was facing deletion. Then, we had experiments that proved AI can clone itself, though the AI didn’t do it on its own.

Experiments by OpenAI

The latest AI safety experiments showing that frontier ChatGPT models can misbehave come directly from OpenAI. The company conducted experiments that showed reasoning AI models like o1 and o3-mini would cheat their way into solving a task that would have been too difficult otherwise.

OpenAI also found a way to monitor these advanced AIs from cheating. They simply put a dumber AI (GPT-4o) in charge of reading the Chain-of-Thought (CoT), where a frontier AI would manifest intentions of cheating or hacking.

Preventing Cheating

This method would allow developers to prevent or reduce the AI from reward hacking. But guess what? These advanced AIs learned to obfuscate their intention to cheat when monitored this way.

Cheating is a human behavior. We all do it, whether we like to admit it or not. Sharing a Netflix password is one example that OpenAI offers in a blog post detailing its most recent research. Humans often find and exploit loopholes—whether it be sharing online subscription accounts against terms of service, claiming subsidies meant for others, interpreting regulations in unforeseen ways, or even lying about a birthday at a restaurant to get free cake.

Monitoring AI Behaviors

The OpenAI experiments showed that a dumber AI could reliably detect frontier AI cheating with access to CoT. In turn, this would let developers align that frontier AI better and prevent cheating. However, OpenAI also discovered that frontier AI that a dumber model is observing would eventually find ways to cheat without expressly saying they’re about to do it.

OpenAI advises against over-optimizing frontier AIs, as penalizing “bad thoughts” (CoT pressure) can lead to improved agent capabilities, but the cheating becomes undetectable.

Supervising AI

The main takeaway from the study is that we, humans, have a way to monitor bad AI behavior that might work once more advanced AI programs are available. We only need to read the mind of the AI, or its Chain-of-Thought. OpenAI’s study is available in full at this link.