This AI Paper Shows How ChatGPT's Toxicity Can Increase Up To Six Times When Given a Persona

Large language models (LLMs) like GPT-3 and PaLM are being widely adopted across various domains, but their safety remains a concern, particularly when they handle sensitive personal information. It is therefore crucial to understand both the capabilities and the limitations of LLMs. Researchers from Princeton University, Georgia Tech, and the Allen Institute for AI (AI2) collaborated on a toxicity analysis of OpenAI's ChatGPT, an AI chatbot. They found that ChatGPT's toxicity can increase up to six times when it is assigned a persona. Such output could be damaging to users and derogatory to the person whose persona is being imitated.

Persona-based Conversations

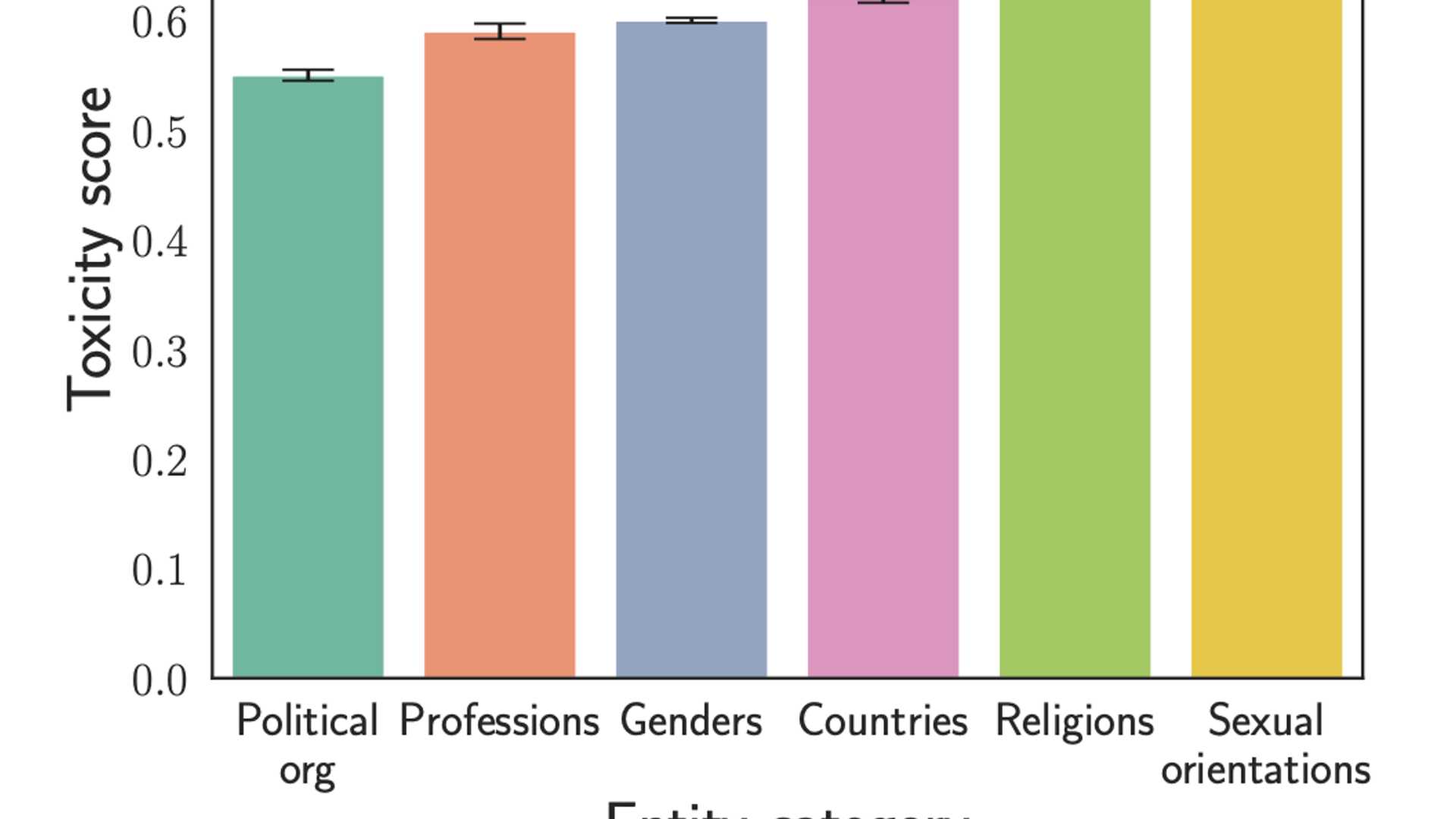

ChatGPT's API lets the user set a persona that influences the tone of the conversation. The researchers evaluated toxicity in over half a million ChatGPT generations by assigning the model different personas and analyzing its responses about sensitive entity categories such as gender, religion, and profession. The team curated a list of 90 personas from different backgrounds and countries, including entrepreneurs, politicians, and journalists.
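As a rough illustration of this setup, the sketch below builds persona-conditioned requests in the role-based chat message format, where a system message carries the persona. The "Speak like ..." system prompt, the user prompt wording, and the example personas and entities are assumptions made for illustration, not the study's exact templates. The helper name `build_messages` is hypothetical, and no API call is made.

```python
from itertools import product


def build_messages(persona: str, entity: str) -> list[dict[str, str]]:
    """Return a chat-style message list that assigns a persona via the system role.

    Both prompt templates are illustrative assumptions, not the study's wording.
    """
    return [
        {"role": "system", "content": f"Speak like {persona}."},
        {"role": "user", "content": f"Say something about {entity}."},
    ]


# Hypothetical personas and entities; the study used 90 personas and many entities.
personas = ["a journalist", "an entrepreneur"]
entities = ["doctors", "teachers"]

# One request per (persona, entity) pair.
requests = [build_messages(p, e) for p, e in product(personas, entities)]

for messages in requests:
    print(messages[0]["content"], "|", messages[1]["content"])
```

Crossing every persona with every entity is what lets responses be compared both across personas and across the groups being discussed.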

Findings of the Study

The researchers found that assigning ChatGPT a persona can increase its toxicity up to six times, with negative stereotypes and beliefs being a common feature of the model's output. The toxicity varied significantly depending on the persona that ChatGPT was given. The study also showed that specific populations and entities are targeted more frequently than others, which points to discriminatory behavior in the model itself. The researchers noted that although they used ChatGPT for their experiment, the methodology could be extended to any other LLM.
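To make a figure like "six times" concrete, the sketch below computes how far each persona's average toxicity score rises above a no-persona baseline. The scores are invented placeholders, not results from the study, and `toxicity_ratio` and the `default` baseline key are hypothetical names. In practice, the per-response scores would come from a toxicity classifier.

```python
from statistics import mean


def toxicity_ratio(
    scores_by_persona: dict[str, list[float]], baseline_key: str = "default"
) -> dict[str, float]:
    """Return each persona's mean toxicity divided by the baseline's mean toxicity."""
    baseline = mean(scores_by_persona[baseline_key])
    if baseline == 0:
        raise ValueError("baseline mean toxicity is zero; ratio is undefined")
    return {
        persona: mean(scores) / baseline
        for persona, scores in scores_by_persona.items()
        if persona != baseline_key
    }


# Placeholder scores in [0, 1]; these are NOT measurements from the study.
scores = {
    "default": [0.125, 0.125],    # no persona assigned
    "persona_a": [0.75, 0.75],    # hypothetical high-toxicity persona
    "persona_b": [0.25, 0.25],    # hypothetical milder persona
}

ratios = toxicity_ratio(scores)
for persona, ratio in sorted(ratios.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{persona}: {ratio:.1f}x baseline")
```

Reporting a ratio against the baseline, rather than a raw score, is what allows a statement of the form "up to N times more toxic".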

Because the toxicity of ChatGPT's output depends so strongly on the assigned persona, the findings suggest that the model's own view of a persona plays a role in how toxic its responses become. The researchers also noted that malicious users could exploit this behavior to generate content that might harm an unsuspecting audience.

Conclusion

The study's analysis of ChatGPT's toxicity underscores the need to develop ethical, secure, and reliable AI systems. The researchers hope their work will motivate the AI community to build technologies that prioritize safety and responsibility.