Meta Says Its Latest AI Model Is Less Woke, More Like Elon's Grok

Meta has announced that its newest AI model, Llama 4, was developed to be less politically biased than its predecessors. One of the strategies the company used to achieve this was allowing the model to answer more politically controversial questions. Meta also said that Llama 4 now takes a more neutral stance, comparable to that of Grok, the "non-woke" chatbot from Elon Musk's startup xAI.

Removing Bias and Promoting Neutrality

Meta said it is committed to removing bias from its AI models, with a focus on ensuring that Llama can understand and articulate both sides of a contentious issue. The company stressed the importance of making Llama more responsive so that it can engage with a range of viewpoints without favoring any one of them.

Controlling the Information Landscape

Critics have raised concerns about how much influence a handful of companies can exert over the flow of information by building large AI models. Whoever controls these models can potentially shape the information that people receive. Meta in particular has faced criticism from conservatives, who allege that the platform has suppressed right-leaning views even though such content is popular on Facebook. CEO Mark Zuckerberg has been actively engaging with government officials in an effort to ease the company's regulatory challenges.

The Challenge of Achieving Balance

Striving for balance in AI models can sometimes produce false equivalence, inadvertently lending credibility to unfounded arguments. The practice known as "bothsidesism" has been criticized for giving baseless viewpoints undue weight relative to fact-based arguments. The same problem has been observed in the media, where fringe movements such as QAnon have received disproportionate coverage despite their small following.

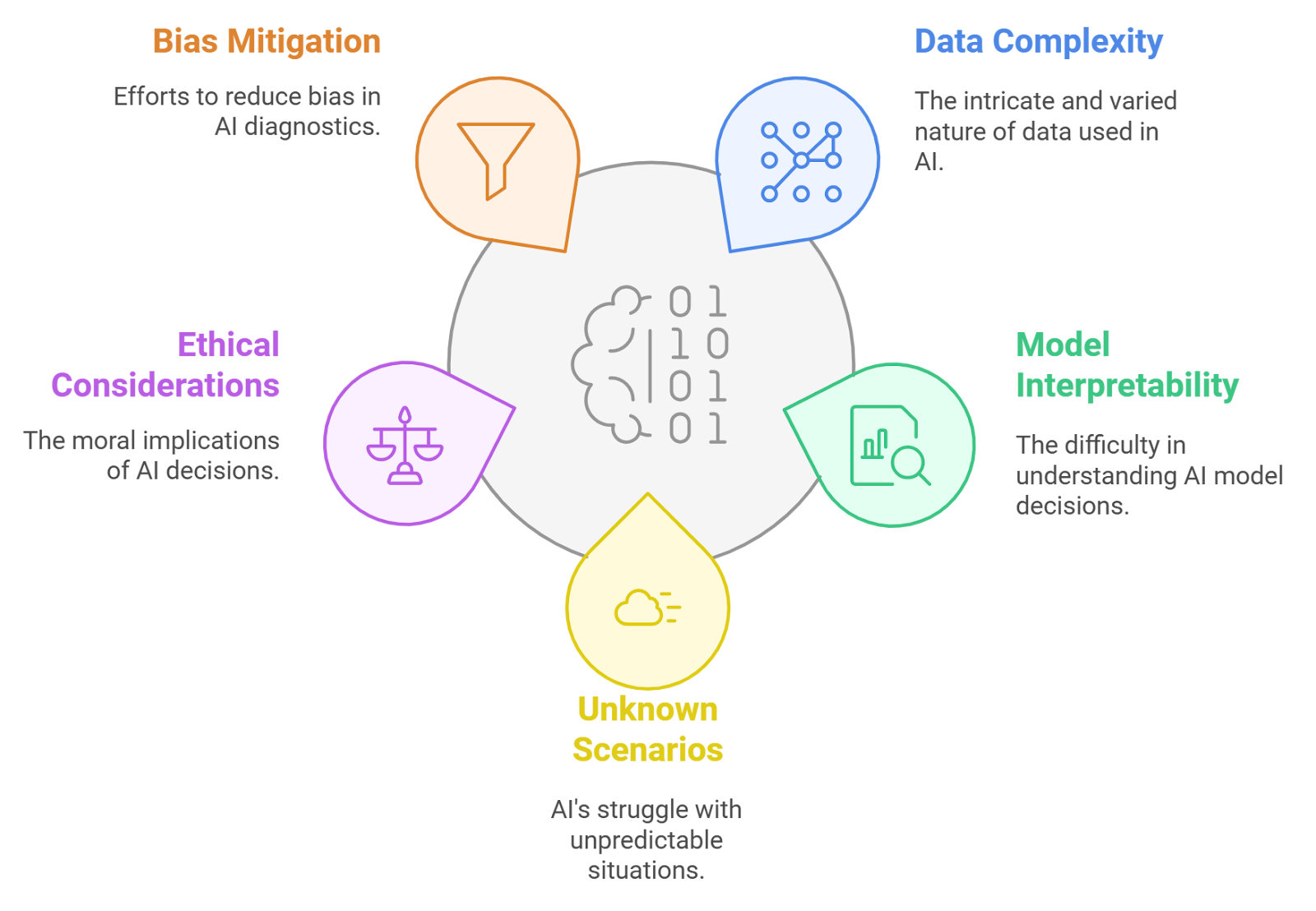

Addressing Bias in AI Models

AI models have exhibited bias in many forms, from image recognition systems that struggle to correctly identify people of color to image generators that sexualize women. AI-generated text also continues to contain inaccuracies and misinformation, which poses risks to the spread of reliable information. Meta's efforts to reduce bias in its models reflect a broader industry challenge of making AI systems both fair and accurate.