The Truth Behind OpenAI's Silence On GPT-4

In March 2023, OpenAI made headlines with the launch of GPT-4, but the company drew criticism for withholding details about the model, including its size and architecture.

Speculation about GPT-4's inner workings has been a hot topic among AI experts. OpenAI's CEO, Sam Altman, hinted at the vastness of the model, suggesting that disappointment may be inevitable.

Speculations and Confirmations

George Hotz, a well-known hacker and software engineer, theorized that GPT-4 comprises eight separate models, each with 220 billion parameters. Soumith Chintala, co-creator of PyTorch, later backed this claim, which implies a total of roughly 1.76 trillion parameters (8 × 220 billion).

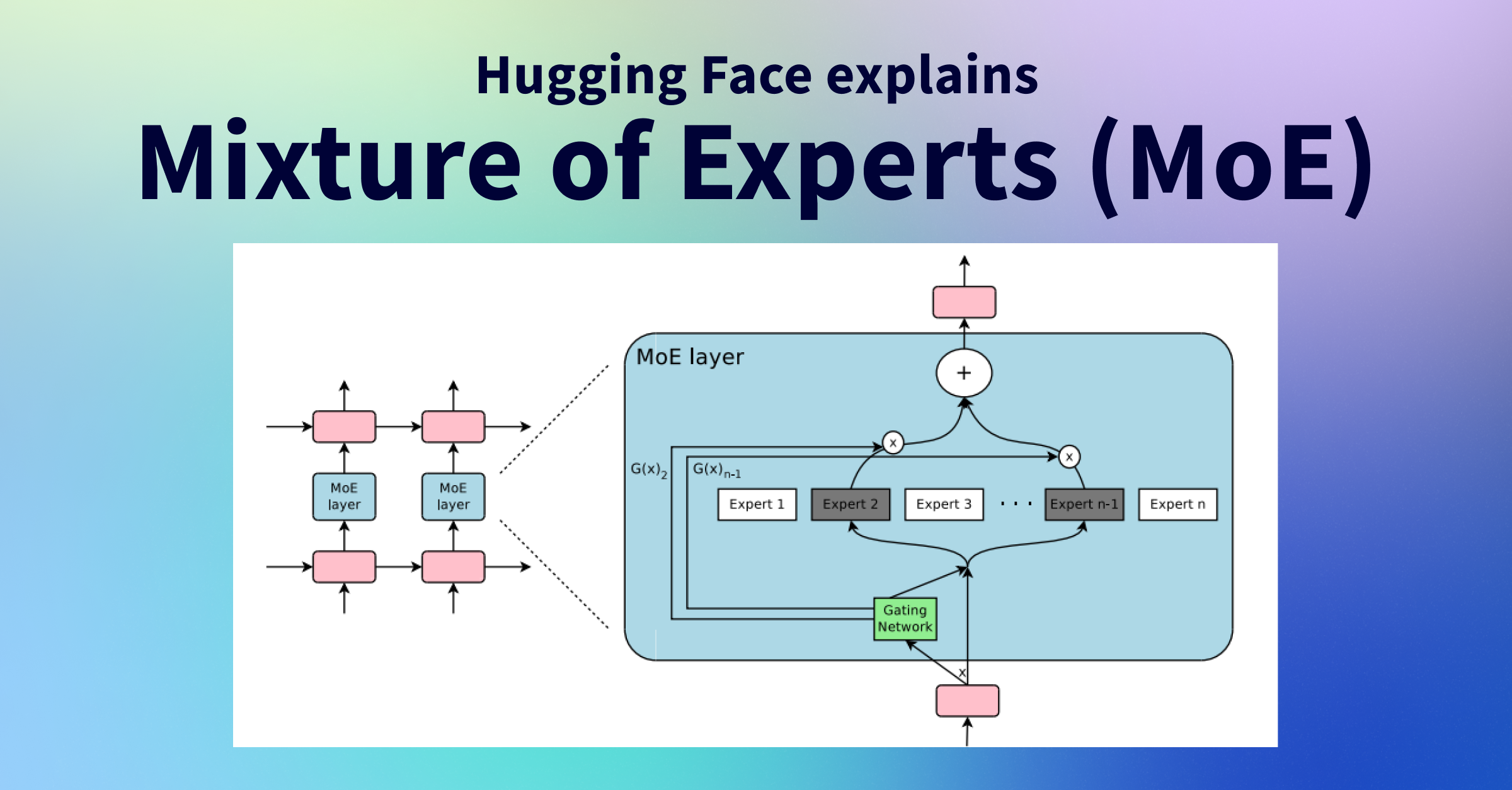
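The 1.76 trillion figure follows directly from the two reported numbers. The short check below is only back-of-the-envelope arithmetic on those unconfirmed figures; it assumes the eight models share no parameters, which the reports do not specify.

```python
# Figures as reported in the text (unconfirmed by OpenAI).
NUM_EXPERTS = 8
PARAMS_PER_EXPERT = 220_000_000_000  # 220 billion

# Assumption: no parameters are shared between the eight models.
total_params = NUM_EXPERTS * PARAMS_PER_EXPERT

print(f"{total_params / 1e12:.2f} trillion parameters")
```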

According to these reports, GPT-4 uses a mixture-of-experts design: several expert models, each tailored for specific tasks, sit behind a routing mechanism that decides which of them should handle a given input. The experts' outputs are then combined, which is meant to improve responses across a wide range of domains.
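To make the idea concrete, here is a minimal sketch of how a mixture-of-experts layer can route an input. It is a generic illustration, not OpenAI's implementation: the top-2 routing, the random gate weights, and the toy experts (`make_expert`, which simply scales its input) are all assumptions chosen for readability.

```python
import math
import random


def softmax(scores):
    """Turn raw gate scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """Hypothetical stand-in for an expert network: scales its input."""
    return lambda x: [scale * v for v in x]


def moe_forward(x, gate_weights, experts, top_k=2):
    """Route x to the top_k highest-scoring experts and blend their outputs."""
    # One gate score per expert: dot product of the input with that expert's gate vector.
    scores = [sum(w * v for w, v in zip(row, x)) for row in gate_weights]
    probs = softmax(scores)

    # Keep only the top_k experts; the others are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)

    output = [0.0] * len(x)
    for i in chosen:
        weight = probs[i] / norm  # renormalise over the chosen experts
        expert_out = experts[i](x)
        output = [o + weight * v for o, v in zip(output, expert_out)]
    return output, chosen


random.seed(0)
dim, num_experts = 4, 8
experts = [make_expert(i + 1) for i in range(num_experts)]
gate_weights = [[random.uniform(-1, 1) for _ in range(dim)] for _ in range(num_experts)]

x = [0.5, -1.0, 0.25, 2.0]
output, chosen = moe_forward(x, gate_weights, experts)
print("experts used:", chosen)
print("output:", output)
```

Only the selected experts run for a given input, which is why a model of this kind can hold far more parameters than it uses on any single request.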

Benefits of the MoE Architecture

The Mixture of Experts (MoE) architecture offers several advantages, including enhanced accuracy through domain-specific fine-tuning. This modular approach also makes updates and maintenance easier than they are with traditional monolithic models.

Hotz suggested that GPT-4 employs iterative inference to refine its outputs, potentially comparing the outputs of its expert models to catch errors. However, every additional pass requires more compute, so this iterative process could significantly increase operational costs.
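The cost concern can be illustrated with a toy loop. This is a sketch of iterative refinement in general, not a description of how GPT-4 works: `iterative_inference` and `toy_generate` are hypothetical names, and the "model" here just appends a marker to show that each round is one more full model call.

```python
def iterative_inference(prompt, generate, num_rounds):
    """Call generate num_rounds times, feeding each draft back in.

    Returns the final draft and the number of model calls made.
    """
    draft = ""
    calls = 0
    for _ in range(num_rounds):
        draft = generate(prompt, draft)
        calls += 1
    return draft, calls


def toy_generate(prompt, previous_draft):
    """Hypothetical stand-in for a model call: marks each revision with '*'."""
    return (previous_draft or prompt) + "*"


for rounds in (1, 2, 4):
    draft, calls = iterative_inference("answer", toy_generate, rounds)
    print(f"{rounds} round(s): {calls} model call(s), draft = {draft!r}")
```

Under this simple model, compute grows linearly with the number of rounds, so a system that refines each answer several times pays several times the cost of a single pass.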

Challenges Faced by OpenAI

Users have reported a decline in response quality from ChatGPT, prompting adjustments to the model's performance and default settings. While API users seem to have avoided these issues, concerns linger that OpenAI is prioritizing cost-effectiveness over quality.

Conclusion

The hurried release of GPT-4 underscores the complexities faced by OpenAI in balancing innovation with operational efficiency. While the MoE model marks a significant advancement, scalability issues highlight the need for strategic solutions to sustain performance and user satisfaction.