Anthropic, an OpenAI rival, announced on the 22nd that it has partially decoded the "black box" of a large language model (LLM)

Anthropic, a prominent artificial intelligence company, announced on the 22nd that it has made significant progress in understanding large language models (LLMs), whose internal workings have long been opaque.

Decoding the Black Box of Language Models

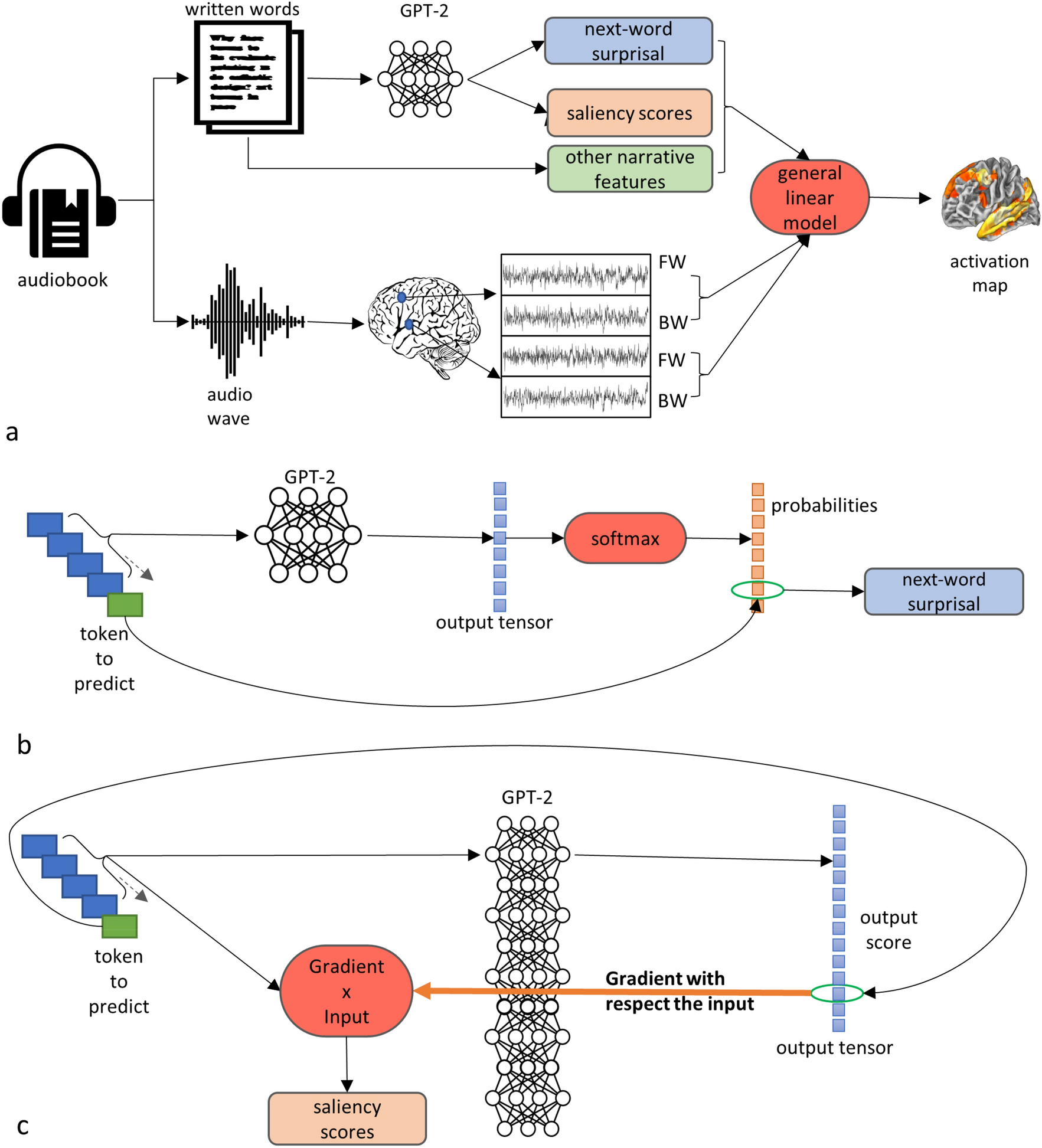

Large language models such as OpenAI's GPT-3.5 have a vast number of parameters, which makes their inner workings difficult to understand. These models are known to produce diverse and complex output, but the exact mechanisms that generate it have remained elusive.

Anthropic's latest work aims to shed light on this black box by examining the internals of its language model Claude 3 Sonnet. By analyzing how millions of concepts are represented inside the model, researchers have gained valuable insights into how it functions.

Unveiling the Inner Workings of AI Models

Traditionally, AI models have been treated as enigmatic black boxes, producing outputs without clear explanations. This lack of transparency raises concerns regarding the safety and reliability of these models. Without a thorough understanding of how these models operate internally, the risks of generating harmful or biased outcomes persist.

Using a technique called dictionary learning, Anthropic has begun to isolate recurring patterns of neuron activation within language models. With this method, the model's internal state can be described in terms of a small number of active features rather than a large number of active neurons, offering a glimpse into the underlying features that influence its behavior.
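
The idea behind dictionary learning can be illustrated with a toy sketch. The Python below assumes a small, fixed, hand-written dictionary of feature directions (the names and numbers are hypothetical, not taken from Anthropic's work) and uses greedy matching pursuit to express an activation vector as a combination of a few features. It covers only the encoding half of the technique: real dictionary learning also learns the dictionary itself from data, and the article does not say which algorithm Anthropic used.

```python
import math


def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


# Hypothetical toy dictionary: four feature directions in a
# three-dimensional activation space (more features than dimensions).
DICTIONARY = {
    "city_name": normalize([1.0, 0.0, 0.0]),
    "dna_sequence": normalize([0.0, 1.0, 0.0]),
    "ethics": normalize([0.0, 0.0, 1.0]),
    "philosophy": normalize([0.0, 1.0, 1.0]),
}


def sparse_code(activation, dictionary, max_features=2, tol=1e-9):
    """Greedy matching pursuit: explain `activation` with a few features.

    Returns the feature strengths and the part left unexplained.
    """
    residual = list(activation)
    code = {}
    for _ in range(max_features):
        # Pick the feature direction that best matches what is left.
        name, direction = max(
            dictionary.items(), key=lambda item: abs(dot(residual, item[1]))
        )
        strength = dot(residual, direction)
        if abs(strength) < tol:
            break
        code[name] = code.get(name, 0.0) + strength
        residual = [r - strength * d for r, d in zip(residual, direction)]
    return code, residual


# Build an activation that mixes two features, then decompose it again.
activation = [
    2.0 * c + 1.5 * p
    for c, p in zip(DICTIONARY["city_name"], DICTIONARY["philosophy"])
]
code, residual = sparse_code(activation, DICTIONARY)
print(code)
```

In this toy case the activation is explained by two named features, with strengths close to the 2.0 and 1.5 used to build it, instead of three raw coordinates. That is the kind of structured description the technique is meant to provide.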

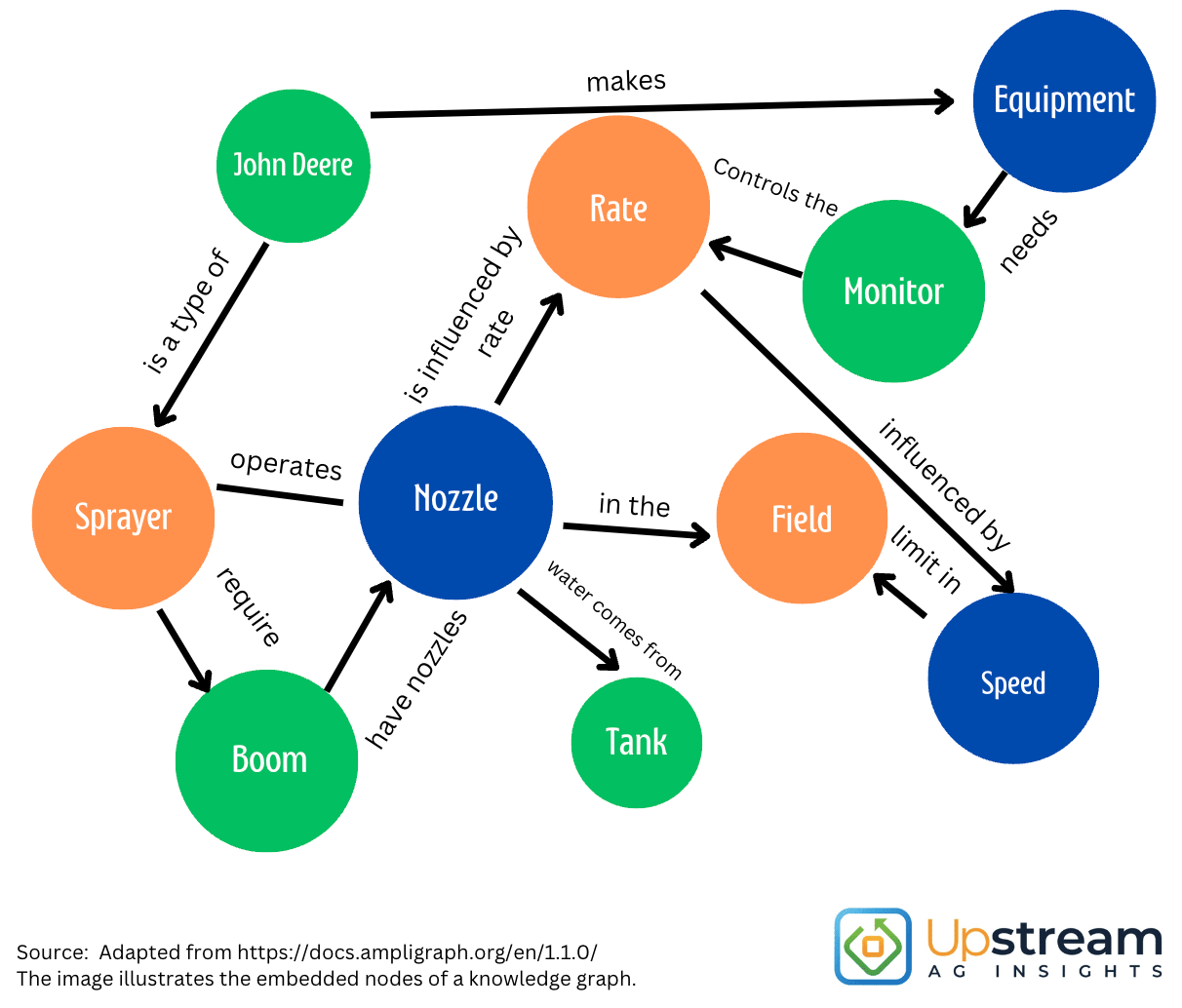

Mapping Internal Concepts

Through extensive experimentation, Anthropic has identified a wide range of features within the Claude 3 Sonnet model, from concrete entities such as city names and DNA sequences to abstract concepts such as philosophical dilemmas and ethical considerations. These features showcase the model's versatility and hint at its ability to interpret and respond to a wide array of inputs.

Furthermore, features that lie close to one another inside the model tend to represent related concepts. This organization loosely resembles the way humans group similar ideas together.
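
One common way to make "closeness" between features concrete is cosine similarity between their direction vectors. The sketch below is an illustration under that assumption, with made-up three-dimensional vectors and hypothetical feature names; the article does not specify the distance measure Anthropic used.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical feature directions with invented values.
FEATURES = {
    "philosophical_dilemma": [0.9, 0.1, 0.4],
    "ethical_consideration": [0.8, 0.2, 0.5],
    "city_name": [0.1, 0.9, 0.0],
    "dna_sequence": [0.0, 0.2, 0.9],
}


def nearest_features(name, features, k=2):
    """Return the k features most similar to `name`, best match first."""
    query = features[name]
    scored = [
        (other, cosine(query, vector))
        for other, vector in features.items()
        if other != name
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


neighbors = nearest_features("philosophical_dilemma", FEATURES)
for other, score in neighbors:
    print(f"{other}: {score:.2f}")
```

With these invented vectors, the closest neighbor of the philosophical feature is the ethical one, which mirrors the pattern of related concepts sitting near each other.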

Impact on Model Behavior

Manipulating these features has yielded striking results that show the direct influence of specific concepts on the model's responses. By artificially amplifying certain features, researchers were able to induce an "identity crisis" in the language model and change its behavior, which indicates that these internal features directly shape its external responses.
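
Amplifying a feature can be pictured as pushing the model's internal activation further along that feature's direction. The sketch below assumes the simplest form of this, adding a scaled copy of a unit-length feature direction to an activation vector. The function names, vectors, and scale are hypothetical, and the article does not describe how Anthropic performed the intervention.

```python
def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def feature_strength(activation, direction):
    """How strongly the activation points along a unit-length direction."""
    return dot(activation, direction)


def steer(activation, direction, scale):
    """Return a copy of the activation pushed along `direction` by `scale`."""
    return [a + scale * d for a, d in zip(activation, direction)]


# Hypothetical unit-length feature direction and activation vector.
direction = [0.6, 0.8, 0.0]
activation = [0.2, -0.1, 0.5]

steered = steer(activation, direction, scale=10.0)

print(feature_strength(activation, direction))
print(feature_strength(steered, direction))
```

Because the direction has unit length, the feature's strength rises by exactly the chosen scale while the components orthogonal to it are unchanged. In a real model, the steered activation would then be passed on to the later layers, which is where the change in behavior would appear.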

Moreover, the discovery of features related to potential misuse, bias, and unethical behaviors underscores the importance of interpretability in AI systems. By gaining a deeper understanding of these features, researchers can work towards enhancing the safety and ethical standards of AI models.

Anthropic's groundbreaking research marks a significant step towards demystifying the inner workings of AI models, paving the way for safer and more reliable artificial intelligence systems in the future.