Left-leaning political bias found in AI chatbots such as ChatGPT

Artificial intelligence (AI)-powered chatbots such as ChatGPT display a 'left-leaning' political bias that could exacerbate online 'echo chambers', according to a recent study published by the Centre for Policy Studies (CPS) think tank.

Examining Political Bias in AI-powered Chatbots

The report analyzed political bias in popular large language models (LLMs), AI systems that interpret human language and generate text. Examples include OpenAI's ChatGPT and Google's Gemini.

These systems can be trained to perform a range of tasks, such as generating text in response to queries or prompts. The CPS report, titled 'The Politics of AI', raises concerns about political bias in the text they produce.

Key Findings of the Study

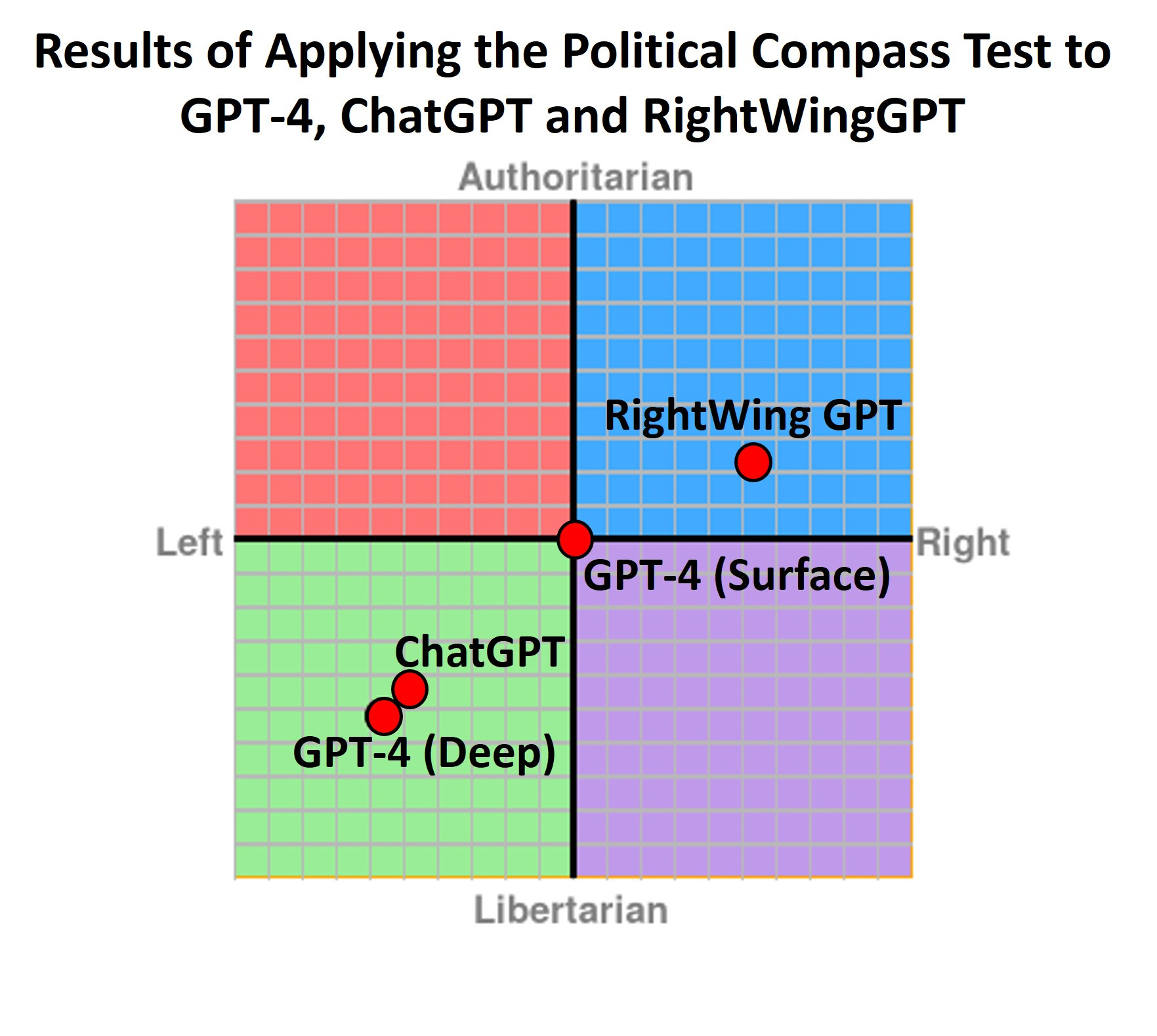

New Zealand-based academic David Rozado, who conducted the research, found that when LLMs were asked for policy recommendations across 20 policy areas, more than 80% of their responses leaned to the left of the political spectrum. He identified left-leaning bias in nearly every category of question for 23 of the 24 LLMs tested.

The only LLM whose responses did not lean left was one specifically engineered to be ideologically right-of-center, as Rozado's research notes.

Recommendations on issues such as housing, the environment, and civil rights tended to skew towards left-leaning positions. On housing, for instance, responses often centered on rent controls and gave little attention to increasing the supply of new homes.

On civil rights, terms such as 'hate speech' appeared frequently, while phrases related to 'freedom of speech' were notably absent. By contrast, the LLM designed to give right-of-center responses placed heavy emphasis on 'freedom' in its recommendations.

Implications of the Study

The study also found a gap in sentiment towards left- and right-leaning political parties across several European countries: LLM responses were more favorable towards left-leaning parties, further evidence of political bias in AI-generated content.

According to Mr. Rozado, LLMs that inadvertently spread biased results could reinforce existing online echo chambers and could be exploited by malicious actors to manipulate narratives.

Going forward, developers should prioritize neutrality in AI systems so that they support users' understanding and critical thinking rather than inadvertently promoting particular political agendas.

Matthew Feeney, head of tech and innovation at the CPS, urged caution when consuming AI-generated content and called for a focus on presenting information accurately and free from political bias.