New MIT report reveals energy costs of AI tools like ChatGPT...

You've probably heard the statistic that every search on ChatGPT uses the equivalent of a bottle of water. And while that's technically true, it misses some of the nuance. The MIT Technology Review dropped a massive report that reveals how the artificial intelligence industry uses energy, and exactly how much energy it costs to use a service like ChatGPT.

Energy Cost of AI Models

The report determined that large language models like ChatGPT use anywhere from 114 joules to 6,706 joules per response. That's the difference between running a microwave for one-tenth of a second and running it for eight seconds. The lower-energy models, according to the report, use less energy because they have fewer parameters, which also means their answers tend to be less accurate.

It makes sense, then, that AI-produced video takes a whole lot more energy. According to the MIT Technology Review's investigation, to create a five-second video, a newer AI model uses "about 3.4 million joules, more than 700 times the energy required to generate a high-quality image". That's the equivalent of running a microwave for over an hour.
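To see how the microwave comparisons follow from the joule figures, here is a rough Python sketch of the conversion. The 800-watt microwave rating is an assumption (the article does not state the wattage behind its comparisons), and `microwave_seconds` is a hypothetical helper name used only for illustration.

```python
# Assumed microwave power rating; the article does not state the wattage it used.
MICROWAVE_WATTS = 800.0


def microwave_seconds(joules, watts=MICROWAVE_WATTS):
    """Return how many seconds a microwave of the given wattage runs on `joules`."""
    # One watt is one joule per second, so time = energy / power.
    return joules / watts


# Energy figures quoted from the report.
figures = {
    "smallest text response": 114,
    "largest text response": 6_706,
    "five-second video": 3_400_000,
}

for label, joules in figures.items():
    seconds = microwave_seconds(joules)
    print(f"{label}: {joules:,} J = {seconds:,.1f} s ({seconds / 60:.1f} min)")
```

Under that assumption, the three figures work out to about 0.14 seconds, 8.4 seconds, and roughly 71 minutes, which lines up with the comparisons quoted above. A different wattage would shift the times proportionally.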

Energy Consumption in Real-world Scenarios

The researchers tallied up the amount of energy it would take if someone, hypothetically, asked an AI chatbot 15 questions and requested 10 images and three five-second videos. The answer? Roughly 2.9 kilowatt-hours of electricity, which is the equivalent of running a microwave for over 3.5 hours.
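The tally can be roughly reproduced from the per-item figures quoted earlier. The sketch below is a back-of-the-envelope estimate, not necessarily the researchers' own method: it assumes every question hits the largest text model (6,706 joules), and it uses an assumed upper bound for image energy derived from the "more than 700 times" comparison. The name `session_kwh` is hypothetical.

```python
JOULES_PER_KWH = 3_600_000  # 1 kWh = 1,000 W * 3,600 s

VIDEO_J = 3_400_000   # per five-second video, from the report
TEXT_J_MAX = 6_706    # per response for the largest text model, from the report

# Assumption: a video uses "more than 700 times" the energy of an image,
# so an image uses at most VIDEO_J / 700 joules. This is an upper bound only.
IMAGE_J_MAX = VIDEO_J / 700


def session_kwh(questions, images, videos):
    """Upper-bound estimate of a session's energy use in kilowatt-hours."""
    total_joules = (
        questions * TEXT_J_MAX
        + images * IMAGE_J_MAX
        + videos * VIDEO_J
    )
    return total_joules / JOULES_PER_KWH


total = session_kwh(questions=15, images=10, videos=3)
video_share = 3 * VIDEO_J / (total * JOULES_PER_KWH)
print(f"Estimated total: {total:.2f} kWh")
print(f"Share from video: {video_share:.1%}")
```

With these assumptions the estimate comes to a little under 2.9 kilowatt-hours, and the three videos account for nearly all of it. The text and image requests barely move the total.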

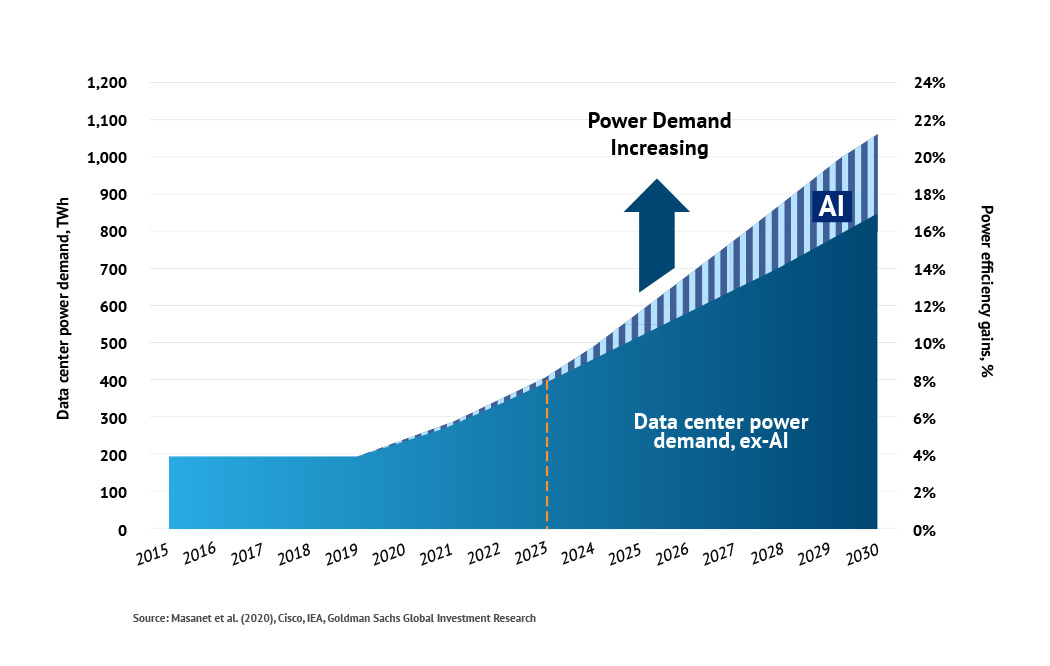

The investigation also examined the rising energy costs of the data centers that power the AI industry. The report found that prior to the advent of AI, the electricity usage of data centers was largely flat thanks to increased efficiency. However, due to energy-intensive AI technology, the energy consumed by data centers in the United States has doubled since 2017. Government data suggests that half the electricity used by data centers will go toward powering AI tools by 2028.

AI Integration Across Industries

This report arrives at a time when people are using generative AI for a wide range of purposes. Google, for example, announced at its annual I/O event that it's leaning into AI with fervor. Google Search, Gmail, Docs, and Meet are all seeing AI integrations. People are using AI to lead job interviews, create deepfakes of OnlyFans models, and cheat in college. And all of that, according to this in-depth new report, comes at a pretty high cost.