The Human Ai Institute® on LinkedIn: On the Future of Content in the ...

Agree & Join LinkedIn By clicking Continue to join or sign in, you agree to LinkedIn’s User Agreement, Privacy Policy, and Cookie Policy.

Great Read and Insights

Here is the preprint of "On the Future of Content in the Age of Artificial Intelligence: Some Implications and Directions", freely available @SSRN https://lnkd.in/e_EmrE5Y. To view or add a comment, sign in.

Full-blown Censorship by Meta / Facebook / Meta Facebook?

Who would have thought 🤔 Dr. Susie A., Divya Dwivedi. To view or add a comment, sign in.

A Promising United Nations In-depth Discussion on Macroeconomic Challenges and Global Governance Innovations

Join us for a conversation with Marc Uzan, Executive Director and Founder of the Reinventing Bretton Woods Committee.

- Date: 13 August 2024

- Time: 18:30–19:30 JST

- Location: UNU Headquarters, Tokyo

- Language: English

Marc Uzan will join UNU Head of Communications Kiki Bowman for an in-depth discussion on issues of economic fragmentation, macroeconomic policy challenges, and innovations in global governance. Learn more and register by 12 August 2024 ℹ️ https://buff.ly/3ysX35h. To view or add a comment, sign in.

"Nobody Knows How to Secure GenAI" 🤔

We are sharing those concerns! Experienced cyber security data scientist and data engineer. CISSP | Ex CIA, JP Morgan. GenAI | NLP | Python | SQL | Java | Speaker | Blackhat Instructor and O'Reilly Author | Classic car enthusiast.

I just returned from BlackHat in Vegas and am not a happy camper. Usually when I go to conferences I return inspired and full of new ideas. This year, I came back genuinely scared. I attended a series of talks about AI and here were the main points:

- Gen AI cannot be used to secure Gen AI.

- Gen AI is a very immature technology, and despite that companies are going all in without a clear understanding of the risks.

- Nobody and I mean nobody knows how to secure it.

In almost every presentation I heard, they all circled around to that last point: nobody really knows how to secure it. Let me explain the implications a bit more. Let's say you are using an LLM with RAG to summarize incoming emails. It is entirely possible for an attacker to craft an email with a malicious prompt to gain access to your infrastructure. Basically, any situation where a user is providing input to an LLM is opening up the possibility for a malicious actor to launch a remote code execution attack on your system. And there's no way to stop it. Happy Friday! To view or add a comment, sign in.

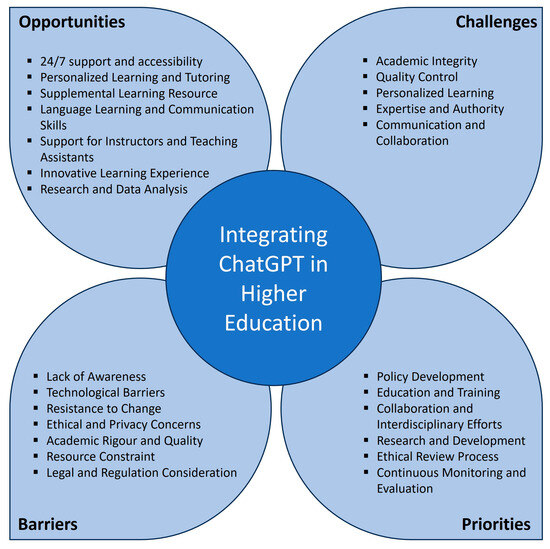

Image for Illustration Purposes

Great Paper on Terminological Choices Within the AI Policy Development Context (e.g. EU AI Act)

EU AI Act (architect & lead author) 📑 Paper 📑 hot off the press with my Joint Research Center colleagues David Fernández Llorca, Emilia Gómez and Ignacio Sánchez!

The goal of the paper is to offer an interdisciplinary analysis of some key concepts of the #AIAct around #GenAI that have emerged after the Commission proposal, notably, #generalpurposeAIsystem, #foundationmodel and #generativeAI. For context we also briefly touch upon the concept of #AIsystem.

The paper does not discuss the final legal text. Instead, it focuses on the previous intermediary versions of the law from the European Commission.