Not every AI prompt deserves multiple seconds of thinking: how ...

Join our daily and weekly newsletters for the latest updates and exclusive content on industry-leading AI coverage. Learn More

The Problem with Reasoning Models

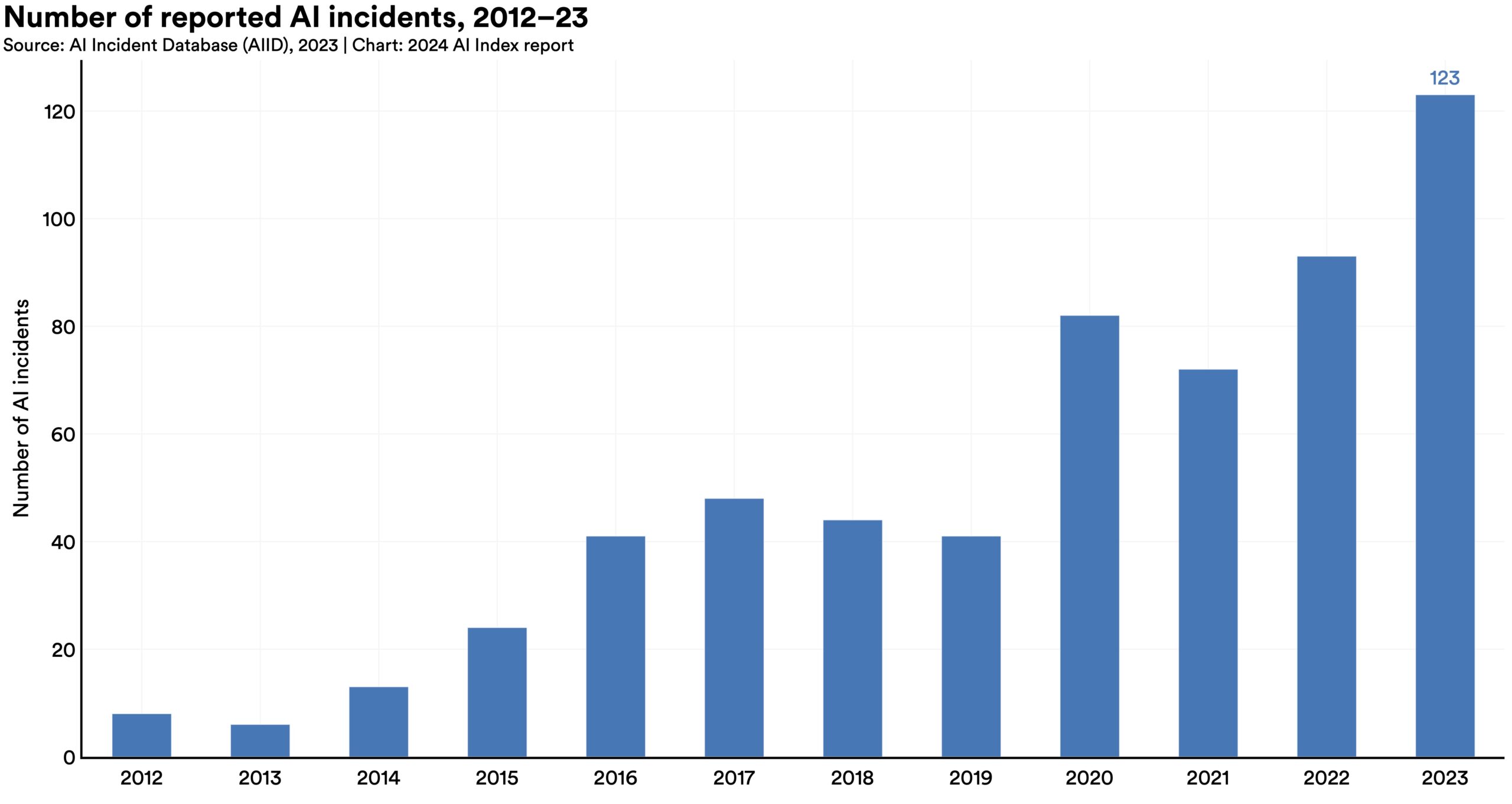

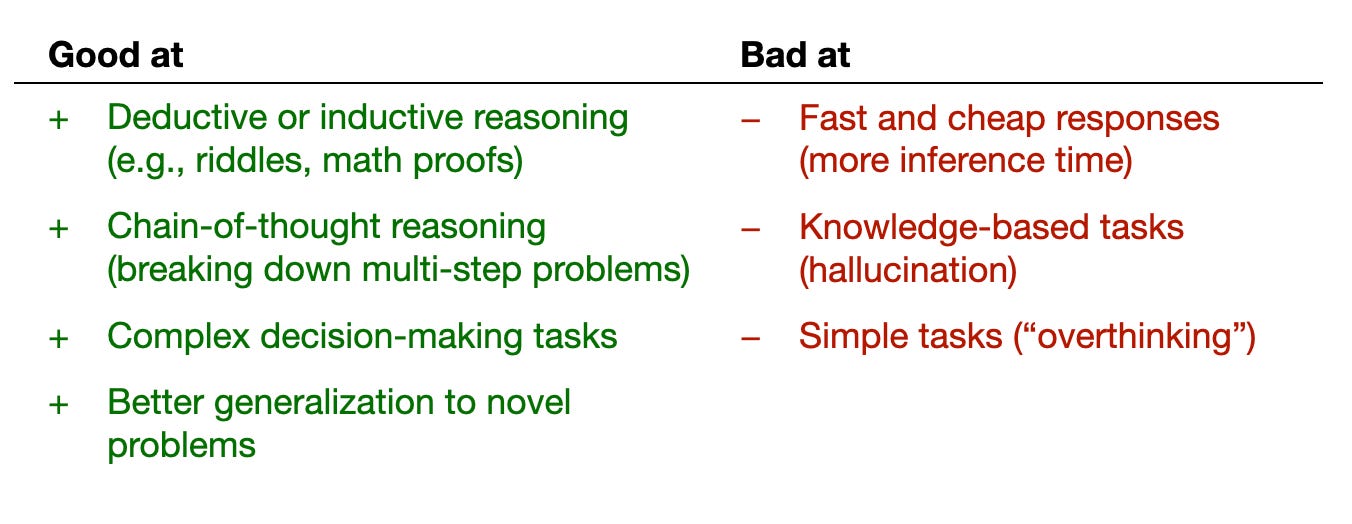

Reasoning models like OpenAI o1 and DeepSeek-R1 have a common issue - they tend to overthink simple questions, resulting in delays in providing answers. This overthinking behavior can be inefficient and costly in terms of resources.

Optimizing AI Response Time

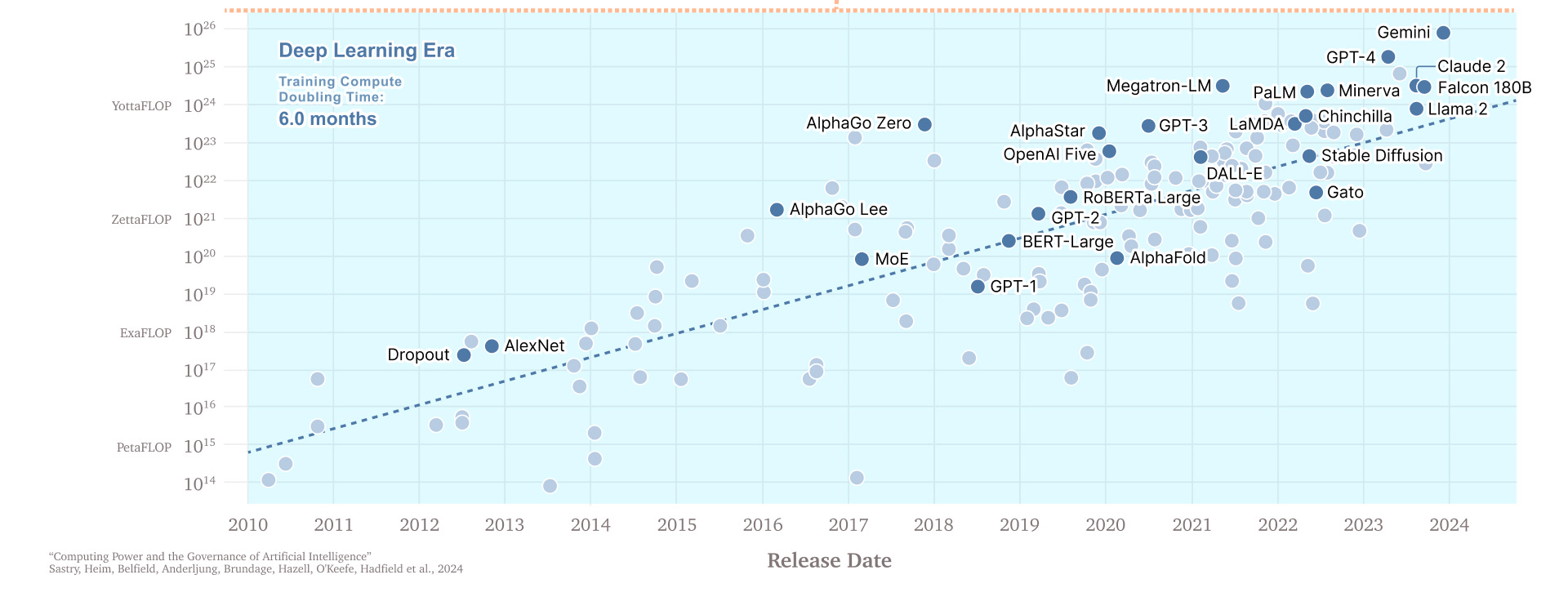

Researchers at Meta AI and the University of Illinois Chicago have introduced a new technique that aims to address this issue by training models to allocate inference budgets based on the complexity of the query. By doing so, the models can provide faster responses, reduce costs, and optimize the allocation of compute resources.

The Role of Large Language Models (LLMs)

Large language models (LLMs) can enhance their performance on reasoning tasks by generating longer reasoning chains, often referred to as "chain-of-thought" (CoT). This success has led to the development of various inference-time scaling techniques that encourage models to contemplate problems more extensively, generate multiple answers, and select the most suitable one.

Improving Efficiency in Reasoning Models

One common approach in reasoning models is employing "majority voting" (MV) by generating multiple answers and selecting the one that appears most frequently. However, this method can lead to unnecessary resource consumption.

The introduction of "sequential voting" (SV) and "adaptive sequential voting" (ASV) techniques aims to make reasoning models more efficient in their responses. SV allows the model to halt the reasoning process once a certain number of similar answers are generated, thus saving time and resources. ASV, on the other hand, prompts the model to generate multiple answers only for complex problems, streamlining the process for simpler queries.

Advancements in AI Models

Experiments have shown that IBPO enhances the efficiency of reasoning models, outperforming traditional approaches when given a fixed inference budget. The development of such techniques comes at a crucial time when AI models are facing limitations in terms of training data quality and efficiency.

Reinforcement learning presents a promising solution, allowing models to self-correct and optimize responses based on objectives, unlike traditional supervised fine-tuning methods. This adaptive approach has proven successful, as seen in the performance of models like DeepSeek-R1.

Scaling: The State of Play in AI - by Ethan Mollick

The continuous evolution of AI models towards more efficient and effective reasoning mechanisms showcases the potential for further advancements in the field of artificial intelligence.

Understanding Reasoning LLMs - by Sebastian Raschka, PhD

If you want to delve deeper into the realm of generative AI and its practical applications, be sure to check out VB Daily for valuable insights and updates.

Thanks for subscribing. Check out more VB newsletters here.