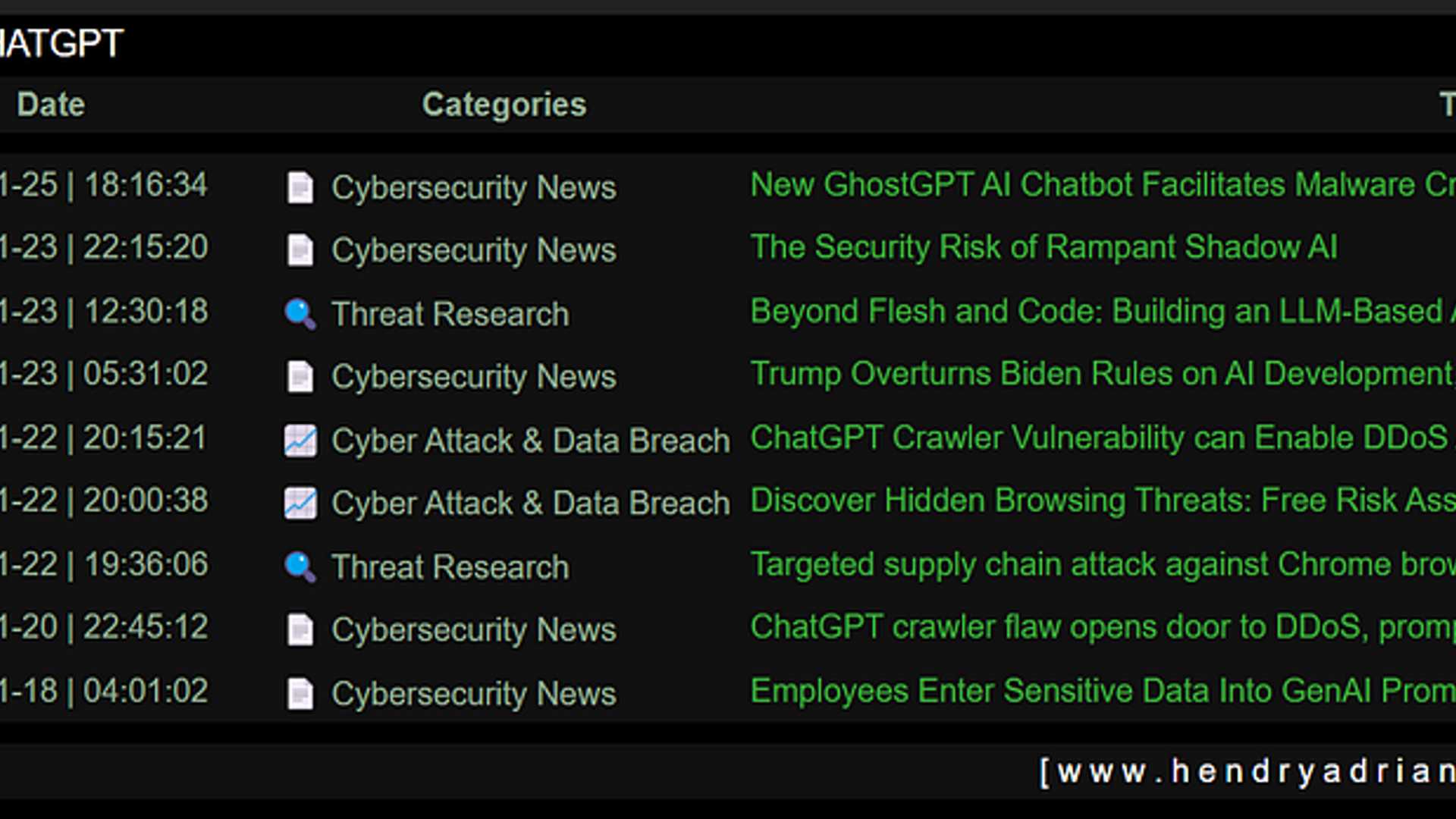

ChatGPT and Cybersecurity: Exploring the Dual-Edged Sword of AI Innovation

The rapid evolution of AI, particularly tools like ChatGPT, has transformed various industries but has also brought forth significant cybersecurity risks. From malicious chatbots to regulatory challenges, the convergence of AI and cybersecurity necessitates immediate attention.

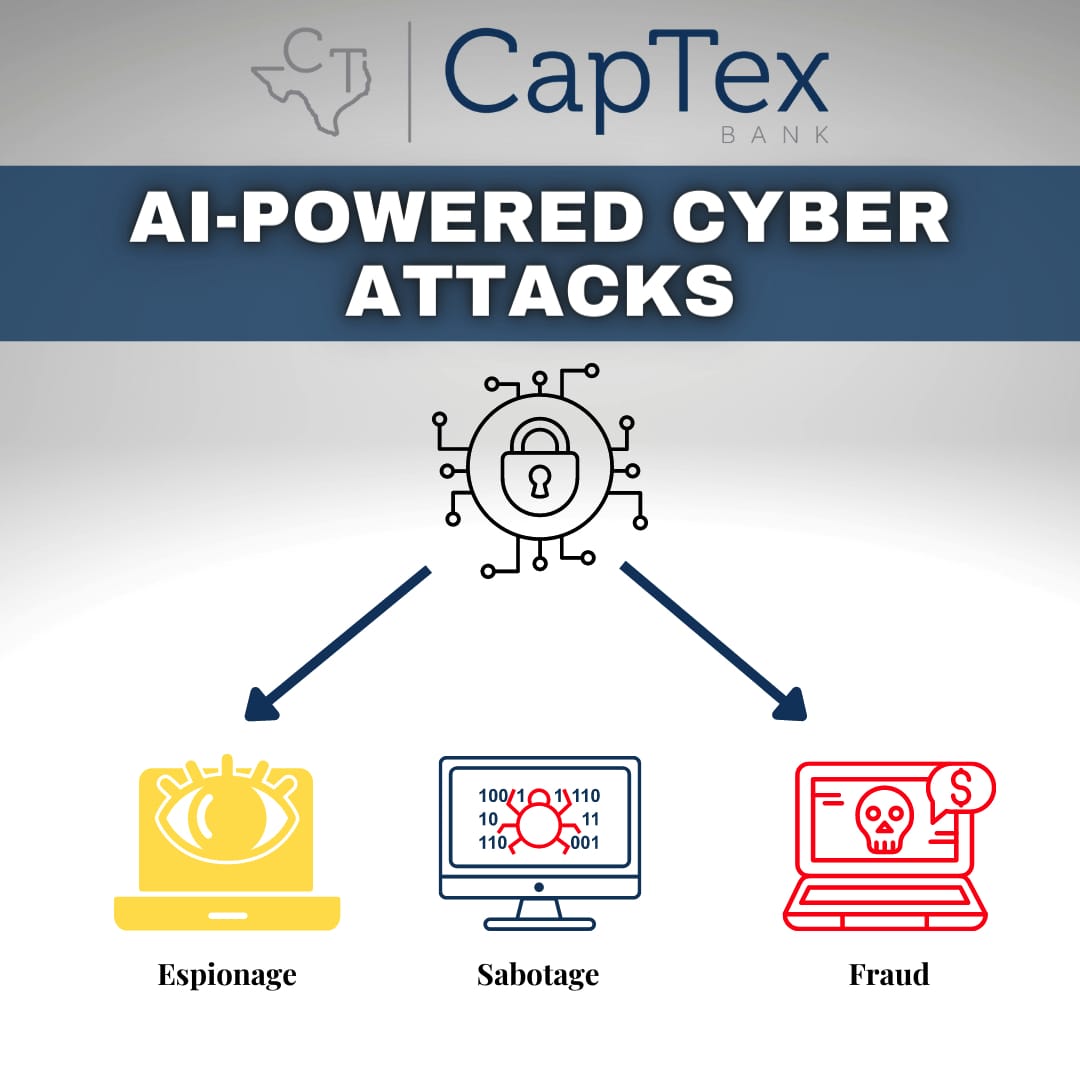

Weaponization of AI by Cybercriminals

Cybercriminals are increasingly leveraging AI technologies such as GhostGPT, a derivative of ChatGPT available on Telegram. GhostGPT streamlines the creation of malware, phishing attacks, and code generation, catering to less experienced attackers. This trend is exemplified by WormGPT, a forerunner in the democratization of cybercrime with AI.

Risks of "Shadow AI" in Corporate Environments

Unauthorized usage of AI tools, also known as "shadow AI," is on the rise among employees, operating beyond the purview of organizational oversight. Shockingly, a substantial 74% of ChatGPT utilization originates from personal accounts, potentially exposing sensitive company data, such as healthcare records and financial information, to external platforms.

Impact of Large Language Models (LLMs) on Cyber Attacks

Large Language Models are reshaping cyber assaults by automating elements of the Cyber Kill Chain. The recent revocation of Biden's 2023 AI executive order, which mandated safety standards for LLM developers, has left critical infrastructure susceptible. Private entities like OpenAI now face reduced accountability, potentially weakening defenses against AI-powered cyber threats.

Vulnerabilities and Exploits

Researchers have discovered vulnerabilities in ChatGPT's API that can facilitate Distributed Denial of Service (DDoS) attacks and prompt injections. In a phishing campaign in December 2024, Chrome extension developers were targeted, leading to the injection of malware into legitimate tools like Cyberhaven's extension. Attackers were able to harvest sensitive information.

Mitigating AI-Driven Cybersecurity Risks

While the potential of AI is undeniable, its misapplication poses significant existential threats. By prioritizing robust security frameworks, advocating for stringent regulations, and fostering a culture of cybersecurity awareness, organizations can harness the capabilities of AI without succumbing to its malevolent applications.

Sources: Compiled from cybersecurity reports and threat analyses (January 2025).