OpenAI's Insanely Powerful Text-to-Video Model 'Sora' is Here

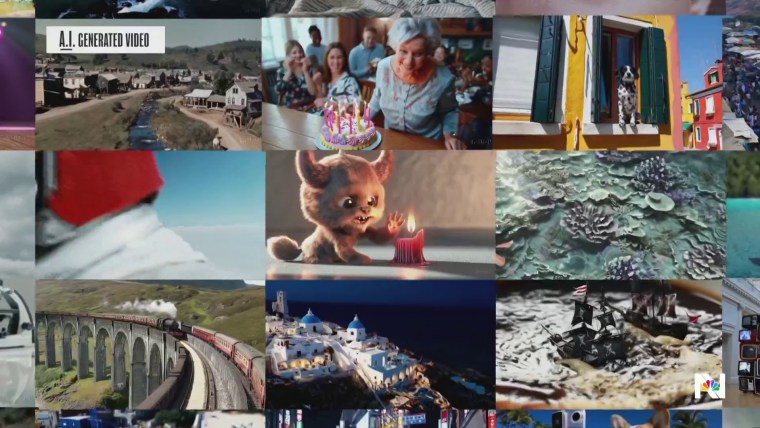

A look at Sora, OpenAI’s new text-to-video model that can create highly detailed scenes from simple text instructions.

Whelp, that’s it, folks! In what has been the least surprising announcement ever, OpenAI has revealed their latest text-to-video model, and… well, it’s just about everything we’ve ever dreamed (or feared) about the coming AI video revolution.

Just announced today, OpenAI’s new model is called ‘Sora’, and it promises to create realistic and imaginative scenes from simple text instructions. And when we say scenes, we mean highly detailed scenes with complex camera motion and multiple characters with vibrant emotions.

Exciting Features of Sora

Let’s check out what’s been shared so far, as well as delve a bit into how this might pretty much blow the doors of generative AI video wide open in the film and video industry.

Going by announcements shared on X today by the likes of OpenAI and Sam Altman, Sora is officially here. And man, does it look good. OpenAI has already shared dozens of examples of Sora at work, all of which look hyper-realistic and very intricate. And this is just the start.

Sora is capable of creating videos up to 60 seconds long, which is insane and much longer than anything its competitors can produce. The sophistication of the scenes also blows all of the other generative AI video models out of the water.

Don’t believe us? Take a look for yourself.

OpenAI has also announced that Sora is out and available now, although it is currently only usable by red teamers to assess critical areas for harm or risks. OpenAI is also granting access to a select group of visual artists, designers, and filmmakers to give feedback on advancing the model even further.

Revolutionizing the AI Video Industry

If you’ve used any of OpenAI’s models in the past, you should be familiar with how advanced their AI is at understanding language. And since Sora is a text-based generative AI, your text prompts will be a huge part of the generative process.

Sora is capable of generating complex scenes with multiple characters, specific types of motion, and accurate details of both the subjects and backgrounds. As OpenAI puts it, “the model understands not only what the user has asked for in the prompt, but also how those things exist in the physical world.”

That’s the big question here. As Sora is live now for select users, we’d assume it’s only a short time before it’s open to everyone. To their credit, you could say, OpenAI does report that they’ll be taking “several important safety steps ahead of making Sora available” and are attempting to take certain precautions to keep this technology from being used to create misleading content.

CINEFLARES at NAB 2024

A look at how CINEFLARES is aiming to make lens flares a perfect art form at the NAB 2024 Show.

While we’ve given a lot of coverage this year to new cameras, video editing app updates, and the ever-changing world of AI in video, the working cinematographers are still out there grinding with whatever cameras and rigs are needed for the various shoots they’re tasked to take on.

If you’ve ever wanted to better master how to use lens flares in your shots, though, one of our last stops at the NAB Show this year showcased a new app that aims to help DPs master their lens flares once and for all. Let’s look at how CINEFLARES aims to offer a resource for creatives to make the most informed decisions in support of their artistic visions.

Check out our full interview with the team at NAB below.

In a conversation with our video hosts from Cinematography for Actors at the NAB Show this year, we got a first-hand demo of how the new CINEFLARES website aims to give users access to a comprehensive collection of all of the cinema lens choices available—including vintage, anamorphic, large format, and more.

If you’re in search of a clean, neutral lens for VFX plates, or one with a specific flare pattern reminiscent of a certain period, this program is here to instantly reveal these lens characteristics and to help you make informed decisions.

Check out our full demo of CINEFLARES and conversation with the team below.

No Film School's coverage of NAB 2024 is brought to you by Blackmagic Design.