Meta's Latest Open AI Model Is Its Biggest Yet

Much of today's tech news revolves around AI and its integration into the industry. As the technology progresses, AI models keep growing more sophisticated and more human-like in their output. Meta has now introduced its latest open-source model, "Llama 3.1," which is poised to make a significant impact on the AI landscape.

Advanced Features of Llama 3.1

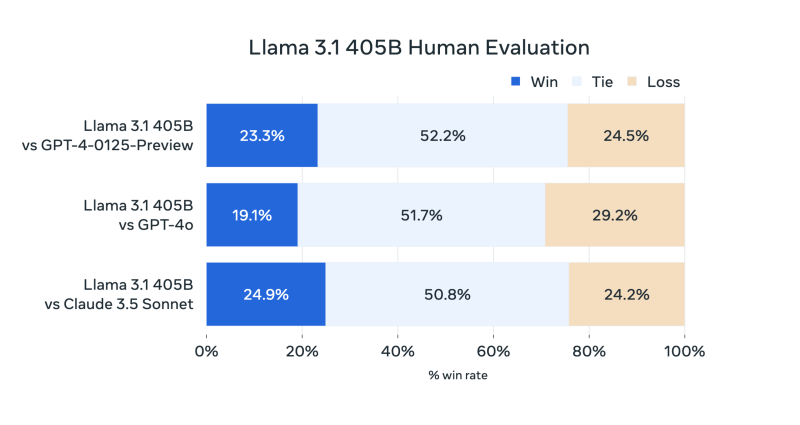

The largest version of Llama 3.1 has 405 billion parameters, making it one of the largest openly available AI models today. The higher parameter count increases the model's capacity, and Meta reports that it outperforms its predecessors and rivals competing models on key benchmarks.
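To put the 405 billion figure in perspective, the arithmetic below estimates how much memory the weights alone would occupy at different numeric precisions. It is a minimal sketch, not Meta's published sizing: the bytes-per-parameter table, the 80 GB accelerator size, and the function names are all assumptions made for illustration, and the estimate ignores activations, the key-value cache, and runtime overhead.

```python
import math

# Assumed storage cost per parameter for common numeric formats (weights only).
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "fp8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Memory needed to store the weights, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def min_gpus_for_weights(num_params: float, precision: str,
                         gpu_memory_gb: float = 80.0) -> int:
    """Lower bound on accelerators needed just to hold the weights.

    gpu_memory_gb defaults to 80, an assumed per-device memory size.
    """
    return math.ceil(weight_memory_gb(num_params, precision) / gpu_memory_gb)


if __name__ == "__main__":
    params = 405e9  # parameter count quoted in the article
    for precision in BYTES_PER_PARAM:
        gb = weight_memory_gb(params, precision)
        gpus = min_gpus_for_weights(params, precision)
        print(f"{precision}: {gb:.1f} GB of weights, at least {gpus} x 80 GB devices")
```

Under these assumptions, 16-bit weights come to about 810 GB, which is why a model of this size is typically served across several accelerators or in a lower-precision format.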

The model supports eight languages (English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai) and has a context length of 128K tokens. This multilingual support, combined with the large context window, lets the model give more coherent and contextually relevant responses over long inputs, improving the user experience across different applications.
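A rough sense of what a 128K-token window holds can be had by converting tokens to words. The sketch below assumes a ratio of about 0.75 English words per token, a common rule of thumb rather than a figure from Meta, and treats 128K as 128 × 1024 tokens. The function names are hypothetical, and the ratio will differ for other languages such as Hindi or Thai.

```python
import math

WORDS_PER_TOKEN = 0.75       # assumption: rule of thumb for English text
CONTEXT_TOKENS = 128 * 1024  # assumption: "128K" read as 131,072 tokens


def approx_words(num_tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Approximate number of words represented by a given number of tokens."""
    return round(num_tokens * words_per_token)


def fits_in_context(num_words: int,
                    context_tokens: int = CONTEXT_TOKENS,
                    words_per_token: float = WORDS_PER_TOKEN) -> bool:
    """Estimate whether a text of num_words fits in the context window."""
    needed_tokens = math.ceil(num_words / words_per_token)
    return needed_tokens <= context_tokens


if __name__ == "__main__":
    print("Approximate words in one context window:", approx_words(CONTEXT_TOKENS))
    print("90,000-word manuscript fits:", fits_in_context(90_000))
    print("100,000-word manuscript fits:", fits_in_context(100_000))
```

An estimate like this is only a planning aid; an exact count requires running the text through the model's own tokenizer.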

Open-Source Accessibility

Meta's decision to release Llama 3.1 as an open-source model signals a commitment to fostering innovation and collaboration within the AI community. By giving developers access to the model weights under its community license, Meta aims to facilitate the development of new applications and drive research forward without the constraints of closed, proprietary systems.

Training and Development

Llama 3.1 was trained on 16,000 Nvidia H100 GPUs using a dataset of 15 trillion tokens, which is on the order of 11 trillion words at the common rule of thumb of about 0.75 English words per token. Meta applied stringent quality assurance and data filtering, along with improved data curation, to raise the model's performance.

Additionally, AI companies increasingly use synthetic (artificially generated) data to refine their models and improve overall efficiency.
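The training figures above can be turned into a back-of-the-envelope compute estimate. The sketch below uses the widely cited approximation that training a dense transformer costs about 6 floating-point operations per parameter per token. That rule, the assumed peak throughput of roughly 989 teraFLOPS per H100 for dense 16-bit math, and the 40% utilization figure are all assumptions for illustration, not numbers from the article, and the function names are hypothetical.

```python
def training_flops(num_params: float, num_tokens: float) -> float:
    """Approximate training compute using the 6 * N * D rule of thumb."""
    return 6.0 * num_params * num_tokens


def wall_clock_days(total_flops: float, num_gpus: int,
                    peak_flops_per_gpu: float, utilization: float) -> float:
    """Idealized training time in days at a given sustained utilization."""
    sustained_flops_per_second = num_gpus * peak_flops_per_gpu * utilization
    return total_flops / sustained_flops_per_second / 86_400  # seconds per day


if __name__ == "__main__":
    flops = training_flops(405e9, 15e12)  # parameters and tokens from the article
    days = wall_clock_days(
        flops,
        num_gpus=16_000,             # GPU count from the article
        peak_flops_per_gpu=989e12,   # assumed dense 16-bit peak for an H100
        utilization=0.4,             # assumed fraction of peak actually sustained
    )
    print(f"Estimated training compute: {flops:.3e} FLOPs")
    print(f"Idealized wall-clock time: {days:.0f} days")
```

The result is an order-of-magnitude estimate only. Real training runs include restarts, evaluation, and communication overhead that this sketch does not model.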

Future Enhancements

While Llama 3.1 is currently a text-only model, Meta is working on multimodal capabilities for future versions. These upcoming iterations may be able to recognize and generate images, video, and speech, expanding the model's potential applications across industries.

Environmental Considerations

Despite its innovative features, the large scale of Llama 3.1 raises concerns about computational resource demands and long-term environmental implications. Meta acknowledges these challenges and is dedicated to enhancing the model's efficiency to mitigate potential costs and environmental effects.

Conclusion

Meta's release of Llama 3.1 marks a significant milestone in the realm of AI technology. With its expansive scale, multilingual support, and open-source accessibility, Llama 3.1 has the potential to drive innovation and collaboration in AI development. As Meta continues to refine and expand the model's capabilities, the future of AI appears promising, offering numerous benefits for diverse industries and applications.