How Google makes custom chips used to train Apple AI models and...

Inside a sprawling lab at Google headquarters in Mountain View, California, hundreds of server racks hum across several aisles, performing tasks far less ubiquitous than running the world's dominant search engine or executing workloads for Google Cloud's millions of customers. Instead, they're running tests on Google's own microchips, called Tensor Processing Units, or TPUs. Originally built for internal workloads, Google's TPUs have been available to cloud customers since 2018. In July, Apple revealed it uses TPUs to train AI models underpinning Apple Intelligence. Google also relies on TPUs to train and run its Gemini chatbot.

Google's Custom Chips

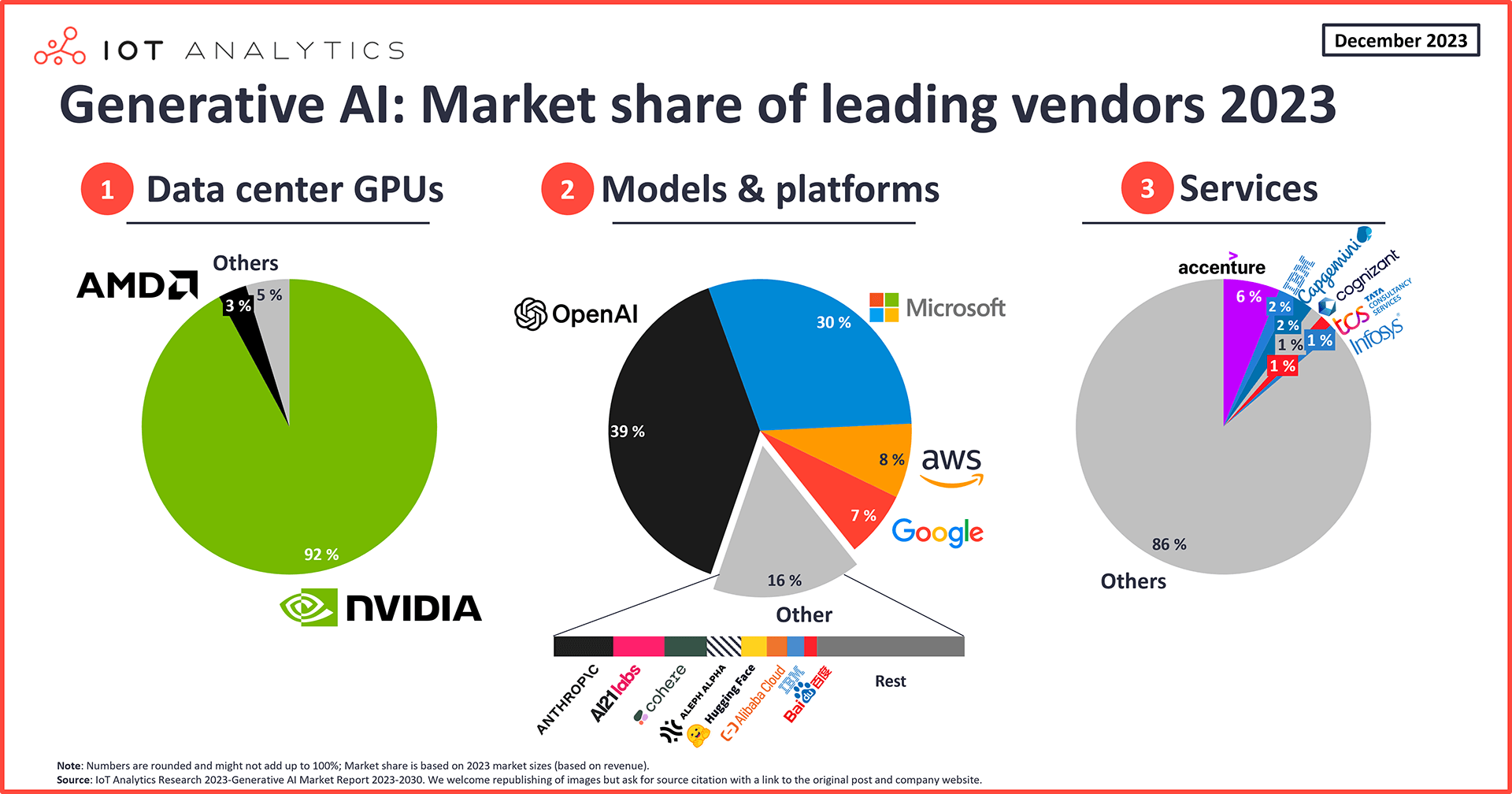

"The world sort of has this fundamental belief that all AI, large language models, are being trained on Nvidia, and of course Nvidia has the lion's share of training volume. But Google took its own path here," said Futurum Group CEO Daniel Newman. He's been covering Google's custom cloud chips since they launched in 2015. Google was the first cloud provider to make custom AI chips.

Three years later, Amazon Web Services announced its first cloud AI chip, Inferentia. Microsoft's first custom AI chip, Maia, wasn't announced until the end of 2023.

Challenges and Criticisms

Being first in AI chips hasn't translated to a top spot in the overall rat race of generative AI. Google has faced criticism for botched product releases, and Gemini came out more than a year after OpenAI's ChatGPT.

Advancements in AI

Google Cloud, however, has gained momentum due in part to AI offerings. Google parent company Alphabet reported cloud revenue rose 29% in the most recent quarter, surpassing $10 billion in quarterly revenues for the first time. "The AI cloud era has completely reordered the way companies are seen, and this silicon differentiation, the TPU itself, may be one of the biggest reasons that Google went from the third cloud to being seen truly on parity, and in some eyes, maybe even ahead of the other two clouds for its AI prowess," Newman said.

Custom Chip Development

In July, CNBC got the first on-camera tour of Google's chip lab and sat down with the head of custom cloud chips, Amin Vahdat. He was already at Google when it first toyed with the idea of making chips in 2014. "It all started with a simple but powerful thought experiment," Vahdat said.

"A number of leads at the company asked the question: What would happen if Google users wanted to interact with Google via voice for just 30 seconds a day? And how much compute power would we need to support our users?" The group determined Google would need to double the number of computers in its data centers.

So they looked for a better solution. "We realized that we could build custom hardware, not general purpose hardware, but custom hardware — Tensor Processing Units in this case — to support that much, much more efficiently. In fact, a factor of 100 more efficiently than it would have been otherwise," Vahdat said.

Custom Chip Implementation

Google data centers still rely on general-purpose central processing units, or CPUs, and Nvidia's graphics processing units, or GPUs. Google's TPUs are a different type of chip called an application-specific integrated circuit, or ASIC, which is custom-built for a specific purpose. The TPU is focused on AI. Google makes another ASIC focused on video called a Video Coding Unit.

Market Dominance

The TPU, however, is what set Google apart. It was the first of its kind when it launched in 2015. Google TPUs still dominate among custom cloud AI accelerators, with 58% of the market share, according to The Futurum Group. Google coined the term based on the algebraic term "tensor," referring to the large-scale matrix multiplications that happen rapidly for advanced AI applications.
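For readers curious what a matrix multiplication involves, here is a minimal sketch in plain Python. It is an illustration only, not Google's code or a description of how a TPU is programmed; the function and variable names (`matmul`, `multiply_accumulate_count`, `inputs`, `weights`) are made up for this example.

```python
# Illustrative sketch only: plain-Python matrix multiplication, the kind of
# operation that AI accelerators are built to speed up.
# All names here are hypothetical and chosen for this example.

def matmul(a, b):
    """Multiply an m x k matrix by a k x n matrix, both given as lists of rows."""
    m, k, n = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions must match")
    out = [[0] * n for _ in range(m)]
    for i in range(m):
        for j in range(n):
            # Each output entry is a sum of k multiply-accumulate steps.
            for p in range(k):
                out[i][j] += a[i][p] * b[p][j]
    return out


def multiply_accumulate_count(m, k, n):
    """Number of multiply-accumulate steps the loops above perform."""
    return m * k * n


inputs = [[1, 2], [3, 4]]    # 2 x 2 matrix
weights = [[5, 6], [7, 8]]   # 2 x 2 matrix
result = matmul(inputs, weights)
print(result)
print(multiply_accumulate_count(4096, 4096, 4096))
```

Multiplying two 4096-by-4096 matrices this way takes 4096 × 4096 × 4096 multiply-accumulate steps, roughly 68.7 billion. That arithmetic gives a sense of why chips dedicated to this one operation can pay off at scale.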

Future of AI Chips

With the second TPU release in 2018, Google expanded the focus from inference to training and made the chips available for its cloud customers to run workloads, alongside market-leading chips such as Nvidia's GPUs.

"If you're using GPUs, they're more programmable, they're more flexible. But they've been in tight supply," said Stacy Rasgon, senior analyst covering semiconductors at Bernstein Research. The AI boom has sent Nvidia's stock through the roof, catapulting the chipmaker to a $3 trillion market cap in June, surpassing Alphabet and jockeying with Apple and Microsoft for position as the world's most valuable public company.

Partnerships and Collaborations

Now that we know Apple's using Google's TPUs to train its AI models, the real test will come as those full AI features roll out on iPhones and Macs next year.