Introducing Gemma 3: Setting New Benchmarks for Open Compact Models

Google has released Gemma 3, the latest generation of its open-model family, which aims to set new benchmarks among compact models. Gemma 3 builds on its predecessors and adds features frequently requested by its user community, including multimodal support, longer context windows, and improved multilingual capabilities.

Key Features of Gemma 3

Gemma 3 comes in four sizes (1B, 4B, 12B, and 27B), so users can choose the model that best fits their needs. Each size is available both as a pre-trained model for fine-tuning and as a general-purpose instruction-tuned version ready for use in applications.

A headline feature of Gemma 3 is vision-language input: the model accepts images alongside text and produces text output. This lets applications combine images with text for tasks such as image analysis, object recognition, and answering questions about visual content.

Images are processed by an integrated vision encoder based on SigLIP. The same encoder is shared across the multimodal sizes (4B, 12B, and 27B), while the 1B model is text-only. Gemma 3 also handles high-resolution and non-square images through an adaptive window algorithm.
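
The article does not spell out how the adaptive window algorithm works. The sketch below is a hypothetical illustration of the general idea, assuming a non-square image is split into equal, non-overlapping crops along its longer side, with each crop then resized to the encoder's fixed input resolution (896×896 according to the Gemma 3 model card). The function name `adaptive_windows` and the `max_crops` cap are illustrative assumptions, not Gemma 3's actual implementation.

```python
import math
from typing import List, Tuple

# (left, top, right, bottom) pixel coordinates of one crop.
Box = Tuple[int, int, int, int]


def adaptive_windows(width: int, height: int, max_crops: int = 4) -> List[Box]:
    """Split an image into equal, non-overlapping crops along its longer side.

    Hypothetical illustration of adaptive windowing; not Gemma 3's actual code.
    Each returned crop would then be resized to the encoder's input resolution.
    """
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    if max_crops < 1:
        raise ValueError("max_crops must be at least 1")
    long_side = max(width, height)
    short_side = min(width, height)
    # Enough crops that each spans at most short_side along the long axis,
    # until max_crops caps the count (bounding encoder passes and image tokens).
    n = min(max_crops, math.ceil(long_side / short_side))
    boxes: List[Box] = []
    for i in range(n):
        # Integer boundaries that tile the long side exactly, with no gaps or overlap.
        start = i * long_side // n
        end = (i + 1) * long_side // n
        if width >= height:
            boxes.append((start, 0, end, height))  # side-by-side vertical strips
        else:
            boxes.append((0, start, width, end))  # stacked horizontal strips
    return boxes


print(adaptive_windows(1920, 1080))  # → [(0, 0, 960, 1080), (960, 0, 1920, 1080)]
```

A real preprocessor would likely also decide when cropping is worthwhile at all (for example, skipping it for small or near-square images); that logic is omitted here to keep the sketch short.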

Gemma 3 supports context windows of up to 128k tokens (32k for the 1B model) and more than 140 languages. It also improves on earlier Gemma models in math, reasoning, coding, and structured output.

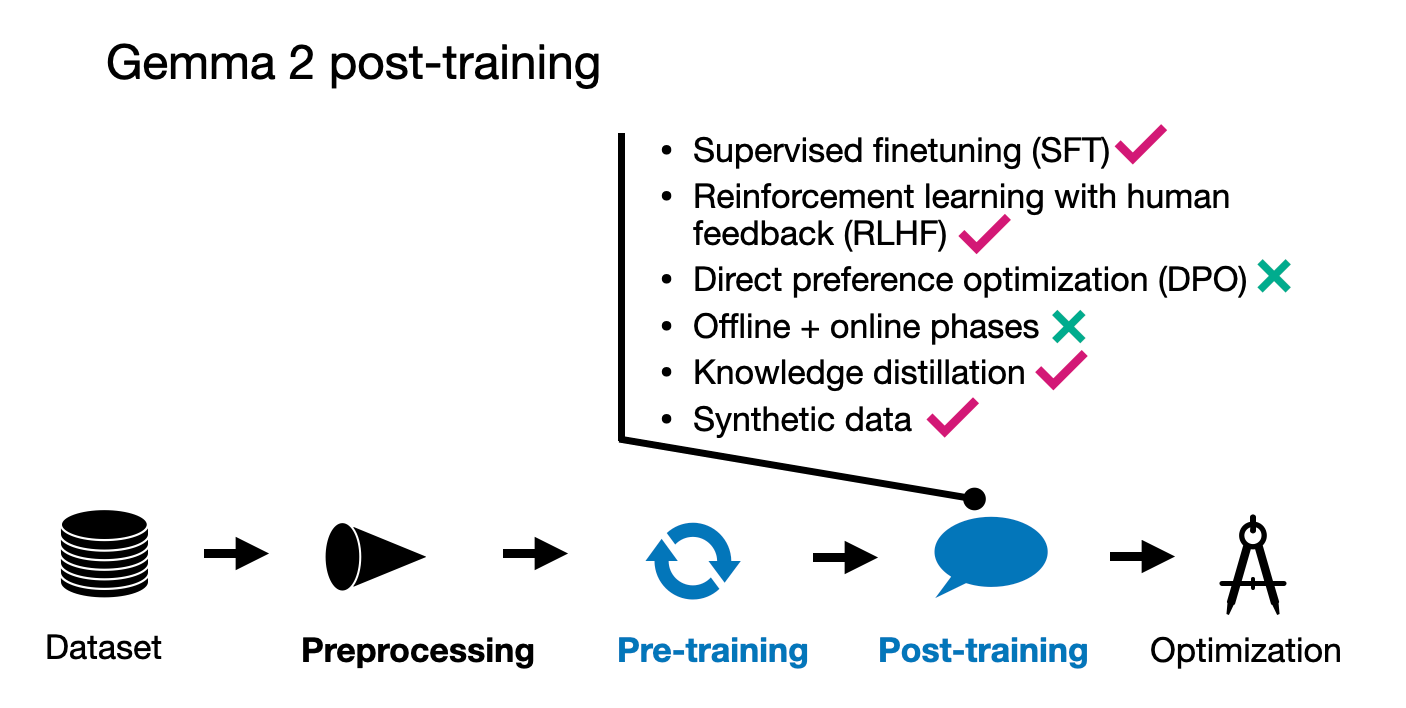

Training Process and Optimization

Gemma 3 was trained with a pipeline that combines several optimization techniques, including distillation, reinforcement learning, and model merging.
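
The article names distillation but not Google's exact recipe. As a generic illustration of the technique, the sketch below computes a standard knowledge-distillation loss: a smaller student model is trained to match a larger teacher's temperature-softened output distribution by minimizing the KL divergence between them. The function names and the default temperature of 2.0 are illustrative assumptions; Gemma 3's actual training pipeline may differ.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax, shifted by the max logit for numerical stability."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    student_logits: Sequence[float],
    teacher_logits: Sequence[float],
    temperature: float = 2.0,
) -> float:
    """KL(teacher || student) between temperature-softened distributions.

    Generic knowledge-distillation loss for one token position; not Gemma 3's recipe.
    """
    if len(student_logits) != len(teacher_logits):
        raise ValueError("student and teacher must share a vocabulary")
    p = softmax(teacher_logits, temperature)  # teacher's soft targets
    q = softmax(student_logits, temperature)  # student's predicted distribution
    # Terms with p_i == 0 contribute nothing to the KL divergence.
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    # Multiplying by T^2 keeps gradient scale comparable across temperatures.
    return kl * temperature ** 2


teacher = [3.0, 1.0, -2.0]
print(distillation_loss(teacher, teacher))  # identical outputs → 0.0
print(distillation_loss([0.0, 0.0, 0.0], teacher) > 0)  # → True
```

In a full training loop this per-position loss would be averaged over the sequence and batch, and often mixed with the ordinary next-token cross-entropy loss.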

Depending on model size, training used between 2T and 14T tokens and was run on Google TPUs with the JAX framework. Gemma 3 also introduces a new tokenizer designed to improve multilingual performance.

On LMArena, Gemma 3 (27B) scored 1338, a leading result among compact open models. Google has also released ShieldGemma 2, an image-safety classifier built on Gemma 3 that labels synthetic and natural images across key safety categories.

Community Contributions and Impact

Gemma 3 also reflects the work of the Gemma community, whose contributions range from new fine-tuning techniques to novel applications. Examples include SimPO, a fine-tuning technique from Princeton NLP, and OmniAudio from Nexa AI, both of which show how adaptable Gemma models are across different domains.

With more than 100 million downloads and many community-created variants, Gemma continues to extend its reach in the open compact-model space.
