Google Unveils Gemini 2.0: Transforming AI with Multimodal Capabilities

Experience the future of AI as Google’s Gemini 2.0 redefines human-agent interaction with faster performance, real-time collaboration, and groundbreaking tools for everyday life.

A recent article published on Google's "The Keyword" website described Gemini 2.0, an advanced multimodal artificial intelligence (AI) model designed for the agentic era. It improved reasoning, multimodal input/output, and tool usage. It supported tasks like research assistance, real-time multimodal interactions, and advanced AI agents such as Project Astra and Mariner. This launch marks a transformative shift in AI's ability to anticipate, reason, and act under human supervision, a cornerstone of the agentic era.

Advancements in AI Models

Google's past AI work showcased Gemini 1.0 and 1.5 as pioneering multimodal models that advanced understanding of text, video, images, audio, and code. These models supported long-context processing, reimagined key products, and empowered developers. Efforts in 2023 focused on building agentic models capable of advanced reasoning, proactive action, and multimodal outputs. Gemini 2.0 represented a leap forward, integrating these innovations to enable advanced AI agents and enhanced tools like deep research and AI overviews. These advancements are part of Google’s broader goal to create universal AI assistants capable of handling increasingly complex tasks.

Enhanced Performance and Capabilities

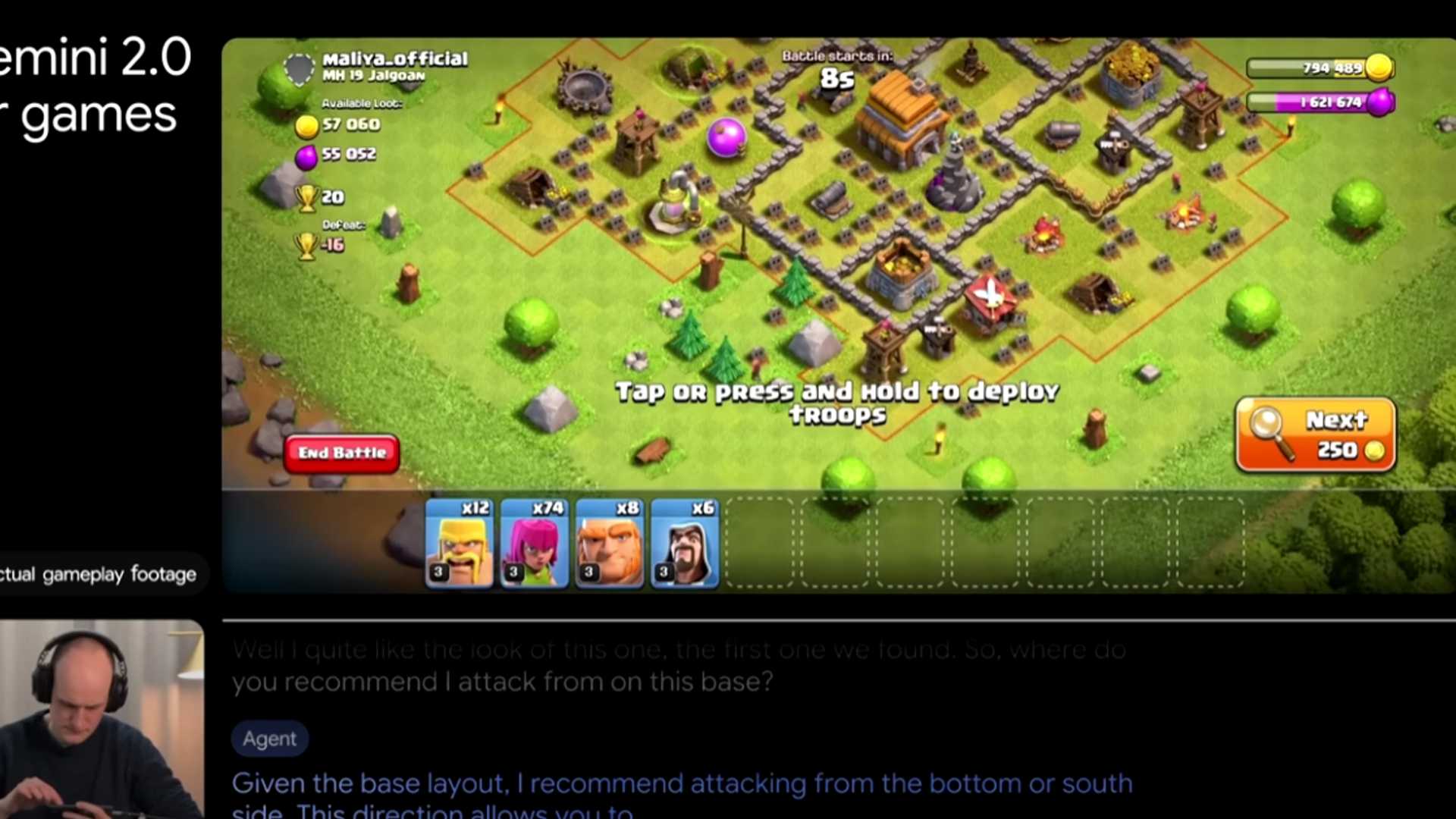

Gemini 2.0 Flash builds on the success of its predecessor, 1.5 Flash. It offers enhanced performance at twice the speed while outperforming 1.5 Pro on key benchmarks. It introduces new capabilities, including multimodal outputs like natively generated images with text and multilingual, steerable text-to-speech. Additionally, it supports tools such as Google Search, code execution, and user-defined functions, making it a robust choice for developers. The model’s real-time audio and video streaming capabilities through the Multimodal Live API allow for the development of dynamic, interactive applications.

Availability and Future Applications

The experimental model is available through the Gemini application programming interface (API) in Google AI Studio and Vertex AI. This API enables early-access partners to utilize advanced features such as text-to-speech and native image generation. A new multimodal live API has also been introduced to allow real-time audio and video streaming inputs for building interactive applications. General availability is planned for January.

Gemini 2.0 Flash paves the way for more agentic AI experiences with capabilities such as multimodal reasoning, long-context understanding, and native tool use. These capabilities enable applications like Project Astra, Project Mariner, and Jules. These prototypes showcase AI's ability to integrate seamlessly into daily activities, from research assistance to browser navigation and task execution. While still in the early stages, these developments promise to unlock practical applications of AI agents, helping users and developers achieve their goals efficiently.

Future Developments and Applications

Further advancements include improved latency, thanks to streaming capabilities and native audio understanding, enabling near-human conversational responsiveness. Google is working to extend these features to products like the Gemini app and new form factors, including prototype glasses. The trusted tester program is expanding to evaluate these features in novel applications, including real-world use cases for wearable AI.

In summary, Gemini 2.0 marked a significant advancement in AI, introducing multimodal capabilities and agentic models that could understand and perform tasks with human supervision. The new Flash model demonstrated enhanced performance, while prototypes like Project Astra and Mariner explored AI’s potential in real-world applications. By prioritizing safety, ethical development, and continuous research, Google is setting a new standard for AI innovation and its integration into diverse domains.

Posted in: AI Product News

Tags: Artificial Intelligence, Robotics