Mismatch in Google's Gemini AI Raises Questions

A recent report has cast doubt on how Google's Gemini AI model is evaluated. According to TechCrunch, a new internal guideline that Google passed down to contractors working on Gemini has raised concerns that the model could become more prone to producing inaccurate information on highly sensitive topics.

The new guideline instructs contractors not to skip prompts that require specialized domain knowledge. Instead, they must rate the parts they understand and add a note stating that they lack the necessary expertise for the rest. This has raised questions about how effectively human oversight can validate AI-generated content across diverse fields.

Google responded that the "raters" not only review content but also provide valuable feedback on style, format, and other factors. However, this response has done little to ease concerns about the accuracy of Gemini's evaluations and the potential biases in them.

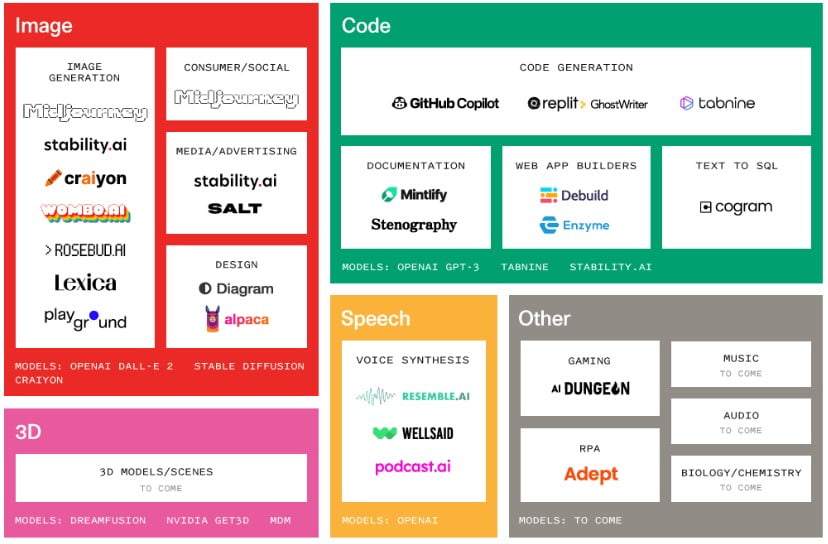

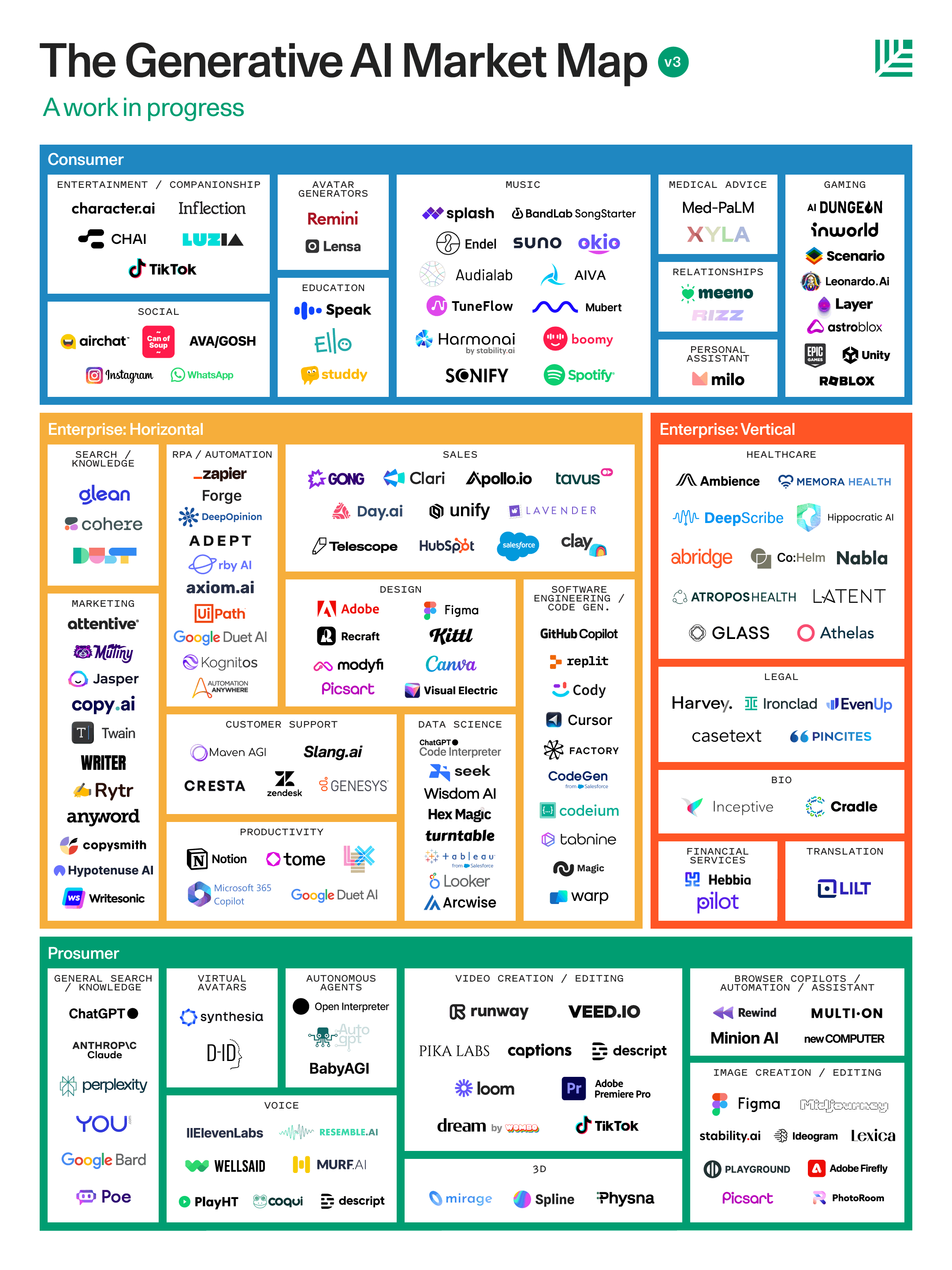

Thomas Randall, research lead at Info-Tech Research Group, highlighted a “hidden component” in the generative AI market landscape. Companies that rely on a gig economy of crowd workers for data...


Serial entrepreneur and seasoned software engineer, specializing in software development, architecture design, and generative AI. vpodk.com