ChatGPT shows Left-wing bias, study finds

Recent research has revealed that ChatGPT, an AI chatbot, demonstrates a Left-wing bias and censors content that it deems "unpalatable" in a manner reminiscent of China's DeepSeek.

Research Findings

During the study, ChatGPT was questioned on contentious topics such as military supremacy, free speech, government policies, religion, and equal rights. Its responses were then compared with real-world survey data to evaluate its ideological stance.

Expert Opinions

Dr. Fabio Motoki of the University of East Anglia’s Norwich Business School said that AI tools such as ChatGPT can harbor biases that may unintentionally influence perceptions and policies. The study's co-author added that biases in generative AI could exacerbate social divisions and undermine trust in democratic processes.

Impact on Public Discourse

The study also found that ChatGPT treated ideologies unevenly, tending to censor Right-wing content while showing more leniency towards Left-wing perspectives. Such uneven treatment was deemed detrimental to open and balanced dialogue within society.

Ensuring Fairness in AI

Experts emphasized the importance of interdisciplinary collaboration to develop AI systems that uphold fairness, accountability, and alignment with societal norms. They stressed the need to address biases in generative AI models to prevent further polarization and erosion of democratic values.

Research Implications

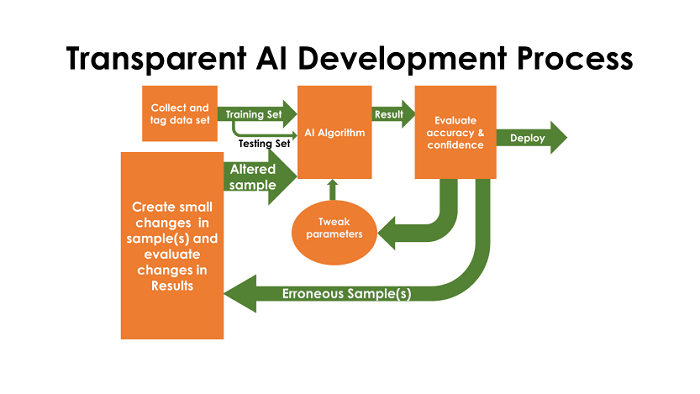

The team's findings also shed light on the opaque training processes of AI models, suggesting that biases may stem from the data ingested during training and human feedback loops. The researchers advocated for greater transparency and scrutiny in the development of AI systems to mitigate inherent biases.

The study, published in the Journal of Economic Behavior & Organization, underscores the critical need for ethical considerations in AI development and deployment.