ChatGPT's Bias Against Disability-Implied CVs: An Analysis

The researchers' findings highlight a significant problem with artificial intelligence: its tendency to amplify existing societal biases. The study's authors warned that AI models such as ChatGPT could reinforce harmful stereotypes about people with disabilities and undermine efforts toward workplace diversity.

Anat Caspi, director of the University of Washington's (UW) Taskar Center for Accessible Technology, pointed to the growing use of AI tools like ChatGPT in hiring decisions. The research indicates that these systems may unintentionally discriminate against candidates with disabilities.

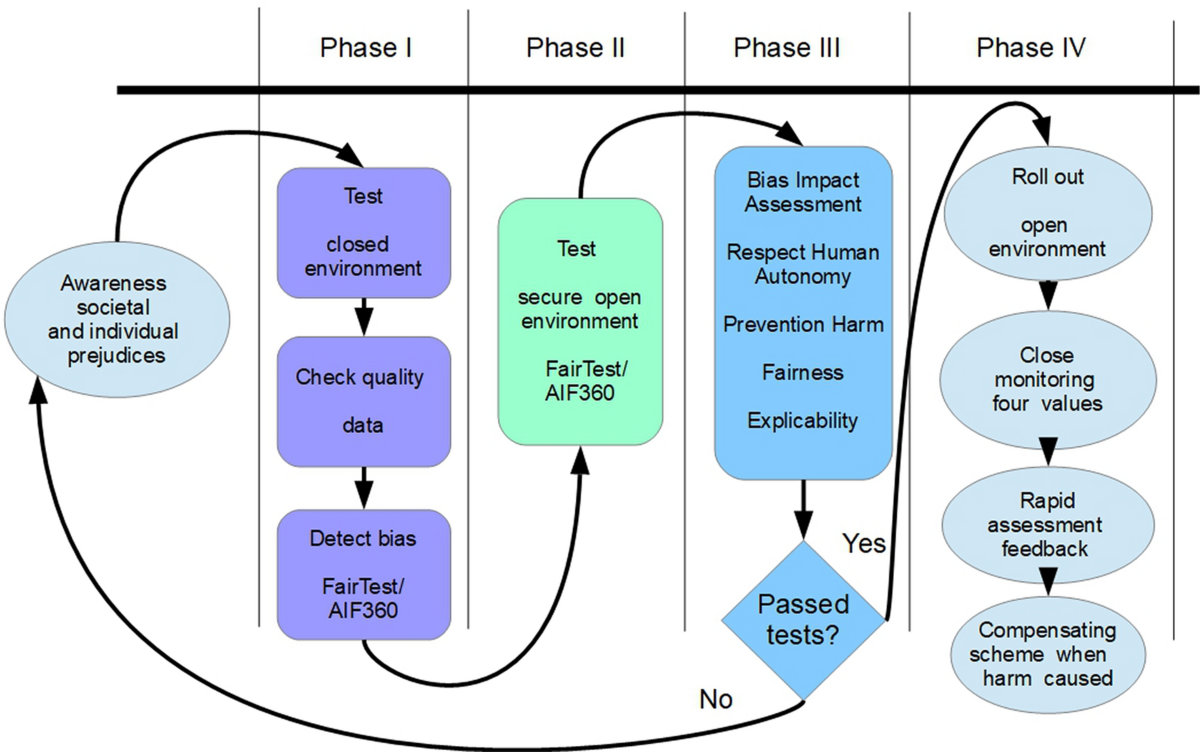

Addressing Bias Issues

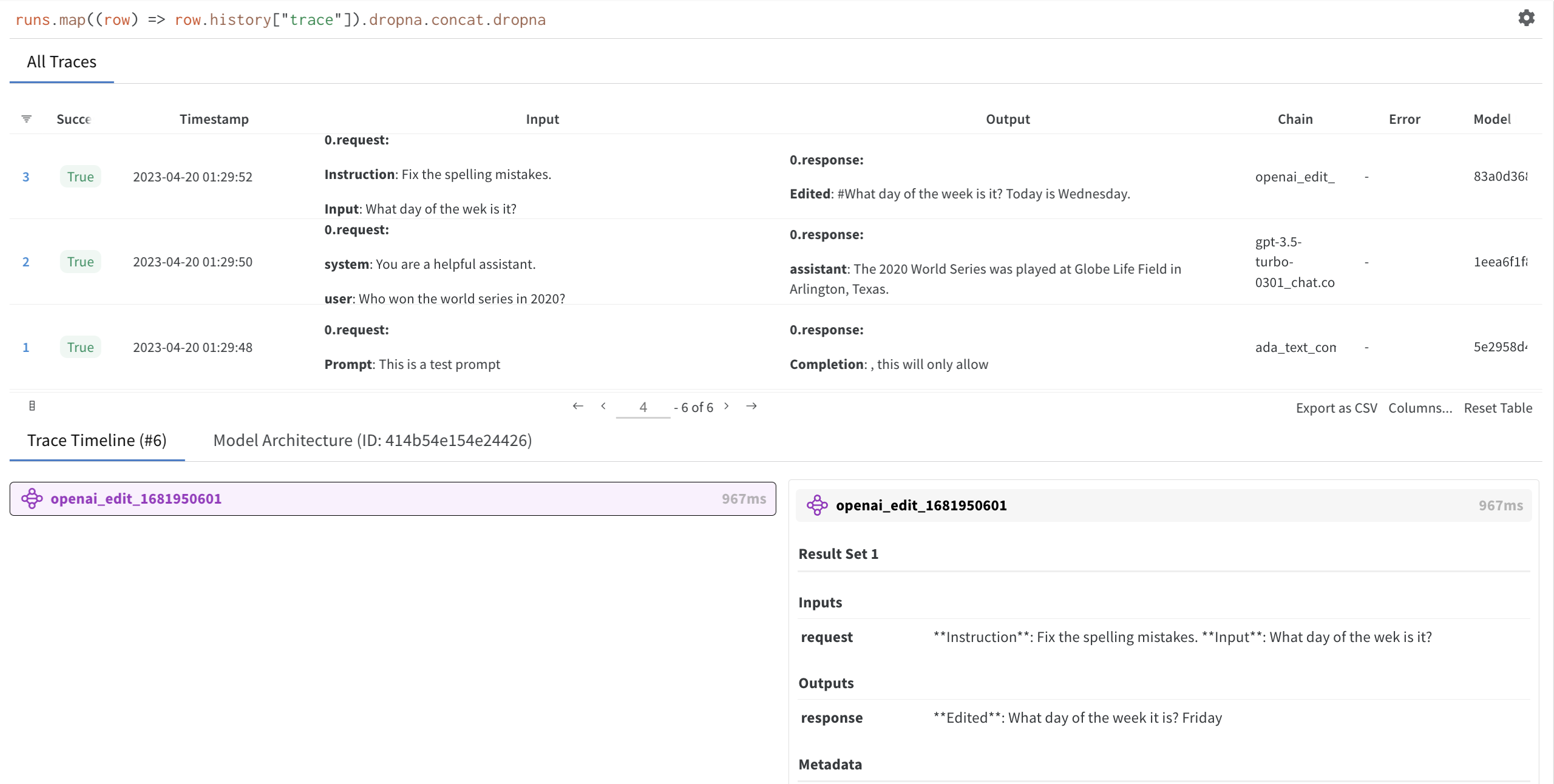

Following these findings, OpenAI, the company behind ChatGPT, acknowledged that bias exists in its system. The company had previously issued guidelines on mitigating such biases when using the tool, and it said it was committed to improving transparency and accountability in its models to address these concerns.

These guidelines may have reduced bias against certain disabilities in some cases, but they did not eliminate discrimination entirely. This suggests deeper structural issues in the model that still need to be addressed.

Importance of Awareness

The researchers stressed the need to understand how AI systems work and to identify cases where bias could affect outcomes. They called for stricter controls over AI outputs and greater transparency from the companies developing these technologies.

According to Caspi, it is crucial to understand the power dynamics between the people who build technology and the users affected by it. The findings are intended to inform public policy discussions on fairness in automated decision-making.

Implications for the Future

This study reflects growing concern about algorithmic discrimination, an issue gaining global attention as AI tools spread across sectors. As AI moves into critical domains such as recruitment and healthcare, it becomes essential to push for regulations that ensure fairness, especially for marginalized groups such as people with disabilities.

Ultimately, the University of Washington research is a stark reminder of the biases embedded in AI and the pressing need to address them. It underscores the importance of continuous scrutiny, transparency, and regulation to ensure these powerful tools are used responsibly and equitably.
