[All About AI] The Origins, Evolution & Future of AI

AI-powered robots that walk, talk, and think like humans have long been a staple of sci-fi comics and movies. However, AI and robotics are no longer merely works of fiction; they have become a reality. Now that AI is here and transforming people’s lives, it is worth looking back at AI’s origins and the milestones that have shaped the technology’s evolution, and considering what the future might hold.

Figure 1. Chapter I Overview of AI Technology -LIU SHEN & ASSOCIATES

The birth of AI can be traced back to the 1950s. In 1950, British mathematician Alan Turing proposed that machines could “think,” introducing what is now known as the “Turing test” to evaluate this capability. This is widely recognized to be the first study to present the concept of AI. In 1956, the Dartmouth Summer Research Project on Artificial Intelligence formally introduced the term “AI” to the wider world for the first time. Held in the U.S. state of New Hampshire, the conference fueled further debates on whether machines could learn and evolve like humans.

The Evolution of AI

During the same decade, the development of artificial neural network¹ models marked a significant milestone in computing history. In 1957, U.S. neuropsychologist Frank Rosenblatt introduced the “perceptron” model², empirically demonstrating that computers can learn and recognize patterns. This practical application built on the “neural network theory” developed in 1943 by neurophysiologists Warren McCulloch and Walter Pitts, who distilled the interactions between nerve cells into a simple computational model. Although these early breakthroughs raised high expectations, research in the field soon stagnated due to limitations in computing power, logical frameworks, and data availability.
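To make the idea of a machine that learns patterns more concrete, the following is a minimal sketch of a perceptron-style learning rule in Python. It is an illustration under stated assumptions rather than Rosenblatt's original formulation: the function names, the learning rate, the epoch count, and the choice of the logical AND function as training data are all hypothetical.

```python
def predict(weights, bias, inputs):
    """Fire (return 1) when the weighted sum of the inputs exceeds zero."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Learn weights for a single threshold neuron from labeled samples."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            # error is -1, 0, or +1; a correct prediction leaves the weights unchanged.
            error = target - predict(weights, bias, inputs)
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


# Assumed toy data set: the logical AND of two binary inputs.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_SAMPLES)
and_predictions = [predict(weights, bias, inputs) for inputs, _ in AND_SAMPLES]
print(and_predictions)
```

No rule for AND is written into the code. The weights start at zero and are adjusted only when a prediction is wrong, which is the sense in which the model learns from examples.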

Figure 2. Advanced Project in Artificial Neural Networks in Machine Learning

The Emergence of Expert Systems

Then, in the 1980s, “expert systems” emerged, which operated solely on human-defined rules. These systems could make automated decisions to perform tasks such as diagnosis, categorization, and analysis in practical fields such as medicine, law, and retail. However, expert systems of this period were limited by their reliance on rules set by humans and struggled to handle the complexities of the real world.
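A rule-based system of this kind can be sketched in a few lines of Python. The example below is a simplified illustration, not a reconstruction of any historical expert system: the rules, the fact names, and the `forward_chain` helper are hypothetical and chosen only to show how conclusions follow from human-written rules.

```python
# Hypothetical rules: (facts that must all be present, fact to conclude).
RULES = [
    ({"fever", "cough"}, "possible_flu"),
    ({"possible_flu", "short_of_breath"}, "refer_to_doctor"),
]


def forward_chain(facts, rules):
    """Keep firing rules whose conditions are all known until nothing new is derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


derived = forward_chain({"fever", "cough", "short_of_breath"}, RULES)
unmatched = forward_chain({"headache"}, RULES)

print(sorted(derived))
print(sorted(unmatched))
```

The second call shows the limitation described above. An input that no rule anticipates produces no conclusion at all, because the system knows only what its authors wrote down.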

¹ Neural network: A machine learning program, or model, that makes decisions in a manner similar to the human brain. It creates an adaptive system to make decisions and learn from mistakes.

² Perceptron: The simplest form of a neural network. It is a model of a single neuron that can be used for binary classification problems, enabling it to determine whether an input belongs to one class or another.

The Shift to Machine Learning

In the 1990s, AI evolved from following human commands to autonomously learning and discovering new rules by adopting machine learning algorithms. This became possible due to the advent of digital technology and the internet, which provided access to vast amounts of online data. At this point, AI was able to unearth new rules even humans could not discover. This period marked the start of renewed momentum for AI research, based on machine learning.

Figure 3. Advanced Topics in Artificial Neural Networks - YouTube

The Rise of Deep Learning

While the 1990s presented opportunities for AI to grow, the technology’s evolution had already seen its share of setbacks. In 1969, early artificial neural network research hit a roadblock when it was discovered that the perceptron model could not solve nonlinear problems³, leading to a prolonged downturn in the field. However, computer scientist Geoffrey Hinton, often hailed as the “godfather of deep learning,” breathed new life into artificial neural network research with his groundbreaking ideas.
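The exclusive-or (XOR) function is the classic example of a nonlinear problem that a single perceptron cannot handle. The sketch below is an illustration under stated assumptions, not a proof: it searches a coarse, arbitrarily chosen grid of weights for a single threshold unit that reproduces a truth table. The grid range and the helper names are hypothetical.

```python
from itertools import product


def threshold_unit(w1, w2, bias, x1, x2):
    """A single neuron with two inputs and a hard threshold at zero."""
    return 1 if w1 * x1 + w2 * x2 + bias > 0 else 0


def find_linear_solution(truth_table, grid):
    """Return the first (w1, w2, bias) on the grid that matches the table, else None."""
    for w1, w2, bias in product(grid, repeat=3):
        if all(threshold_unit(w1, w2, bias, x1, x2) == y
               for (x1, x2), y in truth_table.items()):
            return (w1, w2, bias)
    return None


GRID = [i / 2 for i in range(-8, 9)]  # assumed search range: -4.0 to 4.0 in steps of 0.5
AND = {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 1}
XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

and_solution = find_linear_solution(AND, GRID)
xor_solution = find_linear_solution(XOR, GRID)

print("AND:", and_solution)
print("XOR:", xor_solution)
```

The search finds weights for AND but none for XOR. A finer grid would not help, since no single straight line separates the two XOR classes.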

For example, in 1986, Hinton applied the backpropagation⁴ algorithm to a “multilayer perceptron” model, essentially a stack of artificial neural network layers, showing that it could overcome the limitations of the initial perceptron model. This seemed to spark a revival in artificial neural network research, but as the depth of the networks increased, issues began to emerge in the learning process and its outcomes.
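The core of backpropagation is the chain rule applied layer by layer, from the output back toward the input. The following is a minimal sketch for a network with two inputs, two hidden units, and one output. The network size, sigmoid activation, squared-error loss, and all numeric weights are assumptions made for illustration and are not taken from the 1986 work. The final lines compare one backpropagated gradient with a finite-difference estimate as a sanity check.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Forward pass: inputs -> two sigmoid hidden units -> one sigmoid output."""
    w_hidden, b_hidden, w_out, b_out = params
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w_hidden, b_hidden)]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + b_out)
    return hidden, output


def loss(params, x, target):
    _, output = forward(params, x)
    return 0.5 * (output - target) ** 2


def backprop(params, x, target):
    """Gradients of the squared error with respect to every weight and bias."""
    _, _, w_out, _ = params
    hidden, output = forward(params, x)
    # Error signal at the output unit (loss derivative times sigmoid derivative).
    delta_out = (output - target) * output * (1.0 - output)
    grad_w_out = [delta_out * h for h in hidden]
    # Pass the error signal backward through the output weights to each hidden unit.
    delta_hidden = [delta_out * w * h * (1.0 - h) for w, h in zip(w_out, hidden)]
    grad_w_hidden = [[d * xi for xi in x] for d in delta_hidden]
    return grad_w_hidden, delta_hidden, grad_w_out, delta_out


# Arbitrary illustrative weights and a single training example.
params = ([[0.5, -0.3], [0.8, 0.2]], [0.1, -0.1], [0.7, -0.6], 0.05)
x, target = (1.0, 0.0), 1.0
grad_w_hidden, grad_b_hidden, grad_w_out, grad_b_out = backprop(params, x, target)

# Finite-difference estimate for the first hidden weight, used only as a check.
eps = 1e-6
bumped = ([[0.5 + eps, -0.3], [0.8, 0.2]], [0.1, -0.1], [0.7, -0.6], 0.05)
numeric_grad = (loss(bumped, x, target) - loss(params, x, target)) / eps

print(grad_w_hidden[0][0], numeric_grad)
```

Because the hidden-layer gradients reuse the output error signal, the cost of one backward pass is comparable to one forward pass, which is what makes training multilayer networks practical.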

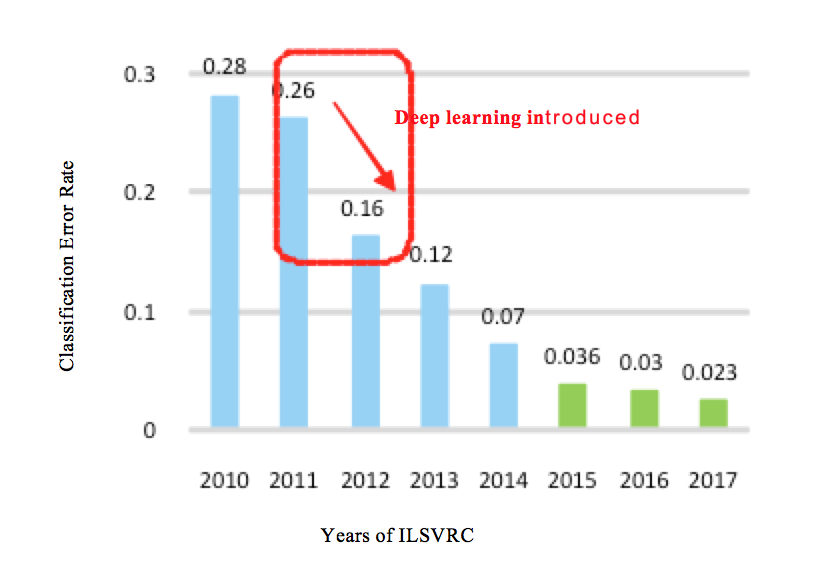

In 2012, deep learning made a historic leap forward when Hinton’s team won the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) with their deep learning-based model, AlexNet. This triumph demonstrated deep learning’s immense power: the model recorded a top-5 error rate of just 16.4%, far below the 25.8% of the previous year’s winner.

The Future of AI

Deep learning, a focal point of AI research, has grown rapidly since the 2010s for two primary reasons. First, advances in computer systems, including graphics processing units (GPUs), have driven AI development. Originally designed for graphics processing, GPUs can process repetitive and similar tasks in parallel. For such workloads, this capability enables GPUs to process data faster than central processing units (CPUs). In the 2010s, general-purpose computing on GPUs (GPGPU) became widespread, enabling GPUs to be used for broader computational tasks beyond graphics rendering and allowing them to replace CPUs in some instances. The use of GPUs has increased further as they have been adopted for training artificial neural networks, accelerating the development of deep learning. Deep learning repeats the same computations many times over large datasets to extract features, so it benefits directly from the parallel processing capability of GPUs.
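The reason this kind of workload parallelizes well can be sketched in Python. In a neural network layer, each output value is a dot product that depends only on one row of weights and the shared input, so the rows can be computed independently. The example below uses a thread pool purely as a stand-in for GPU hardware; it illustrates the independence of the work items, not GPU performance, and the weights, inputs, and function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vector):
    return sum(w * v for w, v in zip(row, vector))


def layer_sequential(weights, inputs):
    """Compute each output one after another, as a single core would."""
    return [dot(row, inputs) for row in weights]


def layer_parallel(weights, inputs, workers=4):
    """Hand each row to a separate worker; no row needs another row's result."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: dot(row, inputs), weights))


# Arbitrary illustrative values.
WEIGHTS = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [0, -1, 1]]
INPUTS = [1, 0, -1]

sequential_out = layer_sequential(WEIGHTS, INPUTS)
parallel_out = layer_parallel(WEIGHTS, INPUTS)

print(sequential_out)
print(parallel_out)
```

Both versions return the same values. A GPU applies the same idea at a much larger scale, running thousands of such independent operations at once.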

Second, the expansion of data resources has fueled progress in deep learning. Training an artificial neural network requires vast amounts of data. In the past, data was primarily sourced from users manually inputting information into computers. However, the explosion of the internet and search engines in the 1990s dramatically increased the volume and range of data available for processing. In the 2000s, the advent of technologies such as smartphones and the Internet of Things (IoT) contributed to the birth of the Big Data era, in which real-time information flows from every corner of the globe. Deep learning algorithms train on this large quantity of data and grow increasingly sophisticated as a result. This data revolution has therefore set the stage for significant advancements in deep learning technology.